The Missing Layer: Why Enterprise Agents Need a "System of Agency"

Moving beyond "Chat with your Data" to governing the decisions your AI makes.

The Agentic Shift

We are witnessing a critical transition in artificial intelligence. The move from Generative AI (which creates content) to Agentic AI (which executes tasks) changes everything about how organizations must approach their AI infrastructure.

Most organizations are attempting to build autonomous agents on top of their existing "Systems of Record": ERPs, CRMs, and legacy databases designed decades ago. These systems excel at storing state: inventory levels, customer records, transaction histories. But they were never designed to capture something equally critical: the reasoning behind decisions.

Consider a simple scenario. Your database tells you that inventory for Product X dropped to 50 units yesterday. It might even tell you that an automated reorder was triggered. But here's what it cannot tell you: Why did the system decide to reorder? What factors influenced the decision? Which policy authorized it? What alternatives were considered and rejected?

This gap becomes dangerous when you deploy autonomous agents. A database captures state (inventory is low). It does not capture intent or reasoning (we ordered more because we detected a pattern indicating an upcoming shortage, and our supply chain policy prioritizes stock availability over carrying costs for this product category).

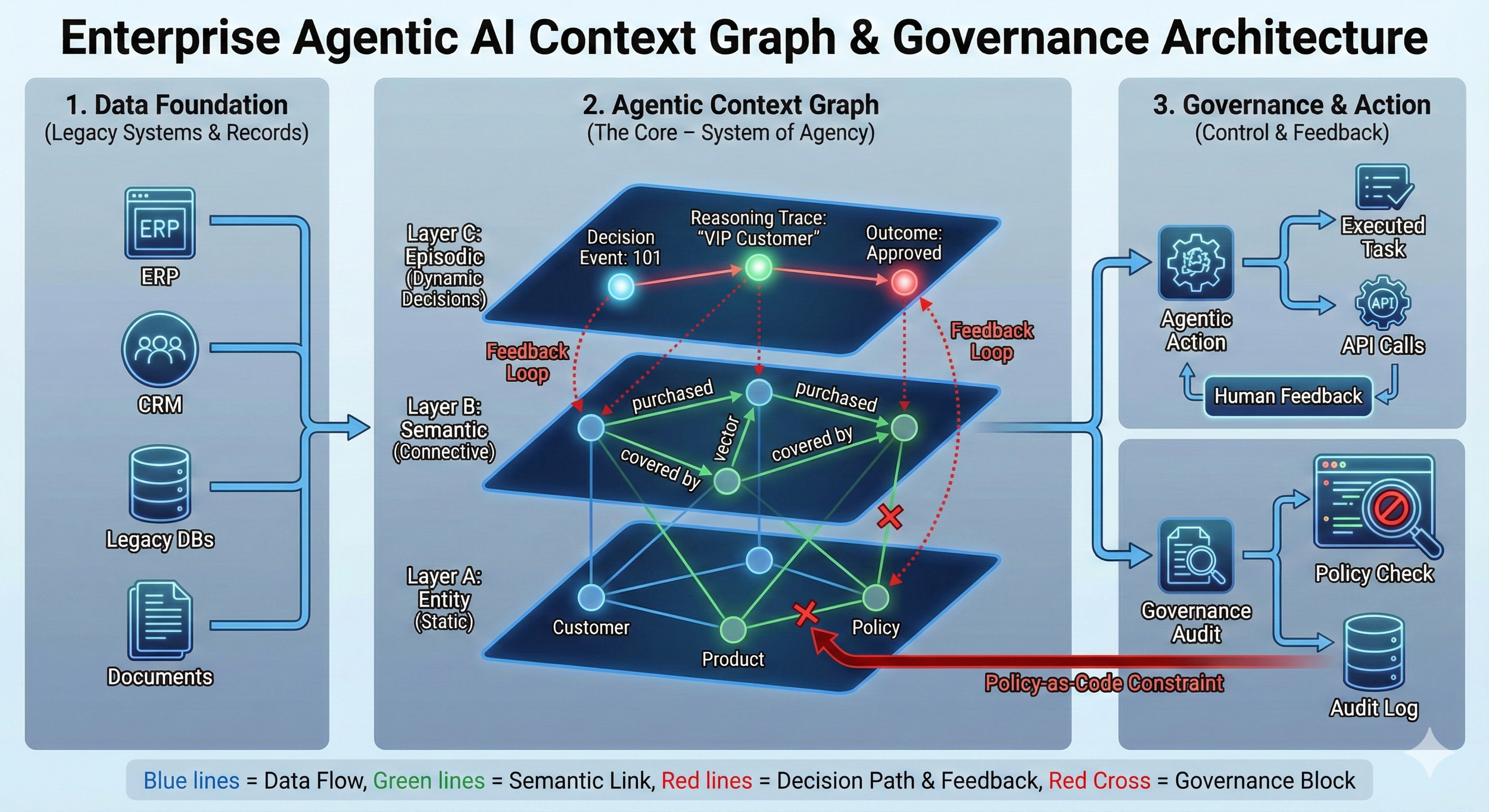

To deploy safe autonomous agents at scale, enterprises need a new data layer: a Context Graph that acts as a "System of Agency." This layer captures decisions, context, and governance in real time, creating an auditable record of not just what happened, but why it happened and what constraints shaped those choices.

The "Amnesia" Problem in Modern AI

Current LLM-based agents possess remarkable capabilities. They can analyze complex documents, write sophisticated code, and orchestrate multi-step workflows. But they suffer from a critical flaw: they are forgetful.

Once an agent completes a task, the reasoning that led to its decisions evaporates. The agent moves on to the next task with no memory of how or why it made previous choices. This creates what we call the "amnesia problem."

The Black Box Risk

When an agent takes an action (approving a loan, authorizing a refund, deploying code to production) and we only log the result (loan approved, refund processed, code deployed), we lose the audit trail of why it happened.

This creates serious risks:

Compliance failures: Regulators don't just want to know what happened. They want to understand the decision-making process. Without captured reasoning, you cannot demonstrate that your automated systems followed appropriate protocols.

Undetectable bias: If an agent consistently makes questionable decisions but you only see the outcomes, you cannot identify the flawed reasoning pattern causing the problem.

Inability to improve: When an agent makes a mistake, you need to understand the chain of thought that led to the error. Without this, you're forced to guess at fixes rather than targeting the actual problem.

Lost institutional knowledge: Every decision an agent makes contains valuable information about how your business operates under specific conditions. Without capturing this, you're throwing away data that could train better future agents.

The solution requires moving beyond logging outputs to capturing "Decision Data": complete episodes of agent behavior that preserve the reasoning, context, and constraints active at the moment of decision.
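To make "Decision Data" concrete, here is a minimal sketch of what one captured episode might look like as a record. The class name, field names, and example values are illustrative assumptions, not a standard schema; the point is only that reasoning, inputs, and active policies are stored alongside the outcome.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class DecisionEpisode:
    """One captured decision. All field names are hypothetical."""
    agent_id: str
    action: str
    outcome: str
    reasoning: str                   # the agent's stated rationale
    policies_applied: list[str]      # constraints active at decision time
    inputs_consulted: list[str]      # data the agent read before deciding
    alternatives_rejected: list[str] = field(default_factory=list)
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(episode: DecisionEpisode) -> str:
    """Serialize the whole episode, not just the outcome."""
    return json.dumps(asdict(episode), sort_keys=True)


# Example values are invented to mirror the inventory scenario.
episode = DecisionEpisode(
    agent_id="reorder_agent",
    action="reorder_product_x",
    outcome="reorder_placed",
    reasoning="Detected a pattern indicating an upcoming shortage.",
    policies_applied=["Supply_Chain_Availability_First"],
    inputs_consulted=["inventory:Product X"],
    alternatives_rejected=["wait_for_next_cycle"],
)

print(to_log_line(episode))
```

A traditional log would keep only the `outcome` field; everything else in the record is what an auditor or a future agent would otherwise have to guess.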

The Solution: The Agentic Context Graph

To solve the amnesia problem, we must move beyond flat databases and unstructured vector stores. The solution is a Temporal Knowledge Graph: a living structure that acts as the "System of Agency."

This architecture does not replace your existing data infrastructure. Instead, it layers meaning and history on top of it. Think of it as a three-tiered stack where each layer builds upon the previous one.

Layer A: The Entity Layer (The "Nouns")

This is your foundation, the organization's existing "System of Record." It consists of the structured data living in SQL databases, ERPs like SAP or Oracle, and CRMs like Salesforce.

What it holds: Static facts. Customer: Acme Corp, Product: Widget X, Inventory: 500 units.

The limitation: This layer tells you the state of the world, but stays silent on how or why it reached that state. It's a snapshot, not a movie. You see where things are, but not how they got there or where they're going.

This layer is necessary but insufficient for agentic systems. An agent needs more than current state; it needs context, relationships, and history.

Layer B: The Semantic Layer (The "Meaning")

Sitting above the entities is the connective tissue, powered by vector databases and ontologies. This layer maps the hidden relationships between isolated data points.

What it holds: Contextual links. It understands that Widget X is a type of Industrial Component, and that Acme Corp is a subsidiary of Global Industries.

The role: This layer allows an agent to reason across organizational silos. If an agent encounters a policy applicable to Global Industries, the semantic layer indicates that the policy likely applies to Acme Corp as well.
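The subsidiary example can be sketched as a walk up a parent chain. This is a simplified illustration: the dictionaries stand in for whatever ontology or graph store actually holds the links, and the policy names are hypothetical. The function returns candidates only, since the text says a parent's policy "likely" applies rather than always applies.

```python
# Hypothetical "subsidiary_of" links supplied by the semantic layer.
PARENT_OF = {
    "Acme Corp": "Global Industries",
    "Global Industries": None,
}

# Hypothetical policies attached directly to each entity.
POLICIES = {
    "Global Industries": ["Net_30_Payment_Terms"],
    "Acme Corp": ["Priority_Shipping"],
}


def candidate_policies(entity: str) -> list[str]:
    """Collect policies on the entity and on every ancestor above it."""
    found: list[str] = []
    seen: set[str] = set()
    current = entity
    # The `seen` set guards against accidental cycles in the hierarchy.
    while current is not None and current not in seen:
        seen.add(current)
        found.extend(POLICIES.get(current, []))
        current = PARENT_OF.get(current)
    return found


print(candidate_policies("Acme Corp"))
```

An agent reasoning about Acme Corp would then see both its own policy and the one inherited from Global Industries, even if the two live in separate source systems.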

The semantic layer turns your data from isolated facts into a web of meaning. It's what enables an agent to understand that a "rush order" from a "VIP customer" in your "primary market" should be handled differently than a standard order, even if those designations live in separate systems.

Layer C: The Episodic Layer (The "Verbs" & "Decisions")

This is the critical missing piece. The Episodic Layer captures the dynamic history of agent activity. It treats "Decisions" and "Reasoning" as first-class citizens in the data graph, not just ephemeral logs destined for deletion.

What it holds: The agent's "Chain of Thought."

Nodes: Event: Risk_Assessment_101, Reasoning: "Credit score is borderline, but cash flow is strong", Decision: Approved.

Edges: Decision: Approved was constrained by Policy: Risk_Threshold_V2, Decision: Approved was made by Agent: Credit_Reviewer_3, Decision: Approved modified Customer: Acme Corp.

The power: By graph-linking a decision back to the specific policy that authorized it, you create traceability. You can query the graph to ask, "Show me every decision made by an agent that relied on the 'Emergency Override' policy last month."
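A policy-scoped query like this can be sketched over plain (source, relation, target) triples. The decision IDs, dates, and relation names below are invented for illustration, and a real deployment would run the equivalent query in its graph database's own query language rather than in application code.

```python
from datetime import datetime

# Edges as (source, relation, target) triples. IDs are hypothetical.
EDGES = [
    ("Decision:D1", "constrained_by", "Policy:Risk_Threshold_V2"),
    ("Decision:D1", "made_by", "Agent:Credit_Reviewer_3"),
    ("Decision:D1", "modified", "Customer:Acme Corp"),
    ("Decision:D2", "constrained_by", "Policy:Emergency_Override"),
    ("Decision:D2", "made_by", "Agent:Credit_Reviewer_3"),
    ("Decision:D3", "constrained_by", "Policy:Emergency_Override"),
    ("Decision:D3", "made_by", "Agent:Credit_Reviewer_3"),
]

# Timestamps for each decision node (illustrative values).
DECIDED_AT = {
    "Decision:D1": datetime(2025, 3, 4),
    "Decision:D2": datetime(2025, 3, 18),
    "Decision:D3": datetime(2025, 1, 9),
}


def decisions_under_policy(policy: str, start: datetime, end: datetime) -> list[str]:
    """Decisions linked to `policy` with a timestamp in [start, end)."""
    return sorted(
        src
        for src, rel, dst in EDGES
        if rel == "constrained_by"
        and dst == policy
        and start <= DECIDED_AT[src] < end
    )


march = decisions_under_policy(
    "Policy:Emergency_Override", datetime(2025, 3, 1), datetime(2025, 4, 1)
)
print(march)
```

Because the policy link is an edge rather than a string buried in a log message, the filter is a structural match instead of a text search.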

Even more valuable, you can trace backwards from an outcome to understand the full decision chain. If a customer complains about a charge, you can visualize the exact path the agent took: which data it consulted, which policies it checked, which alternatives it considered, and why it chose the action it did.

By integrating these three layers, you transform your data from a static warehouse into a navigable map of intent. Agents can learn from the past rather than just repeating it. More critically, you can audit, explain, and improve agent behavior in ways impossible with traditional logging.


Governance is Not a Gatekeeper, It's the Map

Traditional governance often acts as a bottleneck. A human must stop the process to review a document, approve a request, or audit a log. In an agentic world, this manual friction destroys the very speed and autonomy we are trying to achieve.

The solution is to move governance out of the "review queue" and into the topology of the graph itself. When you treat governance as "Policy-as-Code," you are not just policing the agent, you are shaping the reality it operates in.

Governance as Topology (The "Fog of War")

In the Context Graph, policies are not text documents sitting in a PDF. They are constraints on the edges between nodes.

Think of it like GPS navigation. If a road is closed, the GPS simply doesn't offer it as a route. You don't need a pop-up warning saying "Road Closed"; the road just doesn't appear in your available options.

The mechanism: An agent uses a pathfinding algorithm (similar to A* or Dijkstra) to figure out how to get from Task: Delete User Data to Goal: Compliance Complete.

The constraint: If the graph imposes a mandatory path requirement (for example, "You cannot traverse to Action: Delete without passing through Node: Legal_Approval_Token"), the agent cannot "see" any valid path to the action that skips the approval node.

The result: You don't need a human watching the agent every second. The agent is structurally incapable of breaking the rule because no valid pathway exists in its world model.

This is governance by design rather than governance by enforcement. The agent isn't choosing to follow the rules; it's operating in an environment where the rules define the only possible paths forward.
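The mandatory-path idea can be sketched with a small directed graph in which the only edge into the delete action comes from the approval node. This is an illustrative assumption about how such a constraint might be encoded: the intermediate node names are hypothetical, and a plain breadth-first search stands in for the A* or Dijkstra search mentioned above, since the edges here are unweighted.

```python
from collections import deque

# Directed edges of a hypothetical task graph. The only way into
# "Action:Delete" is through "Node:Legal_Approval_Token".
GRAPH = {
    "Task:Delete_User_Data": ["Node:Identify_Records"],
    "Node:Identify_Records": ["Node:Legal_Approval_Token"],
    "Node:Legal_Approval_Token": ["Action:Delete"],
    "Action:Delete": ["Goal:Compliance_Complete"],
    "Goal:Compliance_Complete": [],
}


def find_path(graph, start, goal, unavailable=frozenset()):
    """Breadth-first search that skips nodes the agent may not enter."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph.get(node, []):
            if nxt not in visited and nxt not in unavailable:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # no valid route exists in the agent's world model


start, goal = "Task:Delete_User_Data", "Goal:Compliance_Complete"

# Approval token present: a route exists and it runs through the token.
with_token = find_path(GRAPH, start, goal)

# Approval token not granted: the goal is unreachable.
without_token = find_path(GRAPH, start, goal, {"Node:Legal_Approval_Token"})

print(with_token)
print(without_token)
```

Nothing in `find_path` knows about legal approval. The rule lives entirely in which edges exist, which is the sense in which the constraint is structural rather than enforced.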

Scenario: The Unauthorized Transfer

Consider an agent tasked with resolving a billing dispute.

Without Graph Governance: The agent might hallucinate that it has authority, call the Stripe API, and refund $10,000. You discover this a week later during an audit.

With Graph Governance: The agent attempts to map a path to the API: Refund_Execute node. The graph topology detects that the amount ($10,000) exceeds Policy: Auto_Approval_Limit ($500).

The edge connecting the agent to the API is dynamically severed.

A new edge appears: Path: Request_Manager_Approval.

The agent effectively hits a roadblock and is rerouted to the only available path: asking a human for help.

The agent doesn't make a choice to escalate. The graph structure makes escalation the only viable route forward. The governance isn't enforced through monitoring; it's enforced through topology.
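One way to picture a "dynamically severed" edge is to attach a guard condition to each edge and only expose edges whose guard passes for the current request. The sketch below assumes that encoding; the `Agent:Billing` node name and the guard representation are hypothetical, while the $500 limit comes from the scenario.

```python
from typing import Callable

AUTO_APPROVAL_LIMIT = 500  # dollars, taken from the scenario

# Each edge carries a guard deciding whether it exists for a given amount.
EDGES: list[tuple[str, str, Callable[[float], bool]]] = [
    ("Agent:Billing", "API:Refund_Execute",
     lambda amount: amount <= AUTO_APPROVAL_LIMIT),
    ("Agent:Billing", "Path:Request_Manager_Approval",
     lambda amount: amount > AUTO_APPROVAL_LIMIT),
]


def visible_targets(source: str, amount: float) -> list[str]:
    """Nodes the agent can reach from `source` for a refund of `amount`."""
    return [dst for src, dst, guard in EDGES if src == source and guard(amount)]


print(visible_targets("Agent:Billing", 10_000))
print(visible_targets("Agent:Billing", 120))
```

For the $10,000 refund the refund API never appears among the agent's options, so escalation is the only move available rather than a choice the agent has to get right.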

Forensic Explainability: The "Why" Audit

When things do go wrong, the Context Graph changes the nature of the investigation. In traditional systems, you examine what happened (the log). In an agentic system, you examine why.

Because every decision is a node linked to a policy, an auditor can run a simple graph query:

"Show me the path between Decision: Release_Code_to_Prod and Policy: Security_Check."

If the graph shows a direct line that bypassed the Test_Suite_Pass node, you don't just know the agent failed; you know exactly which governance constraint was missing or broken. You can see whether:

The policy node existed but wasn't properly linked to the decision pathway

The agent found an alternative route that shouldn't have been available

The weighting on the security check was too low, allowing the agent to deprioritize it

A human override was used, and if so, who authorized it

This turns compliance from a guessing game into a reproducible graph query. You're not reconstructing events from sparse logs; you're walking the exact path the agent took, seeing every choice point and every constraint that shaped those choices.
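As a rough sketch of such an audit, suppose each decision's recorded path is available as an ordered list of node names. The required-checkpoint table and the `Event:Build_Finished` node are assumptions made for illustration; the other node names follow the release example.

```python
# Checkpoints that must appear before a decision (hypothetical table).
REQUIRED_BEFORE = {
    "Decision:Release_Code_to_Prod": [
        "Node:Test_Suite_Pass",
        "Policy:Security_Check",
    ],
}


def missing_checkpoints(recorded_path: list[str], decision: str) -> list[str]:
    """Required nodes that do not appear before `decision` in the path."""
    if decision not in recorded_path:
        raise ValueError(f"{decision} is not on the recorded path")
    prefix = recorded_path[: recorded_path.index(decision)]
    return [node for node in REQUIRED_BEFORE.get(decision, []) if node not in prefix]


# A recorded path in which the agent skipped the test suite.
path = [
    "Event:Build_Finished",
    "Policy:Security_Check",
    "Decision:Release_Code_to_Prod",
]

print(missing_checkpoints(path, "Decision:Release_Code_to_Prod"))
```

The output names the exact checkpoint that was bypassed, which is the starting point for asking whether the node was unlinked, deprioritized, or overridden by a human.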

The Flywheel: Reinforcement Learning from Governance (RLFG)

The most powerful aspect of the Context Graph approach is that governance and learning become the same activity.

Closing the Loop

When a human corrects an agent's decision, that correction isn't just a "fix"; it becomes data that reshapes the graph itself.

The mechanism: A compliance officer reviews an agent's decision to approve a high-risk transaction. They flag it as inappropriate, even though it technically followed the policy. This flag penalizes that specific path in the graph by increasing its traversal cost.

The result: Future agents "sense" the resistance on that path and avoid making the same mistake. They can still traverse it if no better option exists, but the added cost means they'll naturally explore alternative routes first.
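The effect of a flag on route selection can be sketched with Dijkstra's algorithm over a weighted graph. The node names, edge costs, and penalty size are all invented for illustration; how large a penalty one reviewer flag should carry is a design decision the text leaves open.

```python
import heapq


def cheapest_path(graph, start, goal):
    """Dijkstra over {node: {neighbor: cost}}; returns (cost, path) or None."""
    frontier = [(0.0, start, [start])]
    settled = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for nxt, step in graph.get(node, {}).items():
            if nxt not in settled:
                heapq.heappush(frontier, (cost + step, nxt, path + [nxt]))
    return None


def apply_flag(graph, src, dst, penalty=5.0):
    """Record human feedback by raising the cost of one edge in place."""
    graph[src][dst] += penalty


# Hypothetical decision graph with illustrative costs.
graph = {
    "Request:High_Risk_Txn": {
        "Action:Auto_Approve": 1.0,
        "Action:Manual_Review": 2.0,
    },
    "Action:Auto_Approve": {"Goal:Resolved": 1.0},
    "Action:Manual_Review": {"Goal:Resolved": 1.0},
    "Goal:Resolved": {},
}

before = cheapest_path(graph, "Request:High_Risk_Txn", "Goal:Resolved")
apply_flag(graph, "Request:High_Risk_Txn", "Action:Auto_Approve")
after = cheapest_path(graph, "Request:High_Risk_Txn", "Goal:Resolved")

print(before)
print(after)
```

The flagged edge is still present after the penalty, so an agent could take it if every other route were removed or cost more; it is discouraged, not forbidden.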

The Learning Dynamic

This creates a learning flywheel:

Agents generate decision data as they work, creating new nodes and edges in the graph

Humans provide feedback on agent decisions, adjusting weights and adding constraints

The graph topology evolves, encoding institutional knowledge about what constitutes good judgment

Future agents inherit this knowledge, making better decisions without explicit retraining

Unlike traditional machine learning, which requires collecting datasets and retraining models, RLFG happens continuously. Every piece of feedback immediately influences the next agent that needs to navigate a similar decision.

This is closer to how human organizations learn. When a manager corrects a junior employee's decision, that correction doesn't just fix one mistake; it teaches the junior employee better judgment. The Context Graph does the same thing for your digital workforce.

Over time, your governance policy evolves from a static document that no one reads into a living dataset that actively trains your agents. The graph becomes a map of not just what your organization does, but how it thinks.

Conclusion: Building Your "System of Agency"

Data quality in the agentic era is no longer just about clean rows and columns. It's about clean decision history.

Your existing Systems of Record tell you where you are. The Context Graph tells you how you got there and guides where you should go next. This layer, the System of Agency, is what separates organizations that merely experiment with AI agents from those that deploy them safely at scale.

The architecture outlined here is not theoretical. The components exist today:

Graph databases like Neo4j and Amazon Neptune provide the storage layer

Vector databases like Pinecone and Weaviate enable the semantic layer

Agent frameworks like LangGraph and CrewAI can integrate with graph-based memory systems

Policy engines can encode governance rules as graph constraints

What's missing is not the technology; it's the strategic decision to treat decision data as a first-class asset, as important as your customer data or financial records.

Start by capturing Decision Data today. Before you deploy your next autonomous agent, ask: How will we know why it made each choice? What will the audit trail look like? How will we incorporate human feedback to improve future decisions?

The organizations that answer these questions now, that build their System of Agency before they scale their agent deployments, will be the ones that successfully navigate the transition from AI experiments to AI-driven operations.

Don't just build agents. Build the memory systems they need to operate safely, learn continuously, and earn the trust required for true autonomy.