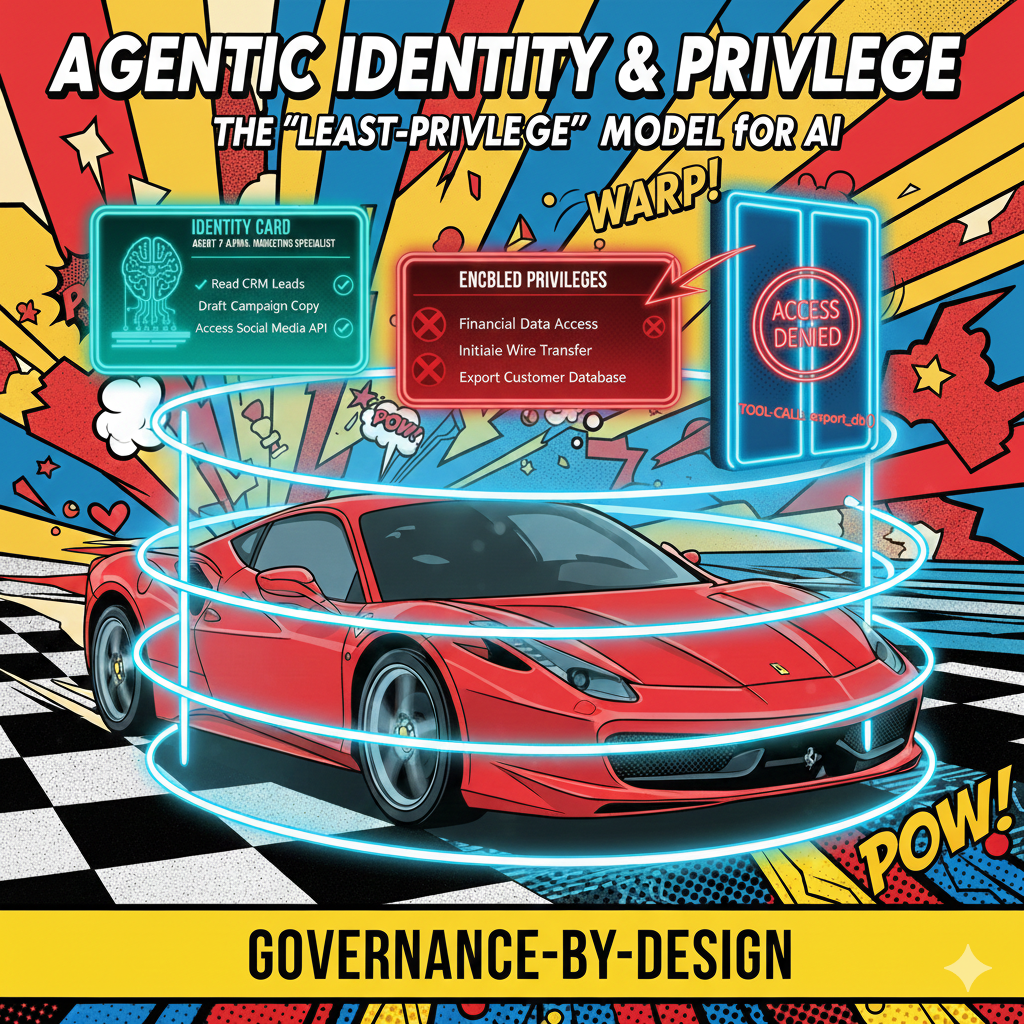

Agentic Identity and Privilege: Why Your AI Needs an Employee ID and a Security Clearance

In most current AI deployments, "the AI" is a monolithic entity with a single API key. If it hallucinates a reason to access your payroll database, there is no "Internal Affairs" to stop it. We treat AI as a tool with a single identity, a single set of permissions, and a single point of failure. But here is the uncomfortable truth: your AI systems need to operate more like employees than instruments. The gap between how we deploy AI today and how we should deploy it is a chasm of organizational risk.
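Here is a minimal Python sketch of the employee analogy. It assumes that each agent carries its own identity record with an explicit set of permission scopes, and that every sensitive tool checks the caller's scopes before it runs. `AgentIdentity`, `authorize`, `read_payroll`, and the scope strings are hypothetical names for illustration. They are not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical per-agent identity: who the agent is and what it may touch."""
    agent_id: str
    role: str
    scopes: frozenset = field(default_factory=frozenset)  # e.g. {"crm:read"}


class PermissionDenied(Exception):
    pass


def authorize(agent: AgentIdentity, required_scope: str) -> None:
    """Raise unless this agent's own identity grants the scope (least privilege)."""
    if required_scope not in agent.scopes:
        raise PermissionDenied(
            f"{agent.agent_id} ({agent.role}) lacks scope {required_scope!r}"
        )


def read_payroll(agent: AgentIdentity) -> str:
    # The tool checks the caller's identity, not a shared API key.
    authorize(agent, "payroll:read")
    return "payroll rows..."


support_bot = AgentIdentity("agent-042", "customer-support", frozenset({"crm:read", "tickets:write"}))
hr_bot = AgentIdentity("agent-007", "hr-assistant", frozenset({"payroll:read"}))

print(read_payroll(hr_bot))
try:
    read_payroll(support_bot)
except PermissionDenied as err:
    print("blocked:", err)
```

The design contains failure. A support agent might talk itself into needing payroll data, but it still cannot get it, because the check is tied to the agent's identity rather than to a key shared by every agent.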

The Semantic Interceptor: Controlling Intent, Not Just Words

Traditional keyword filters operate on tokens that have already been generated. By the time an agent produces toxic output and the filter catches it, the model has already burned compute cycles, and any actions taken along the way may already have altered system state. The moment is lost: the user may already have seen something problematic, or a downstream process may already have absorbed bad data.
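One way to picture interception at the level of intent is to check a structured, proposed action before it executes, instead of scanning generated text afterward. The sketch below assumes the agent emits its next step as a `ProposedAction` object. `classify_intent` is a deliberately crude, hypothetical stand-in for what would realistically be a model-based classifier, and the intent labels are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ProposedAction:
    """A structured step the agent wants to take, captured before anything executes."""
    tool: str
    arguments: dict = field(default_factory=dict)


def classify_intent(action: ProposedAction) -> str:
    """Toy stand-in for a semantic intent classifier (assumption: a real one would be a model)."""
    if action.tool in {"delete_records", "drop_table"}:
        return "destructive_write"
    if action.tool.startswith("export_"):
        return "data_exfiltration"
    return "routine"


BLOCKED_INTENTS = {"destructive_write", "data_exfiltration"}


def intercept(action: ProposedAction, execute: Callable[[ProposedAction], str]) -> str:
    """Judge what the action is for before it runs, rather than filtering text afterward."""
    intent = classify_intent(action)
    if intent in BLOCKED_INTENTS:
        return f"intercepted: {action.tool} classified as {intent}"
    return execute(action)


executed = []


def run(action: ProposedAction) -> str:
    executed.append(action.tool)  # stands in for the real side effect
    return f"ran {action.tool}"


print(intercept(ProposedAction("lookup_order", {"order_id": 17}), run))
print(intercept(ProposedAction("export_customers", {"format": "csv"}), run))
print(executed)  # only the routine action ever executed
```

The point of the sketch is where the check sits, not how good the classifier is. The blocked export never reaches `run`, so there is no side effect to roll back.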

From "Filters" to "Foundations": Why the Post-Hoc Guardrail Is Failing the Agentic Era

Most enterprises govern AI the way one might try to catch smoke with a net. They wait for a hallucination, a misaligned response, or a brand violation, then they write a new rule. They audit the logs after the damage is done. They implement a keyword filter. They add a content policy. But they have never asked the question that matters: at what point in the process should the guardrail actually kick in?

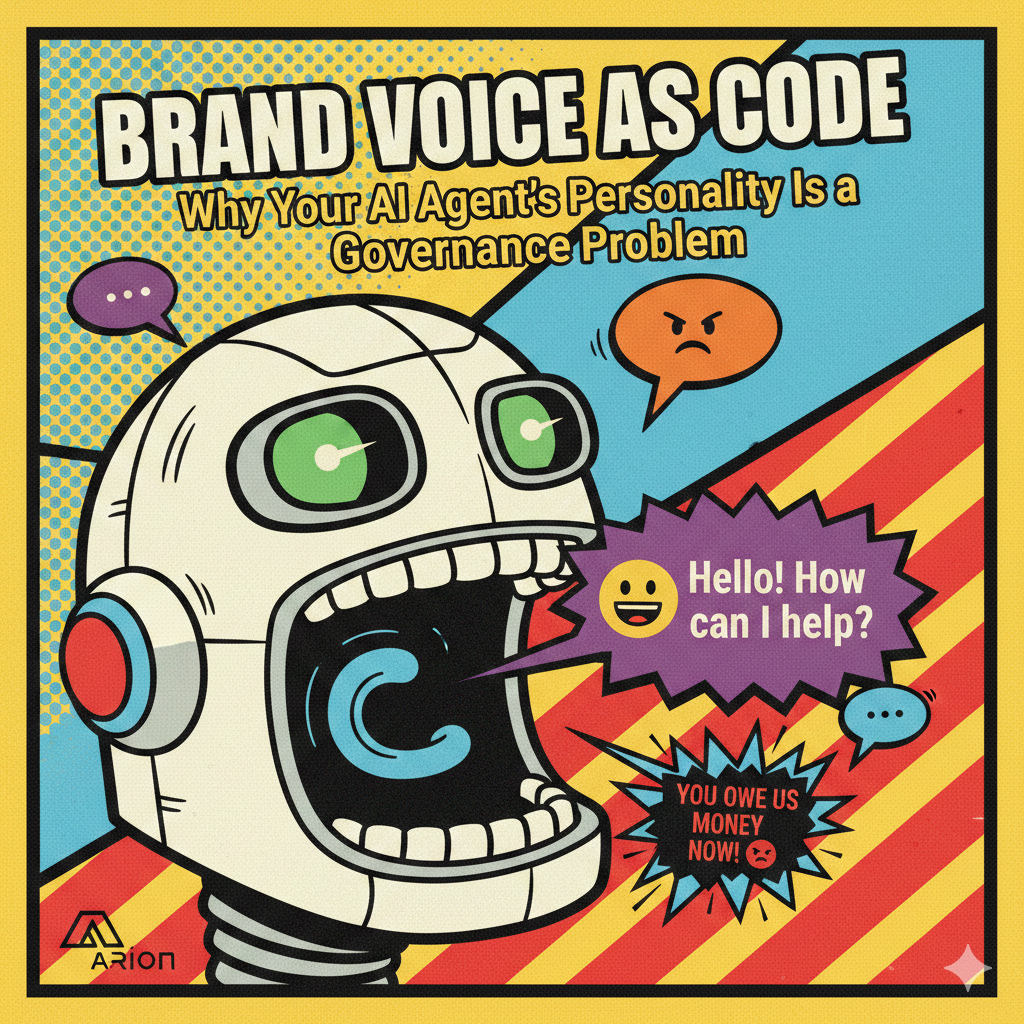

Brand Voice as Code: Why Your AI Agent's Personality Is a Governance Problem

This is the new frontier of enterprise risk. The biggest threat to your brand is no longer a data breach or a rogue employee on social media. It’s an AI agent that is technically correct but emotionally illiterate: one that follows every rule in the compliance handbook while violating every unwritten norm your brand has spent decades cultivating. The conversation around AI governance has focused almost entirely on data security, model accuracy, and regulatory compliance. Those concerns are real and important. But they miss a critical dimension: personality. How your AI agent speaks, empathizes, calibrates tone, and navigates cultural nuance is not a "nice to have" layered on top of governance. It is governance.
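If personality is governance, it can be written down and tested like any other policy. This minimal sketch assumes a brand's voice rules can be captured in a versioned `VoiceProfile` and checked against draft replies. The field names are hypothetical. Simple substring checks are only a crude proxy here, and judging empathy or cultural nuance in practice would likely need a model-based evaluator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceProfile:
    """Brand voice expressed as reviewable, versioned data (hypothetical schema)."""
    version: str
    banned_phrases: tuple = ()
    empathy_markers: tuple = ("sorry", "understand", "apologize")
    max_exclamations: int = 1


def review_reply(reply: str, customer_upset: bool, profile: VoiceProfile) -> list:
    """Return a list of voice violations; an empty list means the draft passes."""
    text = reply.lower()
    issues = []
    for phrase in profile.banned_phrases:
        if phrase in text:
            issues.append(f"banned phrase: {phrase!r}")
    # Crude proxy: an upset customer should get at least one acknowledgement word.
    if customer_upset and not any(marker in text for marker in profile.empathy_markers):
        issues.append("no acknowledgement of the customer's frustration")
    if reply.count("!") > profile.max_exclamations:
        issues.append("too many exclamation marks")
    return issues


profile = VoiceProfile(version="2026.1", banned_phrases=("per our policy", "calm down"))
draft = "Per our policy, refunds take 10 days!!"
print(review_reply(draft, customer_upset=True, profile=profile))
```

Because the profile is data with a version string, tone changes can be reviewed, diffed, and rolled back the same way code is.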

The "Agent Orchestrator": The New Middle Manager Role of 2026

The dominant narrative around AI in the enterprise has been one of subtraction: smaller headcounts, leaner teams, entire departments rendered obsolete. It makes for compelling headlines, but it misses the point. The real story unfolding in 2026 is far more interesting than simple displacement. It is a story of structural evolution, of org charts being redrawn not because roles are vanishing, but because entirely new ones are emerging to meet demands that didn't exist two years ago.

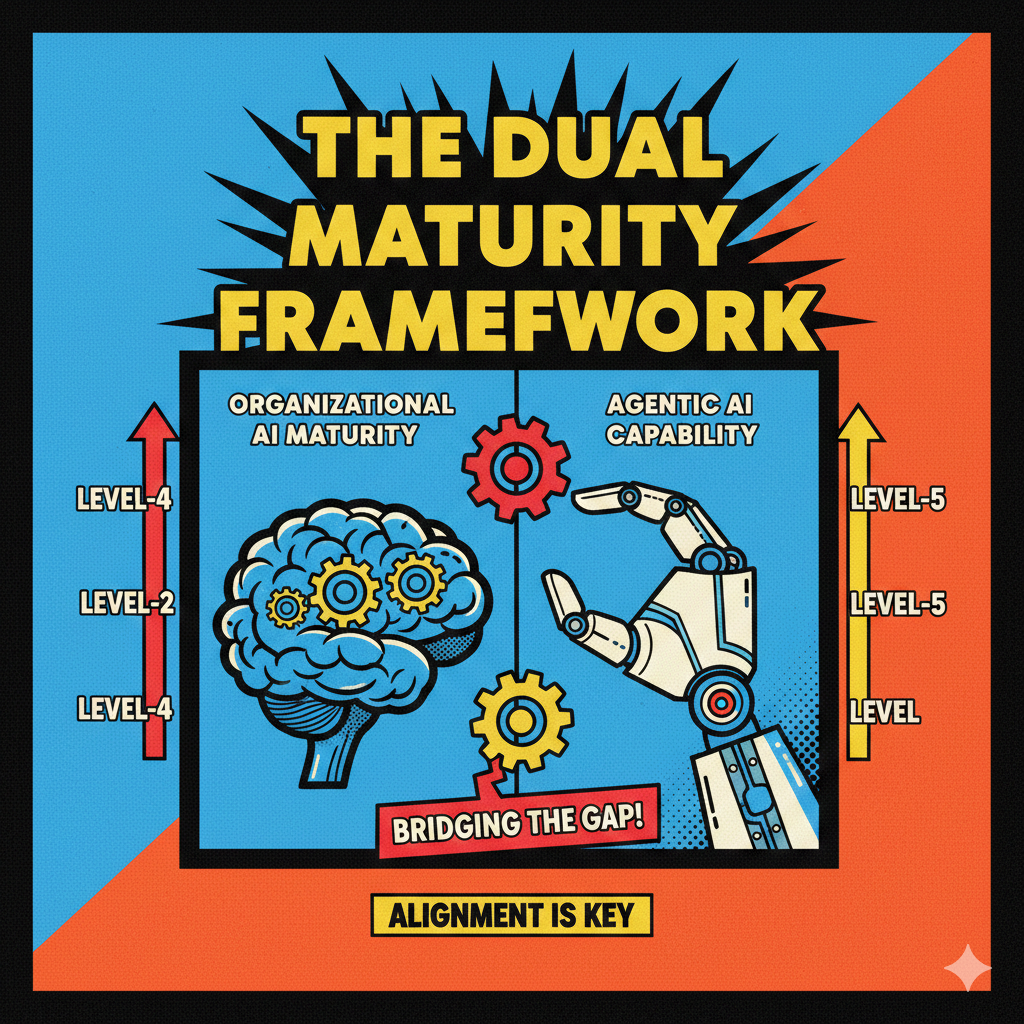

The Dual Maturity Framework: Bridging the Gap Between Organizational Readiness and AI Autonomy

The conversation around enterprise AI has shifted. For several years, the focus was on generative AI: systems that could summarize documents, draft emails, write code, and answer questions when prompted. These tools delivered real value, but they shared a common limitation. They waited for a human to ask before they did anything. The emerging generation of agentic AI changes that equation entirely. Agentic systems do not just answer; they execute. They plan multi-step workflows, make decisions within defined parameters, coordinate with other systems, and carry out complex tasks with minimal or no human intervention.
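This excerpt doesn't spell out the framework's specifics. The following is therefore a hypothetical illustration of the core idea in the title: the autonomy an agentic system is granted should not outrun the organization's readiness to govern it. The four-level scales and the one-to-one mapping in `max_autonomy` are assumptions chosen for readability.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels for an AI system."""
    ASSIST = 1        # drafts and answers; a human acts
    RECOMMEND = 2     # proposes actions; a human approves each one
    ACT_BOUNDED = 3   # executes within defined parameters; humans review exceptions
    ACT_OPEN = 4      # plans and executes multi-step work with minimal oversight


class Readiness(IntEnum):
    """Hypothetical organizational readiness levels."""
    EXPLORING = 1
    PILOTING = 2
    OPERATIONALIZING = 3
    SCALED = 4


def max_autonomy(readiness: Readiness) -> Autonomy:
    # Assumption: grant no more autonomy than the organization can currently govern.
    return Autonomy(int(readiness))


def can_deploy(requested: Autonomy, readiness: Readiness) -> bool:
    return requested <= max_autonomy(readiness)


print(can_deploy(Autonomy.ACT_BOUNDED, Readiness.PILOTING))  # False
print(can_deploy(Autonomy.RECOMMEND, Readiness.PILOTING))    # True
```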

The Agentic Service Bus: A New Architecture for Inter-Agent Communication

As enterprises deploy more AI agents across their operations, a critical infrastructure challenge is emerging: how should these agents communicate with each other? The answer may reshape enterprise architecture as profoundly as the enterprise service bus (ESB) did two decades ago.
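Here is a minimal in-process sketch of what a bus for agents might look like. It assumes agents communicate by publishing envelopes to topics rather than calling each other directly. `AgentBus`, `Envelope`, and the topic and agent names are hypothetical, and a production system would sit on a durable message broker rather than a Python dictionary.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Envelope:
    """A message between agents: who sent it, about what, and an ID to trace it by."""
    topic: str
    sender: str
    payload: dict
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))


class AgentBus:
    """In-process sketch of a topic-based bus; agents never call each other directly."""

    def __init__(self) -> None:
        self._subscribers: dict = defaultdict(list)
        self.audit_log: list = []

    def subscribe(self, topic: str, handler: Callable[[Envelope], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, message: Envelope) -> int:
        """Deliver to every subscriber of the topic and return how many received it."""
        self.audit_log.append(message)  # every exchange is recorded in one place
        handlers = self._subscribers.get(message.topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = AgentBus()
received = []
bus.subscribe("inventory.low", lambda m: received.append(("procurement", m.payload["sku"])))
bus.subscribe("inventory.low", lambda m: received.append(("forecasting", m.payload["sku"])))
delivered = bus.publish(Envelope("inventory.low", sender="inventory-agent", payload={"sku": "A-100"}))
print(delivered, received)
```

As with a classic service bus, the main gain is decoupling. The inventory agent doesn't need to know who is listening, and every exchange lands in a single audit log.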

The Death of the "Generalist" Dashboard: Why 2026 Belongs to Vertical Agentic Workflows

We are witnessing a pivot in enterprise computing that will reshape how organizations operate. The application layer, as we've known it, is evaporating. We are moving from a world where humans log in to work, to a world where agents log out to execute. The dashboard is no longer a destination. It is a legacy artifact.

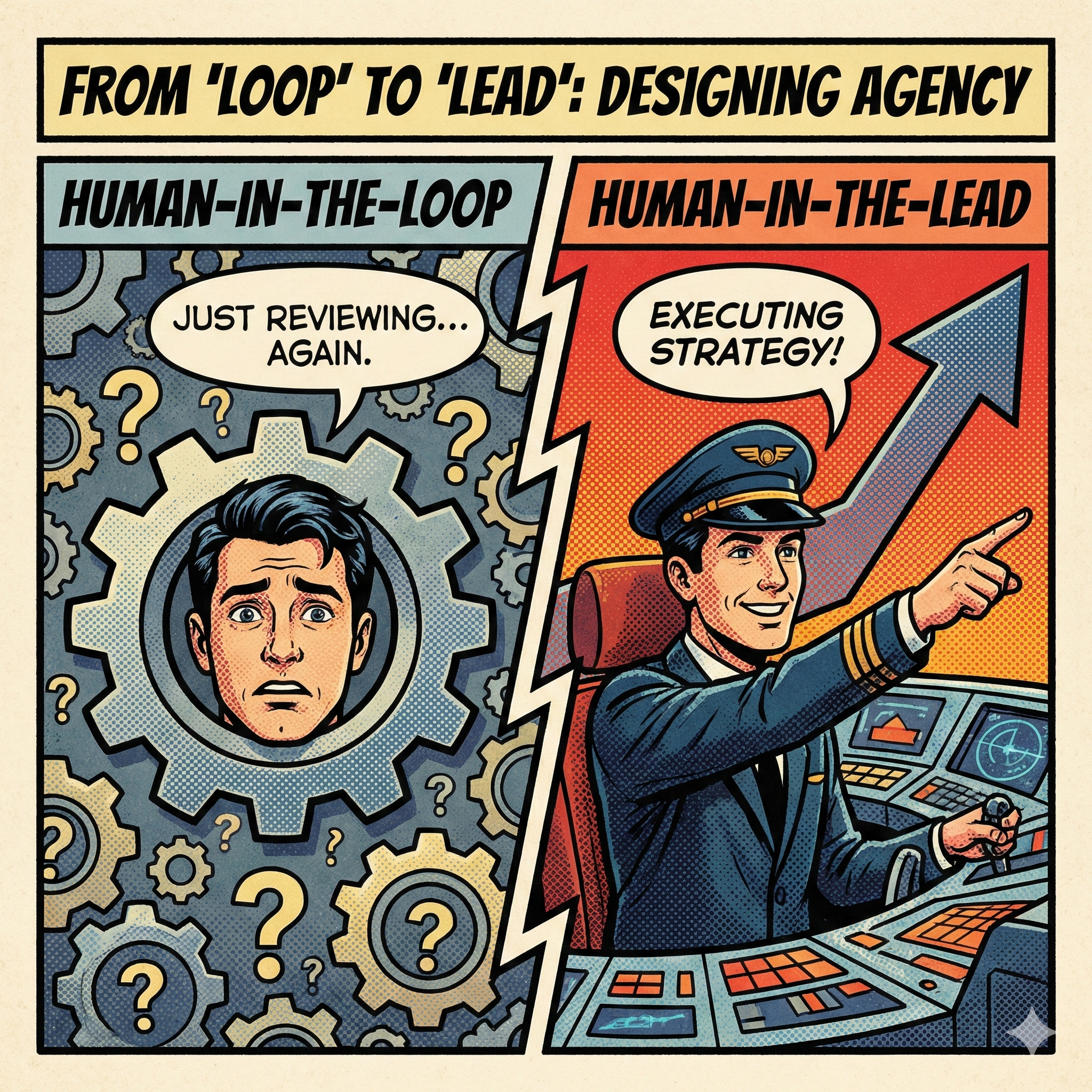

From "Human-in-the-Loop" to "Human-in-the-Lead": Designing Agency for Trust, Not Just Automation

If we want to scale agentic AI, we need a different model. We must stop treating humans as safety nets reacting to AI outputs and start treating them as pilots directing AI capabilities. This is the shift from "Human-in-the-Loop" to "Human-in-the-Lead."

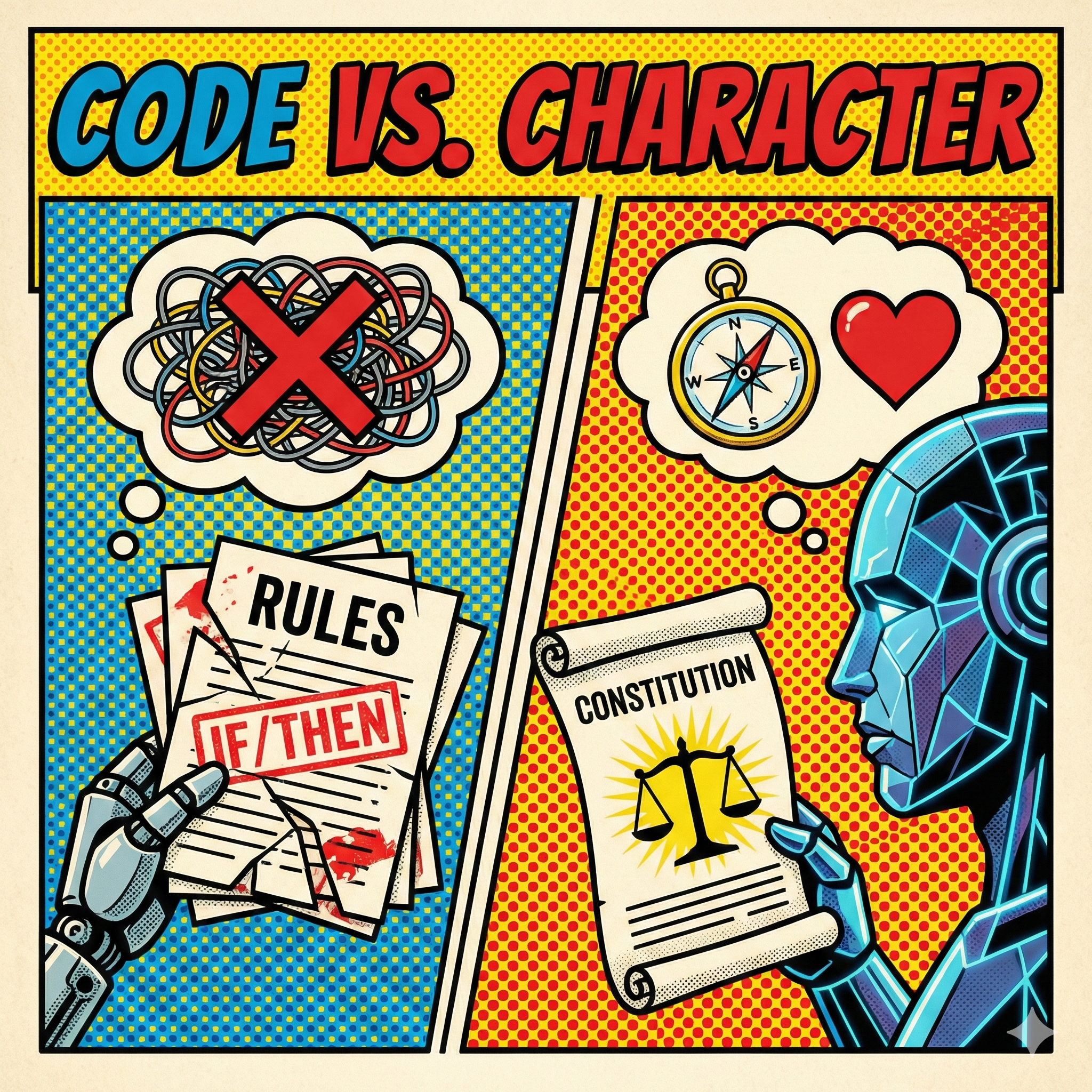

Code vs. Character: How Anthropic's Constitution Teaches Claude to "Think" Ethically

The challenge of AI safety often feels like playing Whac-A-Mole. A language model says something offensive, so engineers add a rule against it. Then it finds a workaround. So they add another rule. And another. Soon you have thousands of specific prohibitions. This approach treats AI safety like debugging software. Anthropic has taken a different path with Claude. Instead of programming an ever-expanding checklist of "dos and don'ts," they've given their AI something closer to a moral framework: a Constitution.

Is Your Organization Ready for Agentic AI? Take This Free Assessment to Find Out

Most executives today face the same challenge: they know agentic AI will transform how work gets done, but they don't know if their organization is ready to make the leap from experimentation to production deployment.

The gap between running a successful pilot and deploying autonomous agents at scale is larger than most leaders realize. It's not just about having good data or smart developers. Organizations that successfully deploy agentic AI have built readiness across six critical dimensions, from technical infrastructure to governance frameworks to team capabilities.

Beyond Trial and Error: How Internal RL is Redefining AI Agency

Traditionally, AI agents have learned the same way toddlers do: by taking actions, observing what happens, and gradually improving through countless iterations. A robot learning to grasp objects drops them hundreds of times. An AI learning to play chess loses thousands of games. This external trial-and-error approach has produced remarkable results, but it comes at a cost. Every mistake requires real-world interaction, and that interaction is paid for in computational resources, physical wear on hardware, or, in some cases, actual safety risks.
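The "internal RL" method itself isn't spelled out here. As a stand-in, this sketch uses Dyna-Q, a classic model-based reinforcement learning algorithm that illustrates the same trade-off. Each real interaction is recorded in a learned internal model, and the agent then rehearses many simulated updates against that model instead of paying for more real-world trials. The corridor environment and all parameter values are illustrative assumptions.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = left, 1 = right


def env_step(state: int, action: int):
    """The 'real world': a 5-cell corridor with a reward only at the right end."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def dyna_q(episodes=20, planning_steps=20, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    model = {}  # learned internal model: (state, action) -> (reward, next_state, done)
    real_steps = 0

    def update(s, a, r, s2, done):
        target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (target - q[(s, a)])

    def choose(s):
        if rng.random() < epsilon:
            return rng.choice(ACTIONS)
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])  # random tie-break

    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = choose(s)
            s2, r, done = env_step(s, a)  # costly external interaction
            real_steps += 1
            update(s, a, r, s2, done)
            model[(s, a)] = (r, s2, done)
            for _ in range(planning_steps):  # cheap internal rehearsal
                ps, pa = rng.choice(list(model))
                pr, ps2, pdone = model[(ps, pa)]
                update(ps, pa, pr, ps2, pdone)
            s = s2
    return q, real_steps


q, steps = dyna_q()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy, steps)  # learned policy moves right in every state
```

Raising `planning_steps` typically lets the agent settle on a good policy with fewer calls to `env_step`. That ratio of internal rehearsal to external interaction is the lever this family of methods pulls.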

Depth Over Breadth: Why General AI is Stalling and Vertical AI is Booming

The "Generalist Era" of AI (ChatGPT, generic copilots) is ending. 2025 marks the pivot to the "Specialist Era" (Vertical AI), where value is captured not by broad knowledge, but by deep, domain-specific execution. The $3.5 billion spending figure is the canary in the coal mine, signaling a massive capital flight toward tools that solve expensive, specific problems rather than general ones.

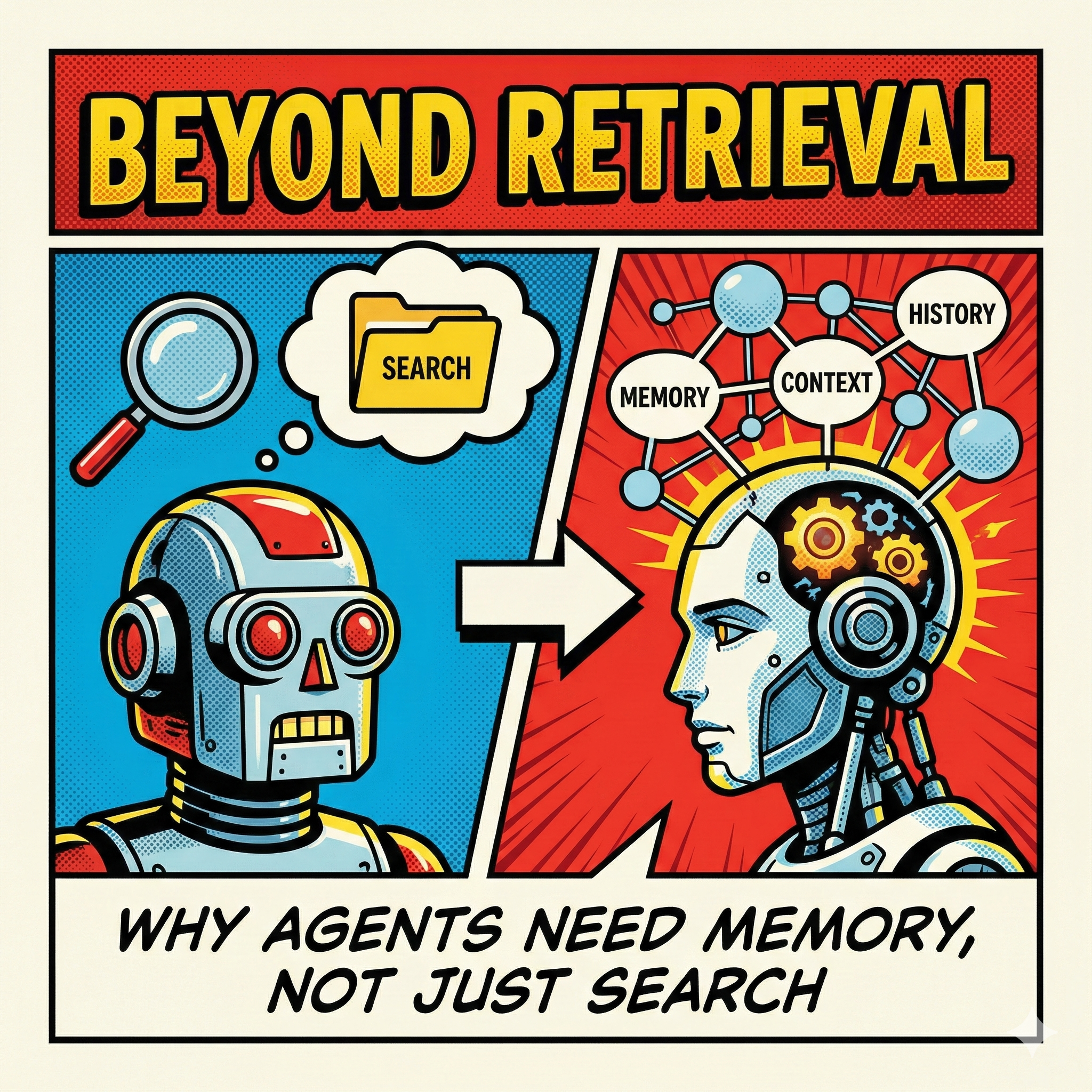

Beyond Retrieval: Why Agents Need Memory, Not Just Search

If you're building AI agents right now, you've probably noticed something frustrating. Your agent handles a complex task brilliantly, then five minutes later makes the exact same mistake it just recovered from. It's like working with someone who has no short-term memory.

This isn't a bug in your implementation. It's a design limitation. Most organizations are using Retrieval-Augmented Generation (RAG) to power their agents. RAG works great for what it was designed to do: answer questions by finding relevant documents. But agents don't just answer questions. They take action, encounter obstacles, adapt their approach, and learn from failure. That requires a different kind of intelligence.
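This sketch shows the difference between searching documents and remembering experience. It is a minimal form of episodic memory. It assumes the agent logs each attempted action and its outcome, then consults that log before acting again. `AgentMemory`, `choose_action`, and the example task and action names are hypothetical. Exact string matching on the task is a simplification; a real system would likely match similar situations, not only identical ones.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Episode:
    task: str
    action: str
    succeeded: bool
    lesson: str


class AgentMemory:
    """Hypothetical episodic memory: what the agent tried, and how it turned out."""

    def __init__(self) -> None:
        self.episodes: list = []

    def record(self, task: str, action: str, succeeded: bool, lesson: str = "") -> None:
        self.episodes.append(Episode(task, action, succeeded, lesson))

    def last_outcome(self, task: str, action: str) -> Optional[Episode]:
        """Most recent attempt of this action on this task, or None if never tried."""
        for ep in reversed(self.episodes):
            if ep.task == task and ep.action == action:
                return ep
        return None


def choose_action(task: str, candidates: list, memory: AgentMemory) -> str:
    # Skip any action whose latest attempt on this task failed, which a stateless lookup can't do.
    for action in candidates:
        last = memory.last_outcome(task, action)
        if last is None or last.succeeded:
            return action
    return "escalate_to_human"


memory = AgentMemory()
memory.record("refund #881", "call_payments_api_v1", False, "v1 endpoint is deprecated; use v2")
print(choose_action("refund #881", ["call_payments_api_v1", "call_payments_api_v2"], memory))
```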

The Missing Layer: Why Enterprise Agents Need a "System of Agency"

We are witnessing a critical transition in artificial intelligence. The move from Generative AI (which creates content) to Agentic AI (which executes tasks) changes everything about how organizations must approach their AI infrastructure.

Most organizations are attempting to build autonomous agents on top of their existing "Systems of Record": ERPs, CRMs, and legacy databases designed decades ago. These systems excel at storing state: inventory levels, customer records, transaction histories. But they were never designed to capture something equally critical: the reasoning behind decisions.
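Here is a sketch of what capturing "the reasoning behind decisions" could look like: a decision record stored alongside the system of record rather than inside it. The `DecisionRecord` fields and the sample values are hypothetical illustrations, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One entry in a hypothetical 'system of agency': the why, next to the what."""
    agent_id: str
    action: str
    inputs: dict                # the state the agent saw, pulled from systems of record
    rationale: str              # why it chose this action
    alternatives_rejected: list # what else it considered
    policy_refs: list           # which rules or approvals it relied on
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


record = DecisionRecord(
    agent_id="procurement-agent",
    action="reorder SKU A-100",
    inputs={"on_hand": 40, "reorder_point": 120},
    rationale="Stock is below the reorder point and supplier lead time is long.",
    alternatives_rejected=["expedite from secondary supplier"],
    policy_refs=["purchase-order limit policy"],
)
print(json.dumps(asdict(record), indent=2))
```

The ERP would still hold the new purchase order. The decision record holds why it exists, which is what an auditor or a downstream agent needs later.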

The State of Agentic AI in 2025: A Year-End Reality Check

After a full year of hype, deployment attempts, and reality checks, we can now see clearly what worked, what didn't, and what lessons matter for organizations making AI strategy decisions in 2026. This is a practical look at the technical breakthroughs that mattered, where enterprises actually deployed agents at scale, how multi-agent systems evolved from theory to practice, and the governance challenges that couldn't be ignored.

Enterprise AI Is a System, Not a Model

Many enterprise leaders are making a costly category error. They're confusing access to intelligence with operational AI.

The distinction matters because public chatbots and foundation models are optimized for one set of outcomes while enterprise AI requires something entirely different. ChatGPT, Claude, and Gemini excel at general reasoning, conversational fluency, and handling broad, non-contextual tasks. They're designed to answer questions, generate content, and provide insights across virtually any domain.

Enterprise AI operates in a different universe. It must execute inside real workflows, maintain accountability and governance at every step, and deliver repeatable business outcomes. The goal isn't to answer questions. It's to orchestrate work.

Conflict Resolution Playbook: How Agentic AI Systems Detect, Negotiate, and Resolve Disputes at Scale

When you deploy dozens or hundreds of AI agents across your organization, you're not just automating tasks. You're creating a digital workforce with its own internal politics, competing priorities, and inevitable disputes. The question isn't whether your agents will come into conflict. The question is whether you've designed a system that can resolve those conflicts without grinding to a halt or escalating to human intervention every time.
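Here is one possible resolution policy, sketched under explicit assumptions. Agents file `Claim`s on shared resources, and priorities are set by policy. Over-subscribed resources are detected automatically. A human is pulled in only when equal-priority agents cannot all be served, and lower-priority claims wait for that decision. The class, function, agent, and resource names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Claim:
    agent: str
    resource: str
    amount: int
    priority: int  # higher wins; how priorities are assigned is a policy decision (assumption)


def resolve(claims: list, capacity: dict) -> dict:
    """Allocate each resource by priority tier; escalate only unresolvable ties."""
    by_resource = defaultdict(list)
    for claim in claims:
        by_resource[claim.resource].append(claim)

    outcome = {}
    for resource, group in by_resource.items():
        remaining = capacity.get(resource, 0)
        waiting = False  # once a tie is escalated, lower tiers wait for the human decision
        for level in sorted({c.priority for c in group}, reverse=True):
            tier = [c for c in group if c.priority == level]
            need = sum(c.amount for c in tier)
            if waiting:
                status = "held"
            elif need <= remaining:
                status = "granted"
                remaining -= need
            elif len(tier) == 1:
                status = "denied"  # a lone claim that simply exceeds what is left
            else:
                status = "escalated"  # peers conflict and no rule separates them
                waiting = True
            for c in tier:
                outcome[(c.agent, resource)] = status
    return outcome


claims = [
    Claim("fulfillment", "warehouse_slots", 60, priority=3),
    Claim("returns", "warehouse_slots", 30, priority=2),
    Claim("promo", "warehouse_slots", 30, priority=2),
    Claim("analytics", "gpu_hours", 5, priority=1),
]
result = resolve(claims, {"warehouse_slots": 100, "gpu_hours": 8})
print(result)
```

Escalation is the exception path. In the sample run, only the tie on warehouse slots reaches a human. The higher-priority fulfillment claim and the GPU claim resolve on their own.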

Beyond Bottlenecks: Dynamic Governance for AI Systems

As we move from single Large Language Models to Multi-Agent Systems (MAS), we're discovering that intelligence alone doesn't scale. The real challenge is coordination, orchestration, and governance. Imagine you've deployed 100 autonomous agents into your enterprise. One specializes in customer data analysis. Another handles inventory optimization. A third manages supplier communications. Each agent is competent at its job. But when a supply chain disruption hits, who decides which agents act first? When two agents need the same resource, who arbitrates? When market conditions shift, how do they reorganize without human intervention?
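One way to read "dynamic governance" is that the rules deciding which agent acts first are themselves data that change with conditions. This minimal sketch uses the three agents from the example above. It assumes a hypothetical per-mode priority table, and the event names and the `Governor` class are illustrative.

```python
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"
    SUPPLY_DISRUPTION = "supply_disruption"


# Hypothetical policy table: agent priorities per operating mode (higher acts first).
PRIORITY_POLICY = {
    Mode.NORMAL: {"customer-data": 3, "inventory": 2, "supplier-comms": 1},
    Mode.SUPPLY_DISRUPTION: {"supplier-comms": 3, "inventory": 2, "customer-data": 1},
}


class Governor:
    """Re-ranks agents when conditions change, instead of hard-coding one order."""

    def __init__(self) -> None:
        self.mode = Mode.NORMAL

    def on_event(self, event: str) -> None:
        if event == "supplier_outage":
            self.mode = Mode.SUPPLY_DISRUPTION
        elif event == "supplier_recovered":
            self.mode = Mode.NORMAL

    def execution_order(self) -> list:
        ranks = PRIORITY_POLICY[self.mode]
        return sorted(ranks, key=ranks.get, reverse=True)


gov = Governor()
print(gov.execution_order())  # ['customer-data', 'inventory', 'supplier-comms']
gov.on_event("supplier_outage")
print(gov.execution_order())  # ['supplier-comms', 'inventory', 'customer-data']
```

Because the policy lives in a table rather than inside each agent, reorganizing under a disruption becomes a single state change instead of a round of human re-coordination.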

The Model Context Protocol: Understanding Its Limits and Planning Your Agent Stack

The Model Context Protocol (MCP) received significant fanfare as a standardized way for AI agents to access tools and external systems. Anthropic's launch generated enthusiasm in the AI community, particularly among developers building local and experimental agentic systems. But as organizations move from proof-of-concept to production deployments, MCP's limitations are becoming apparent.

This isn't a story about MCP "failing" or being replaced overnight. Rather, MCP is settling into its actual role: one integration pattern among many, useful in specific contexts but insufficient as the primary fabric for enterprise agentic systems. Understanding where MCP fits (and where it doesn't) is essential for anyone building production-grade agent infrastructure.
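This sketch makes "one integration pattern among many" concrete. It shows an agent stack that routes each tool to an integration behind a common interface. The MCP side builds a JSON-RPC 2.0 `tools/call` request, which is the method the MCP specification defines for invoking a tool. A real MCP client also performs an `initialize` handshake and manages a transport such as stdio; that connection setup is omitted here. `RestTransport`, the routing table, the tool names, and the URL are hypothetical.

```python
import json
from typing import Protocol


class ToolTransport(Protocol):
    def build_request(self, tool: str, arguments: dict) -> str: ...


class McpTransport:
    """Frames a call as an MCP `tools/call` JSON-RPC 2.0 request (session setup omitted)."""

    def __init__(self) -> None:
        self._next_id = 1

    def build_request(self, tool: str, arguments: dict) -> str:
        msg = {
            "jsonrpc": "2.0",
            "id": self._next_id,
            "method": "tools/call",
            "params": {"name": tool, "arguments": arguments},
        }
        self._next_id += 1
        return json.dumps(msg)


class RestTransport:
    """Hypothetical direct HTTP integration for a system that exposes its own API."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url

    def build_request(self, tool: str, arguments: dict) -> str:
        return f"POST {self.base_url}/{tool} {json.dumps(arguments)}"


# Hypothetical routing table: the agent stack picks an integration per tool.
ROUTES: dict = {
    "search_local_files": McpTransport(),
    "create_invoice": RestTransport("https://erp.example.internal/api"),
}


def call_tool(tool: str, arguments: dict) -> str:
    transport: ToolTransport = ROUTES[tool]
    return transport.build_request(tool, arguments)


print(call_tool("search_local_files", {"query": "Q3 report"}))
print(call_tool("create_invoice", {"amount": 100}))
```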