From Retrieval to Reasoning: Building Self-Correcting AI with Multi-Agent ReRAG

Imagine asking an AI system a complex question about your company's financial performance, only to receive an answer that sounds authoritative but contains subtle errors or misses critical context. This scenario plays out countless times across organizations using today's RAG (Retrieval-Augmented Generation) systems. While these systems excel at finding and synthesizing information, they often fall short when complex reasoning, multiple perspectives, or iterative refinement are needed.

RAG systems combine the power of large language models with external knowledge retrieval, allowing AI to ground responses in relevant documents and data. However, current implementations typically follow a simple pattern: retrieve once, generate once, and deliver the result. This approach works well for straightforward questions but struggles with nuanced reasoning tasks that require deeper analysis, cross-referencing multiple sources, or identifying potential inconsistencies.

Enter Multi-Agent Reflective RAG (ReRAG), a design that enhances traditional RAG with reflection capabilities and specialized agents working in concert. By incorporating self-evaluation, peer review, and iterative refinement, ReRAG systems can catch errors, improve reasoning quality, and provide more reliable outputs for complex queries.

In this post, we'll explore how ReRAG works, examine its multi-agent architecture, discuss practical applications, and consider the challenges and opportunities this approach brings to AI-powered information systems.

The Evolution of RAG Systems

Standard RAG: The Foundation

Traditional RAG systems follow a straightforward process: given a query, retrieve relevant documents, then generate a response using both the query and retrieved context. This approach provides significant benefits over pure language model generation by grounding responses in factual information and expanding the available context beyond the model's training data.
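To make the single-pass pattern concrete, here is a minimal sketch. The word-overlap retriever, the prompt layout, and the `fake_llm` stub are all assumptions made for illustration; a real system would use an embedding index and an actual model call.

```python
import re
from typing import Callable, List


def tokenize(text: str) -> set:
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and keep the top k."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def standard_rag(query: str, corpus: List[str], generate: Callable[[str], str]) -> str:
    """Retrieve once, generate once, and return the result unchanged."""
    context = "\n".join(retrieve(query, corpus))
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    return generate(prompt)


corpus = [
    "Q3 revenue grew 12 percent year over year.",
    "The office moved to Berlin in 2021.",
    "Q3 operating costs rose due to hiring.",
]
calls: List[str] = []


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call; it only records the prompt.
    calls.append(prompt)
    return "stub answer"


answer = standard_rag("How did Q3 revenue change?", corpus, fake_llm)
```

Note that `generate` is called exactly once and nothing inspects its output, which is the property the rest of this post sets out to change.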

However, standard RAG has notable limitations. The retrieval process often captures documents based on surface-level similarity rather than deeper semantic relevance. The generation step happens in a single pass, providing no opportunity to reconsider or refine the response. When the initial retrieval misses key information or the reasoning contains flaws, the system has no mechanism for self-correction.

Chain-of-Thought RAG: Adding Structure

Chain-of-Thought RAG improved upon the basic approach by breaking down complex queries into sequential reasoning steps, with intermediate retrieval operations as needed. This method produces more transparent reasoning chains and can handle multi-step problems more effectively.

Chain-of-Thought RAG, though, still lacks a critical capability: the ability to step back, evaluate the quality of its reasoning, and make corrections. Real-world problem-solving often requires iteration, reconsideration, and refinement, which neither standard nor Chain-of-Thought RAG can perform.

The Need for Deeper Understanding

As organizations deploy RAG systems for increasingly complex tasks—from legal document analysis to technical troubleshooting—the limitations of single-pass reasoning become more apparent. We need systems that can evaluate their own outputs, identify potential issues, and engage in iterative improvement to produce more reliable and comprehensive responses.

What Is Reflective RAG (ReRAG)?

Reflective RAG enhances traditional RAG architectures with a crucial capability: reflection. This means the system can evaluate its own reasoning, identify potential weaknesses or gaps, and iterate to improve the quality of its output before presenting a final response.

The key innovation lies in the reflection loop. Instead of generating a response and immediately returning it, ReRAG systems engage in a process of self-evaluation and refinement. The system might ask itself: "Is this reasoning sound? Have I considered alternative perspectives? Are there contradictions in my sources that I need to address?"

This reflective capability transforms RAG from a one-shot generation process into an iterative reasoning system. Like a human researcher who reviews their work, considers counterarguments, and refines their conclusions, ReRAG systems can engage in multiple rounds of analysis before settling on a final answer.
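One way to picture the reflection loop is as a draft, critique, and revise cycle with a round limit. The sketch below is only an illustration: the function names, the `max_rounds` parameter, and the toy critique rules (an answer needs a figure and a source) are assumptions, and in practice each callable would wrap a model call.

```python
from typing import Callable, List, Tuple


def reflect_and_refine(
    query: str,
    draft: Callable[[str], str],
    critique: Callable[[str, str], List[str]],
    revise: Callable[[str, str, List[str]], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Draft an answer, then critique and revise until no issues remain.

    Returns the final answer and the number of revisions applied. If the
    round limit is hit, the last revision is returned without re-checking.
    """
    answer = draft(query)
    for revisions_so_far in range(max_rounds):
        issues = critique(query, answer)
        if not issues:
            return answer, revisions_so_far
        answer = revise(query, answer, issues)
    return answer, max_rounds


def toy_draft(query: str) -> str:
    return "Revenue grew."


def toy_critique(query: str, answer: str) -> List[str]:
    issues = []
    if not any(ch.isdigit() for ch in answer):
        issues.append("no figure cited")
    if "source:" not in answer:
        issues.append("no source cited")
    return issues


def toy_revise(query: str, answer: str, issues: List[str]) -> str:
    # Fix only the first issue so the loop needs more than one round.
    if issues[0] == "no figure cited":
        return answer + " It rose 12 percent."
    return answer + " (source: Q3 report)"


final, rounds = reflect_and_refine(
    "How did revenue change?", toy_draft, toy_critique, toy_revise
)
```

With these toy rules the draft needs two revisions, one per issue, before the critique comes back empty.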

The contrast is striking. While standard RAG follows a linear path from query to retrieval to generation, and Chain-of-Thought RAG adds sequential reasoning steps, ReRAG introduces cycles of evaluation and improvement that can significantly enhance output quality.

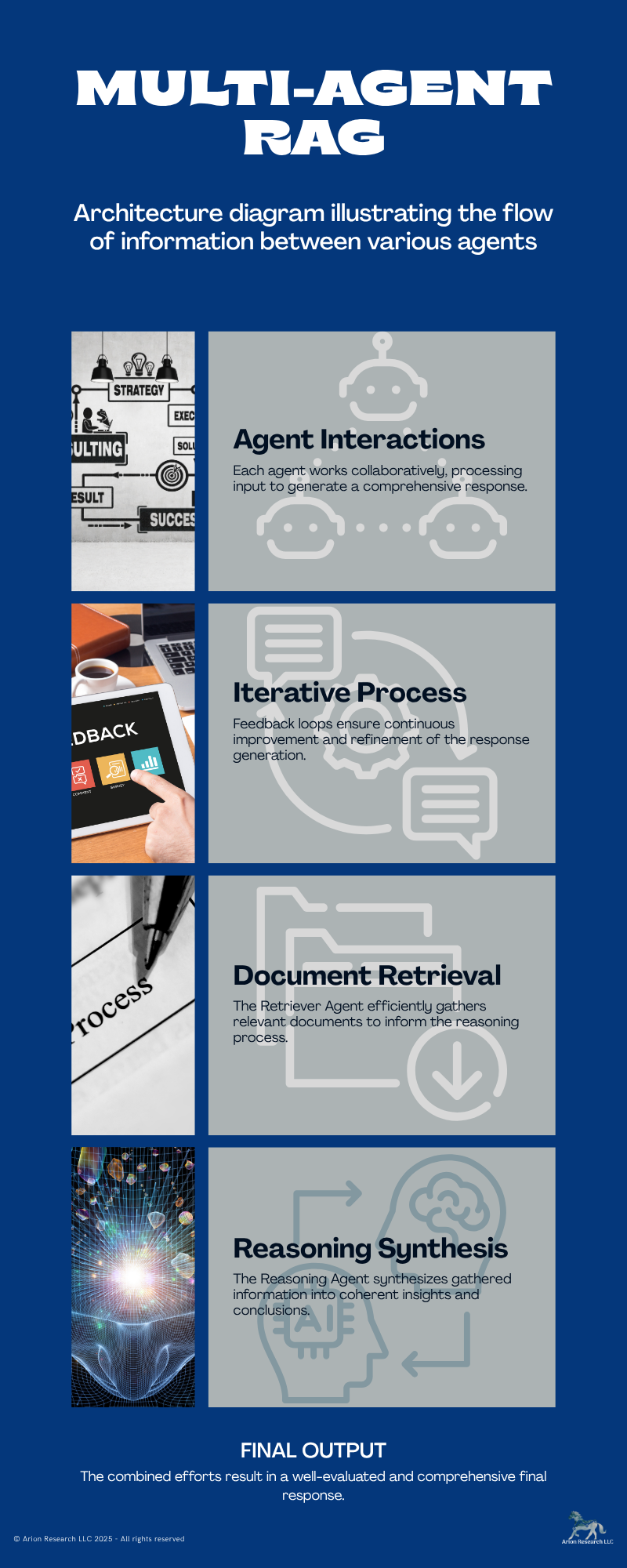

Introducing Multi-Agent ReRAG

The multi-agent approach takes ReRAG to the next level by distributing different aspects of the reasoning process across specialized agents, each optimized for a specific task. This division of labor allows for more sophisticated processing and gives the reflection mechanism a natural home: critique becomes the job of a dedicated agent rather than an afterthought of the generator.

The Agent Architecture

Retriever Agent: This specialist focuses on gathering diverse, relevant documents from available knowledge sources. Unlike simple keyword matching, an advanced Retriever Agent understands query context, identifies implicit information needs, and can perform multiple retrieval passes with different strategies to ensure comprehensive coverage.

Reasoning Agent: The Reasoning Agent synthesizes information from multiple sources, builds logical connections, and constructs coherent responses. This agent specializes in information integration, logical inference, and clear communication of complex ideas.

Critic Agent: Perhaps the most innovative component, the Critic Agent evaluates the reasoning and outputs from other agents. It checks for logical consistency, identifies potential hallucinations, spots gaps in reasoning, and suggests improvements. The Critic acts as an internal quality control system.

Planner Agent (optional): In complex scenarios, a Planner Agent can coordinate the overall workflow, deciding when to retrieve additional information, when to engage in more reasoning, and when the reflection process has reached a satisfactory conclusion.
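The four roles can be written down as narrow interfaces, which keeps each agent swappable. The sketch below uses Python's `typing.Protocol`; every method name, the `Critique` fields, and the `FixedBudgetPlanner` class are hypothetical choices for illustration, not a standard API.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


@dataclass
class Critique:
    """What the Critic hands back to the other agents."""
    approved: bool
    issues: List[str] = field(default_factory=list)


class Retriever(Protocol):
    def retrieve(self, query: str, hints: List[str]) -> List[str]: ...


class Reasoner(Protocol):
    def answer(self, query: str, documents: List[str], feedback: List[str]) -> str: ...


class Critic(Protocol):
    def review(self, query: str, documents: List[str], answer: str) -> Critique: ...


class Planner(Protocol):
    def should_continue(self, round_no: int, critique: Critique) -> bool: ...


class FixedBudgetPlanner:
    """Simplest possible planner: keep going until approved or out of rounds."""

    def __init__(self, max_rounds: int) -> None:
        self.max_rounds = max_rounds

    def should_continue(self, round_no: int, critique: Critique) -> bool:
        return (not critique.approved) and round_no < self.max_rounds


# Structural typing: FixedBudgetPlanner satisfies Planner without inheriting it.
planner: Planner = FixedBudgetPlanner(max_rounds=2)
```

Because the protocols are structural, a team could replace the planner or the critic with a different implementation without touching the other agents.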

The Reflection Mechanism in Action

The magic of Multi-Agent ReRAG happens in the interactions between agents. After the Reasoning Agent produces an initial response, the Critic Agent evaluates it for quality, consistency, and completeness. If issues are identified, the Critic provides specific feedback, which can trigger additional retrieval by the Retriever Agent or revised reasoning by the Reasoning Agent.

This creates a natural feedback loop where agents engage in both self-critique and peer review. The system iterates through multiple cycles of improvement before producing a final output, much like a research team reviewing and refining their work collaboratively.
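For feedback to trigger the right follow-up, it helps if the Critic's output is structured rather than free text. The following sketch assumes a small, hypothetical taxonomy of issue kinds and a routing rule that prefers new retrieval whenever evidence is missing, since rewording alone cannot supply facts that were never retrieved.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class IssueKind(Enum):
    MISSING_EVIDENCE = "missing_evidence"    # sources do not cover the question
    UNSUPPORTED_CLAIM = "unsupported_claim"  # possible hallucination
    LOGIC_GAP = "logic_gap"                  # a conclusion does not follow


class Action(Enum):
    RETRIEVE = "retrieve"  # send the Retriever Agent back out
    REVISE = "revise"      # ask the Reasoning Agent to rework the answer
    ACCEPT = "accept"      # nothing left to fix


@dataclass
class Issue:
    kind: IssueKind
    note: str


def route(issues: List[Issue]) -> Action:
    """Map the Critic's findings to the next step in the loop."""
    if not issues:
        return Action.ACCEPT
    if any(issue.kind is IssueKind.MISSING_EVIDENCE for issue in issues):
        return Action.RETRIEVE
    return Action.REVISE


next_step = route([
    Issue(IssueKind.LOGIC_GAP, "growth rate does not follow from the totals"),
    Issue(IssueKind.MISSING_EVIDENCE, "no document covers Q2"),
])
```

Here `next_step` is `Action.RETRIEVE`, because one of the two issues cannot be resolved from the documents already in hand.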

Architecture Overview

The typical flow follows this pattern:

1. Initial Query Processing: The Planner Agent analyzes the incoming query and determines the appropriate strategy

2. Document Retrieval: The Retriever Agent gathers relevant information using various retrieval strategies

3. Initial Reasoning: The Reasoning Agent synthesizes the information into a preliminary response

4. Critical Evaluation: The Critic Agent evaluates the response for accuracy, completeness, and logical consistency

5. Feedback Loop: If issues are identified, specific feedback is provided to trigger additional retrieval or refined reasoning

6. Iteration: Steps 2-5 repeat until the Critic Agent determines the response meets quality standards or an iteration limit is reached

7. Final Response: The refined, validated response is delivered to the user
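The seven steps above can be sketched end to end with toy agents. Everything here is an assumption made for illustration: the two-entry `KNOWLEDGE` store, the keyword-based critic, and the `max_rounds` cap. Real agents would be model-backed, but the control flow would look similar.

```python
from typing import Dict, List

# Hypothetical knowledge source keyed by topic.
KNOWLEDGE = {
    "revenue": "Q3 revenue grew 12 percent year over year.",
    "costs": "Q3 operating costs rose 8 percent due to hiring.",
}


def plan(query: str) -> List[str]:
    """Step 1: pick initial retrieval topics (deliberately too narrow)."""
    return ["revenue"]


def retrieve(topics: List[str]) -> List[str]:
    """Step 2: fetch one document per known topic."""
    return [KNOWLEDGE[t] for t in topics if t in KNOWLEDGE]


def reason(query: str, docs: List[str]) -> str:
    """Step 3: toy synthesis that simply joins the evidence."""
    return " ".join(docs)


def criticize(query: str, answer: str) -> List[str]:
    """Step 4: list topics the query mentions but the answer omits."""
    q, a = query.lower(), answer.lower()
    return [t for t in KNOWLEDGE if t in q and t not in a]


def rerag(query: str, max_rounds: int = 3) -> Dict[str, object]:
    topics = plan(query)
    trace: List[dict] = []
    answer = ""
    for round_no in range(1, max_rounds + 1):      # Step 6: iterate
        docs = retrieve(topics)
        answer = reason(query, docs)
        missing = criticize(query, answer)
        trace.append({"round": round_no, "missing": missing})
        if not missing:
            break
        topics = topics + missing                  # Step 5: feedback widens retrieval
    approved = bool(trace) and not trace[-1]["missing"]
    return {"answer": answer, "approved": approved, "trace": trace}  # Step 7


result = rerag("How did revenue and costs change in Q3?")
```

In this run the first round covers only revenue, the critic flags the missing costs topic, and the second round passes. The `trace` list records what the critic objected to in each round.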

Key Advantages of Multi-Agent ReRAG

Improved Accuracy Through Reflection

The most significant advantage of Multi-Agent ReRAG is its ability to catch and correct errors that would slip through traditional RAG systems. The Critic Agent serves as a quality gate, identifying potential hallucinations, logical inconsistencies, or incomplete reasoning before the response reaches the user. This results in more accurate and reliable outputs, especially for complex queries.

Deeper Reasoning Capabilities

The multi-stage synthesis process enables more sophisticated reasoning than single-pass systems. By allowing multiple rounds of analysis and refinement, ReRAG can tackle complex problems that require considering multiple angles, weighing trade-offs, or building upon intermediate conclusions.

Enhanced Transparency

The Critic Agent's evaluations can be surfaced to users, providing insight into the system's reasoning process and the specific improvements made during reflection. This transparency helps users understand how conclusions were reached and builds trust in the system's outputs.

Modularity and Specialization

The agent-based architecture offers significant advantages for system development and maintenance. Each agent can be optimized independently, allowing teams to improve retrieval algorithms, reasoning capabilities, or critical evaluation separately. This modularity also makes it easier to adapt the system for different domains or use cases.

Use Cases and Applications

Enterprise Search and Knowledge Management

Multi-Agent ReRAG excels in enterprise environments where employees need to find and synthesize information across large, complex document repositories. The system can analyze lengthy technical specifications, corporate policies, or research reports, with the Critic Agent ensuring that summaries accurately capture key points and don't miss critical details.

Technical Support and Documentation

Customer support scenarios benefit greatly from ReRAG's ability to understand complex technical queries and cross-reference multiple documentation sources. The reflection process helps ensure that solutions are complete and that potential complications or alternative approaches are considered.

Legal and Medical Analysis

High-stakes domains like legal research and medical consultation require exceptional accuracy and thorough analysis. Multi-Agent ReRAG's ability to consider multiple sources, identify potential contradictions, and provide reasoned analysis makes it valuable for these applications, though human oversight remains essential.

Research and Decision Support

Organizations making complex decisions can leverage ReRAG systems to analyze market data, research findings, and strategic documents. The multi-agent approach ensures that different perspectives are considered and that the analysis is thorough and well-reasoned.

Challenges and Considerations

Computational Overhead

The iterative nature of Multi-Agent ReRAG comes with increased computational costs. Multiple rounds of retrieval, reasoning, and evaluation require more processing time and resources than traditional RAG systems. Organizations must weigh these costs against the improved output quality when deciding whether to implement ReRAG.
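A back-of-the-envelope model helps frame that trade-off. The sketch below assumes one planner call up front and one reasoning call plus one critic call per round. The token counts are made-up illustrative numbers, not measurements.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Rough token budget per model call; all values are illustrative."""
    reasoning_tokens: int
    critic_tokens: int
    planner_tokens: int = 0

    def standard_rag(self) -> int:
        # Single pass: one reasoning call, no critic, no planner.
        return self.reasoning_tokens

    def rerag(self, rounds: int) -> int:
        # One planner call, then a reasoning call and a critic call per round.
        return self.planner_tokens + rounds * (self.reasoning_tokens + self.critic_tokens)


model = CostModel(reasoning_tokens=3000, critic_tokens=2000, planner_tokens=500)
baseline = model.standard_rag()
three_rounds = model.rerag(rounds=3)
overhead = three_rounds / baseline
```

Under these assumed numbers, three rounds cost 15,500 tokens against a 3,000-token baseline, roughly five times as much, and that is before counting the extra retrieval calls and added latency.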

Orchestration Complexity

Coordinating multiple agents and managing the reflection loop introduces significant design challenges. Systems must handle agent communication, decide when iteration is complete, and manage cases where agents disagree or the reflection process doesn't converge on a satisfactory solution.
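Deciding when to stop is one of the more tractable pieces of this problem. A minimal sketch, assuming the Critic emits a numeric quality score between 0 and 1 each round (an assumption, since critics may just as well return pass/fail), with hypothetical thresholds:

```python
from typing import List, Optional


def stop_reason(
    scores: List[float],
    threshold: float = 0.8,
    max_rounds: int = 4,
    min_gain: float = 0.02,
    patience: int = 2,
) -> Optional[str]:
    """Return why the loop should stop, or None if it should continue.

    scores holds the Critic's score for each completed round, oldest first.
    """
    if not scores:
        return None
    if scores[-1] >= threshold:
        return "accepted"
    if len(scores) >= max_rounds:
        return "budget_exhausted"
    if len(scores) > patience:
        recent = scores[-(patience + 1):]
        gains = [later - earlier for earlier, later in zip(recent, recent[1:])]
        # No meaningful improvement for `patience` consecutive rounds.
        if all(gain < min_gain for gain in gains):
            return "stalled"
    return None
```

The "stalled" outcome covers the non-convergence case: rather than looping until the budget runs out, the system can return its best answer so far and mark it as unverified.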

Validation and Trust

While the Critic Agent improves output quality, it also introduces new questions about validation. How do we ensure the Critic's judgment is sound? What happens when the Critic incorrectly identifies issues or misses real problems? Building robust oversight mechanisms becomes crucial.
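One simple oversight mechanism is to audit the Critic against a human-labeled sample: for each response, record whether the Critic flagged it and whether a reviewer judged it actually flawed. The sketch below computes precision and recall from such a sample. The function name and the five-item sample are hypothetical.

```python
from typing import List, Tuple


def critic_precision_recall(
    flagged: List[bool], truly_flawed: List[bool]
) -> Tuple[float, float]:
    """Compare Critic flags with human judgments on the same responses."""
    if len(flagged) != len(truly_flawed):
        raise ValueError("both lists must describe the same responses")
    pairs = list(zip(flagged, truly_flawed))
    true_pos = sum(1 for f, t in pairs if f and t)
    false_pos = sum(1 for f, t in pairs if f and not t)   # Critic cried wolf
    false_neg = sum(1 for f, t in pairs if not f and t)   # Critic missed a flaw
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Hypothetical audit sample of five responses.
flagged = [True, True, False, False, True]
truly_flawed = [True, False, True, False, True]
precision, recall = critic_precision_recall(flagged, truly_flawed)
```

Low precision means the Critic triggers needless extra rounds, while low recall means real problems reach the user. The two failure modes call for different fixes, so it is worth tracking them separately.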

Agent Design and Tuning

Each agent requires careful prompt engineering and fine-tuning to perform its specialized role effectively. This adds complexity to system development and maintenance, requiring expertise in multi-agent system design and ongoing optimization efforts.

Future Directions

Persistent Memory and Learning

Future ReRAG systems could maintain memory across interactions, learning from past reflection cycles to improve future performance. Agents could build understanding of common error patterns and develop better strategies for specific types of queries.

Autonomous Improvement

Advanced systems might develop the ability to learn from their mistakes autonomously, analyzing cases where reflection led to improved outcomes and adjusting their processes accordingly. This could lead to self-improving ReRAG systems that get better over time.

Integration with Planning Frameworks

The rise of agent orchestration frameworks like LangGraph, CrewAI, and OpenAgents provides new opportunities for implementing sophisticated Multi-Agent ReRAG systems. These platforms could simplify the coordination challenges and provide standardized approaches to agent communication.

Benchmarking and Evaluation

Evolving ReRAG systems further requires better evaluation metrics, ones that go beyond simple factual accuracy. New benchmarks should assess reasoning quality, consistency, and the effectiveness of the reflection process itself.
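As one possible starting point for measuring the reflection process itself, we can compare answer correctness before and after reflection on the same set of queries. The metric names below (`fix_rate`, `break_rate`, `net_gain`) are our own labels for this sketch rather than established benchmark terms, and the sample data is invented.

```python
from typing import Dict, List


def reflection_effect(before: List[bool], after: List[bool]) -> Dict[str, float]:
    """Summarize how reflection changed per-query correctness.

    before[i] / after[i]: was the answer to query i correct before and
    after the reflection loop ran.
    """
    if len(before) != len(after) or not before:
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(before, after))
    fixed = sum(1 for b, a in pairs if not b and a)    # wrong -> right
    broken = sum(1 for b, a in pairs if b and not a)   # right -> wrong
    wrong_before = sum(1 for b in before if not b)
    right_before = len(before) - wrong_before
    return {
        "fix_rate": fixed / wrong_before if wrong_before else 0.0,
        "break_rate": broken / right_before if right_before else 0.0,
        "net_gain": (fixed - broken) / len(before),
    }


# Invented sample: five queries graded before and after reflection.
before = [False, False, True, True, False]
after = [True, False, True, False, True]
effect = reflection_effect(before, after)
```

Reporting `break_rate` alongside `fix_rate` matters because a reflection loop can also talk a system out of a correct answer, which accuracy alone would hide.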

Conclusion

Multi-Agent Reflective RAG advances retrieval-augmented systems by introducing the critical capability of self-reflection and iterative improvement. By distributing reasoning tasks across specialized agents and implementing feedback loops, ReRAG systems can achieve higher accuracy, deeper reasoning, and greater reliability than traditional approaches.

The architecture offers compelling advantages for complex reasoning tasks, from enterprise knowledge management to technical analysis. However, implementing these systems requires careful consideration of computational costs, coordination complexity, and validation challenges.

As advanced retrieval systems continue to evolve, we encourage practitioners to experiment with reflective agents in their industries and functions. Start with simple reflection mechanisms, gradually introduce specialized agents, and build experience with multi-agent coordination. The insights gained from these experiments will help shape the next generation of intelligent information systems.

Looking ahead, Multi-Agent ReRAG will likely play an important role in the development of more sophisticated AI systems. As we move toward increasingly autonomous and capable AI agents, the ability to reflect, self-correct, and iterate will become ever more important. ReRAG offers a glimpse into this future, where AI systems not only retrieve and generate information but also engage in the kind of critical thinking and refinement that characterizes human expertise.