The Model Context Protocol: Understanding Its Limits and Planning Your Agent Stack

The Model Context Protocol (MCP) received significant fanfare as a standardized way for AI agents to access tools and external systems. Anthropic's launch generated enthusiasm in the AI community, particularly among developers building local and experimental agentic systems. But as organizations move from proof-of-concept to production deployments, MCP's limitations are becoming apparent.

This isn't a story about MCP "failing" or being replaced overnight. Rather, MCP is settling into its actual role: one integration pattern among many, useful in specific contexts but insufficient as the primary fabric for enterprise agentic systems. Understanding where MCP fits (and where it doesn't) is essential for anyone building production-grade agent infrastructure.

The Core Problems

Security Remains an Afterthought

MCP's biggest liability is security. The protocol leaves authentication, authorization, and token handling largely to implementers (the OAuth-based authorization flow added in later spec revisions is optional and applies only to HTTP transports), which has predictably led to problematic patterns in real-world deployments. Shared admin tokens, missing per-user scoping, and a lack of SSO integration, audit logging, or fine-grained permissions are common across MCP server implementations.
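To make "per-user scoping" concrete, here is a minimal sketch of the check a tool server could run instead of relying on one shared admin token. The `UserToken` type, the scope names, and the tool names are all hypothetical; MCP itself defines none of them.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UserToken:
    """Hypothetical per-user credential carrying explicit scopes."""
    user_id: str
    scopes: frozenset[str] = field(default_factory=frozenset)


# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPE = {
    "crm.read_contact": "crm:read",
    "crm.delete_contact": "crm:admin",
}

# In-memory stand-in for an audit log; a real system would persist this.
AUDIT_LOG: list[dict] = []


def authorize(token: UserToken, tool_name: str) -> bool:
    """Allow the call only if the caller's own token holds the required scope."""
    required = REQUIRED_SCOPE.get(tool_name)
    # Unknown tools are denied by default (least privilege).
    allowed = required is not None and required in token.scopes
    # Record every decision, allowed or denied, for later audit.
    AUDIT_LOG.append({"user": token.user_id, "tool": tool_name, "allowed": allowed})
    return allowed
```

A caller holding only `crm:read` can read contacts but not delete them, and both decisions end up in the audit trail.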

Independent security reviews have found high rates of command injection vulnerabilities and other attack vectors, particularly in servers exposed remotely or pulled from public registries. For organizations with compliance requirements or handling sensitive customer data, this creates unacceptable risk.
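Command injection usually enters when a tool interpolates model-supplied text into a shell string. The sketch below shows one common mitigation for a hypothetical `ping` tool: validate the argument against a conservative pattern and build an argument list rather than a shell command. The function name and the pattern are illustrative assumptions, not taken from any particular MCP server.

```python
import re

# Conservative hostname pattern: must start and end with an alphanumeric
# character, so shell metacharacters and leading "-" options are rejected.
_HOSTNAME = re.compile(r"^[A-Za-z0-9]([A-Za-z0-9.-]{0,251}[A-Za-z0-9])?$")


def build_ping_argv(host: str) -> list[str]:
    """Return an argv list for a hypothetical 'ping' tool.

    The list form is meant for subprocess.run(argv) without shell=True,
    so the host value is never parsed by a shell.
    """
    if not _HOSTNAME.fullmatch(host):
        raise ValueError(f"rejected host: {host!r}")
    return ["ping", "-c", "1", host]
```

Validation and list-form arguments are complementary: the first rejects hostile input early, the second ensures that anything slipping through is still treated as a single argument.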

No Trust Infrastructure

There's no standard way to discover, vet, or version MCP servers and tools. Organizations are building ad-hoc registries, manually pinning versions, or pulling from open catalogs with limited provenance controls. This creates governance headaches and makes it easy for a compromised tool to propagate across multiple agents and environments.

The lack of a trust model means every organization is solving the same problems independently, even though mature API ecosystems addressed them years ago.
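As an illustration of what manual pinning can look like, the sketch below checks a downloaded server artifact against an internal allowlist of version and SHA-256 digest. The registry contents, server name, and function name are hypothetical; this is one possible provenance check, not a mechanism MCP provides.

```python
import hashlib
import hmac

# Hypothetical internal allowlist: server name -> (pinned version, SHA-256 digest).
# The digest here is computed from placeholder bytes purely for illustration.
PINNED = {
    "weather-server": ("1.4.2", hashlib.sha256(b"trusted build bytes").hexdigest()),
}


def verify_artifact(name: str, version: str, artifact: bytes) -> bool:
    """Accept an artifact only if its name, version, and digest match the pin."""
    pinned = PINNED.get(name)
    if pinned is None:
        return False  # Servers not on the allowlist are rejected outright.
    pinned_version, pinned_digest = pinned
    digest = hashlib.sha256(artifact).hexdigest()
    return version == pinned_version and hmac.compare_digest(digest, pinned_digest)
```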

Performance and Transport Constraints

MCP exchanges JSON-RPC 2.0 messages, over stdio for local servers or HTTP for remote ones, and hands tool results to the model largely as unstructured text. This becomes a performance and cost bottleneck when agents make frequent tool calls or stream large resources into finite context windows. For high-frequency, low-latency tool usage, text-based JSON-RPC carries more per-message overhead than binary RPC or other compact protocols.
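To get a feel for the envelope overhead, the sketch below encodes the same small tool call two ways: as a JSON-RPC request and as a fixed binary layout. The tool name, the numeric tool ID, and the binary layout are assumptions made up for this comparison. It measures payload size only, not latency.

```python
import json
import struct

# A JSON-RPC 2.0 request in the shape of an MCP tool call (tool name is hypothetical).
request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "get_temperature", "arguments": {"station_id": 1042}},
}
json_bytes = json.dumps(request, separators=(",", ":")).encode("utf-8")

# Hypothetical fixed binary layout carrying the same information:
# request id (uint32), numeric tool id (uint16), station id (uint32), little-endian.
binary_bytes = struct.pack("<IHI", 7, 3, 1042)

print(f"JSON-RPC payload: {len(json_bytes)} bytes")
print(f"binary payload:   {len(binary_bytes)} bytes")
```

The binary form is smaller because both sides agree on the schema ahead of time. That agreement is exactly what a self-describing text format trades away for flexibility.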

Because MCP is model-agnostic and text-centric, the LLM must infer tool semantics and interpret raw responses. This exacerbates hallucinations and makes robust, typed behavior harder to achieve. Poorly specified tools and inconsistent schemas across community servers magnify these problems, leading to incorrect tool selection, extra calls, and brittle workflows.
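One way to recover typed behavior is to validate model-produced arguments before a tool ever runs. The sketch below does this for a hypothetical weather tool; the `WeatherArgs` fields and the 1 to 14 day range are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeatherArgs:
    """Typed arguments for a hypothetical weather-forecast tool."""
    city: str
    days: int


def parse_weather_args(raw: dict) -> WeatherArgs:
    """Validate untrusted, model-produced arguments and return a typed value."""
    unknown = set(raw) - {"city", "days"}
    if unknown:
        raise ValueError(f"unexpected arguments: {sorted(unknown)}")
    city = raw.get("city")
    days = raw.get("days")
    if not isinstance(city, str) or not city:
        raise ValueError("city must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(days, bool) or not isinstance(days, int) or not 1 <= days <= 14:
        raise ValueError("days must be an integer between 1 and 14")
    return WeatherArgs(city=city, days=days)
```

Rejecting malformed calls at the boundary turns a silent misinterpretation into an explicit error the agent can react to.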

The Interoperability Gap

Many "MCP servers" are thin wrappers over existing APIs, often exposing a reduced capability surface and offering little beyond what a well-documented REST integration already provides. Tool vendors are also ambivalent about being commoditized as generic MCP tools, which has slowed first-party adoption.

The developer experience and operational tooling remain immature. Testing MCP tools at scale is difficult: error messages are opaque, standardized telemetry is limited, and debugging cross-server workflows can be time-consuming. Teams end up reinventing policy, monitoring, and rollout mechanisms that mature API gateways already provide.

The Emerging Architecture

Rather than being "replaced," MCP is being absorbed into more opinionated orchestration layers. The pattern that's emerging treats MCP as plumbing under higher-level agent frameworks, not as the dominant integration fabric.

Agent Frameworks Take the Lead

LangChain/LangGraph, CrewAI, and similar tool-orchestration stacks increasingly treat MCP as one of several backends rather than the core abstraction. These frameworks add:

- Typed tool definitions, routing logic, and multi-agent planning on top of any tool interface (MCP, REST, direct function calling)

- Built-in observability, auth hooks, and governance that address MCP's practical gaps without waiting for the spec to evolve

In this model, developers work with an agent server or graph framework that can speak MCP, but also native APIs, OpenAPI-generated tools, and custom RPC. Policy and risk controls live above the protocol layer.
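The "MCP as one of several backends" idea can be sketched as a small router that hides the transport behind a common interface. All class names here are hypothetical and do not correspond to LangChain, LangGraph, or CrewAI APIs. `StubMcpBackend` is a stand-in that returns a description of the call, where a real backend would talk to an MCP server.

```python
from typing import Any, Callable, Protocol


class ToolBackend(Protocol):
    """Common interface every backend (MCP, REST, direct function) implements."""
    def invoke(self, name: str, arguments: dict[str, Any]) -> Any: ...


class FunctionBackend:
    """Calls plain Python functions in-process."""
    def __init__(self, functions: dict[str, Callable[..., Any]]) -> None:
        self._functions = functions

    def invoke(self, name: str, arguments: dict[str, Any]) -> Any:
        return self._functions[name](**arguments)


class StubMcpBackend:
    """Stand-in for an MCP client; a real one would send a tools/call request."""
    def invoke(self, name: str, arguments: dict[str, Any]) -> Any:
        return {"via": "mcp", "tool": name, "arguments": arguments}


class ToolRouter:
    """Maps tool names to backends; policy hooks would live here, above the protocol."""
    def __init__(self) -> None:
        self._routes: dict[str, ToolBackend] = {}

    def register(self, tool_name: str, backend: ToolBackend) -> None:
        self._routes[tool_name] = backend

    def call(self, tool_name: str, arguments: dict[str, Any]) -> Any:
        backend = self._routes.get(tool_name)
        if backend is None:
            raise KeyError(f"unknown tool: {tool_name}")
        return backend.invoke(tool_name, arguments)
```

Because callers only see `ToolRouter.call`, a tool can move from an MCP server to a direct function without touching the agent logic.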

Alternative Protocols Fill Gaps

New proposals like UTCP (Universal Tool Calling Protocol) aim to decouple tool calling from MCP's assumptions by using strongly typed, compiler-checked schemas and more constrained execution. These designs emphasize stricter validation, better sandboxing, and easier migration between cloud and local LLMs.

Some teams are exploring binary transports like Cap'n Proto or gRPC to replace MCP's JSON-RPC layer, cutting message overhead and improving throughput while maintaining MCP-like abstractions at the edges.

Platform-Native Tool Layers

Cloud and SaaS platforms are starting to offer platform-native agent tool layers that can host MCP-style tools but wrap them in enterprise-grade auth, rate-limiting, and observability. In these environments, MCP becomes an optional compatibility layer while the primary contract is the platform's own typed tools, policies, and eventing model.

Practical Guidance: When to Use MCP

The right approach depends on your deployment context and risk profile.

Use MCP when you want:

- Rapid integration and sharing of tools across clients (Jan, Claude Desktop, VS Code extensions) and frameworks where MCP is already supported

- Small, modular tool servers (weather, calendaring, CRM querying) that benefit from a microservice-like model and can be safely wrapped behind an internal MCP server, possibly fronted by an API gateway

- Quick prototyping and experimentation in local or single-user environments

Avoid MCP as the primary abstraction when you need:

- Minimal latency, with no extra hop or wrapper server (direct function calling on top of your existing API is the better fit)

- A strict security posture with per-user auth, strong audit trails, and least privilege (let your API gateway or service mesh own security and expose tools via UTCP/OpenAPI, using MCP only for compatibility at the edges if needed)

- High-frequency tool calling or streaming large resources where the JSON-RPC overhead becomes a bottleneck
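The "avoid" criteria above can be written down as a simple checklist function. The field and function names are hypothetical, and the rule is deliberately blunt: any single criterion argues against MCP as the primary abstraction, though MCP may still be used at the edges for compatibility.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical checklist mirroring the three 'avoid' criteria."""
    needs_minimal_latency: bool = False
    needs_per_user_auth_and_audit: bool = False
    high_frequency_or_large_streams: bool = False


def mcp_as_primary(req: Requirements) -> bool:
    """Return True only if none of the 'avoid' criteria applies."""
    return not (
        req.needs_minimal_latency
        or req.needs_per_user_auth_and_audit
        or req.high_frequency_or_large_streams
    )
```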

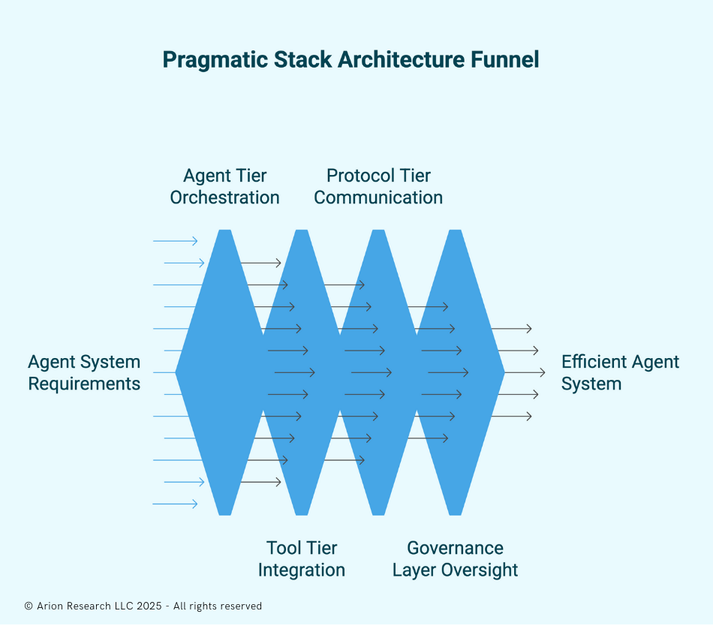

A Pragmatic Stack Architecture

Here's how this plays out in practice for a production agent system that needs RAG, web access, and CRM/research tools:

Agent Tier: Use LangGraph or similar to define your reasoning graph, multi-agent coordination, and memory/RAG. This tier exposes a single endpoint to the outside world and acts as your orchestration layer.

Tool Tier:

- Core business systems (CRM, email, calendar, newsletter platform) exposed as native HTTP APIs behind your API gateway, described via OpenAPI, and registered as tools through UTCP manifests or framework tool definitions

- Selected "commodity" tools (browser automation, generic utilities) kept as MCP servers for reuse with Jan and other MCP-aware clients

Protocol Tier:

- Inside your agent application, prefer direct function tools, OpenAPI tools, or UTCP-style descriptors for low-latency, strongly typed internal calls

- Expose the whole agent as an MCP endpoint so Jan or other MCP-capable front ends can use your agent as just another MCP tool, without knowing about your internal protocols
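Exposing the agent as a single MCP tool comes down to answering two JSON-RPC methods, `tools/list` and `tools/call`. The sketch below is a simplified handler under several assumptions: `run_agent` and the `ask_agent` tool are hypothetical, the message shapes follow my reading of the MCP tools specification, and the initialization handshake, notifications, and transport are all omitted. A production server would more likely use an official MCP SDK.

```python
import json


def run_agent(question: str) -> str:
    """Placeholder for the internal agent graph (hypothetical)."""
    return f"answer to: {question}"


# Tool descriptor advertised to MCP clients; the name and schema are assumptions.
AGENT_TOOL = {
    "name": "ask_agent",
    "description": "Send a question to the internal agent.",
    "inputSchema": {
        "type": "object",
        "properties": {"question": {"type": "string"}},
        "required": ["question"],
    },
}


def _reply(msg_id, *, result=None, error=None) -> str:
    """Build a JSON-RPC 2.0 response with either a result or an error."""
    body = {"jsonrpc": "2.0", "id": msg_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle_message(raw: str) -> str:
    """Handle one JSON-RPC request and return the serialized response."""
    msg = json.loads(raw)
    msg_id, method = msg.get("id"), msg.get("method")
    params = msg.get("params") or {}
    if method == "tools/list":
        return _reply(msg_id, result={"tools": [AGENT_TOOL]})
    if method == "tools/call":
        if params.get("name") != AGENT_TOOL["name"]:
            return _reply(msg_id, error={"code": -32602, "message": "Unknown tool"})
        question = (params.get("arguments") or {}).get("question", "")
        text = run_agent(question)
        return _reply(
            msg_id,
            result={"content": [{"type": "text", "text": text}], "isError": False},
        )
    return _reply(msg_id, error={"code": -32601, "message": "Method not found"})
```

The front end sees one tool. Whatever the agent does internally (direct functions, OpenAPI tools, other protocols) stays hidden behind `run_agent`.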

Governance Layer: Put an API gateway or service mesh in front of your internal tools, and treat MCP servers as clients of that gateway rather than peers. Use your agent server's built-in telemetry to track tool usage, failure modes, and costs.
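If your agent server lacks built-in telemetry, the same tracking can be approximated with a thin wrapper around each tool. This is a minimal in-process sketch. The `instrumented` decorator, the `STATS` store, and the `crm.lookup` tool are hypothetical, and a real deployment would export these numbers to a metrics system.

```python
import time
from collections import defaultdict
from functools import wraps

# Per-tool counters kept in memory for illustration only.
STATS = defaultdict(lambda: {"calls": 0, "failures": 0, "total_seconds": 0.0})


def instrumented(tool_name: str):
    """Decorator that records call count, failures, and cumulative latency."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            STATS[tool_name]["calls"] += 1
            try:
                return fn(*args, **kwargs)
            except Exception:
                STATS[tool_name]["failures"] += 1
                raise  # Re-raise so the agent still sees the failure.
            finally:
                STATS[tool_name]["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator


@instrumented("crm.lookup")
def lookup_contact(contact_id: int) -> dict:
    """Hypothetical CRM tool used to exercise the wrapper."""
    if contact_id < 0:
        raise ValueError("contact_id must be non-negative")
    return {"id": contact_id}
```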

The Bottom Line

A practical 2025 agent stack treats MCP as one integration option sitting under an agent server or graph layer, with UTCP and plain APIs filling in where MCP is too heavy or risky, and your governance living above all three.

For local, exploratory, single-user workflows, MCP-first approaches make sense. For team or department agents with moderate risk, a mixed approach (LangGraph as the brain, a combination of MCP and direct tools, and a gateway managing auth) works well. For enterprise-grade or regulated agents, the gateway plus UTCP/OpenAPI should be your canonical interface, with MCP only as a compatibility shim where absolutely needed.

The key insight is that MCP solved a real problem (standardized tool access for AI agents) but did so at the wrong layer of abstraction for production systems. As the ecosystem matures, MCP will remain useful for specific use cases while more sophisticated orchestration layers handle the heavy lifting of security, governance, and performance.

The question for your organization isn't whether to "adopt MCP" or not. It's where MCP fits in your broader agent infrastructure, and what you're putting above and around it to address the gaps the protocol leaves open.