Resilience, Security & Real-Time Collaboration: Building the Foundation for the Agentic Enterprise

“The real-time enterprise of the future will run on trust, not just compute.”

The Convergence of Resilience, Security, and Real-Time Collaboration

The modern enterprise no longer operates within tidy boundaries. Instead, it exists as a node in a dynamic digital ecosystem where agents (both human and AI) must collaborate across organizations, geographies, and technical platforms. This isn't the collaborative work environment of the past decade. We're entering an era where digital teammates work alongside human ones, where AI agents make autonomous decisions in milliseconds, and where the coordination fabric must span not just internal teams and organizational silos, but entire supply chains and partner networks.

As agentic AI and digital workforces emerge from research labs into production environments, real-time coordination has become mission-critical. But speed alone isn't enough. The systems we build must also be trustworthy, resilient to failure, and secure against sophisticated threats. A trading algorithm that executes in microseconds means nothing if it can be manipulated. An autonomous logistics network that reroutes shipments in real-time loses its value if a single point of failure brings down the entire system.

The challenge before us is clear: the next evolution of collaboration architectures must balance speed, security, and scalability across distributed, multi-agent networks.

The Collaboration Challenge in the Agentic Era

From Human Teams to Hybrid Workforces

The workplace has already undergone a dramatic transformation over the past two decades. We moved from conference rooms to video calls, from email chains to real-time messaging platforms, from quarterly planning cycles to agile sprints. But these changes pale in comparison to what's happening now: the rise of digital teammates.

AI agents are no longer experimental tools confined to data science teams. They're embedded in workflows, applications, and infrastructure across the enterprise. A customer service representative might work alongside an AI agent that drafts responses, schedules follow-ups, and escalates issues autonomously. A DevOps engineer might rely on agents that monitor systems, predict failures, and even apply fixes without human intervention. A supply chain manager might coordinate with dozens of AI agents that track shipments, negotiate with suppliers, and optimize routes in real-time.

This shift from synchronous human collaboration to asynchronous and parallel agentic collaboration changes everything. When humans collaborate, we coordinate through meetings, messages, and shared documents. We operate on timescales of minutes, hours, or days. We implicitly understand context, build trust through relationships, and can adapt when plans change.

AI agents, by contrast, can operate in milliseconds. They can process thousands of parallel tasks simultaneously. They can coordinate across time zones without fatigue. But this speed and scale introduce new complexity. How do we maintain coordination when agents act autonomously across different domains and networks? How do we ensure that an agent making a decision in one part of the organization doesn't conflict with decisions being made elsewhere? How do we build systems where hundreds or thousands of agents can work together effectively?

New Vectors of Vulnerability

The promise of agentic collaboration comes with significant risks. Every innovation in coordination creates new attack surfaces and potential failure points.

Consider cross-domain data sharing. When AI agents collaborate across different systems and organizations, they must share information to make decisions. But each data exchange creates an opportunity for interception, manipulation, or leakage. An agent coordinating with external partners might inadvertently expose proprietary algorithms or sensitive customer data. A compromised API endpoint could allow an attacker to inject false information into the decision-making process.

Trust boundaries become dangerously blurred when agents communicate across partner ecosystems or supply chains. In traditional human collaboration, we know who we're talking to. We verify identities through known email addresses, familiar voices, and established relationships. But when Agent A communicates with Agent B across organizational boundaries, how do we verify that Agent B is legitimate? How do we ensure that the data Agent B provides is accurate and hasn't been tampered with in transit?

Real-time systems magnify these risks because they shrink the window for detection. In a batch processing world, there's time to audit transactions, verify outputs, and correct errors before they cause harm. But in real-time systems operating at millisecond latency, one compromised agent can spread damage across distributed processes before any human notices something is wrong. An AI trading agent that's been subtly manipulated could execute thousands of fraudulent transactions in seconds. A compromised logistics agent could reroute shipments to the wrong destinations or reveal sensitive supply chain information to competitors.

Foundations of Resilient and Secure Coordination

Architectural Principles

Building collaboration systems that can withstand these challenges requires rethinking our architectural approach. Three principles must guide our designs:

Decentralized Resilience: Traditional architectures rely on central coordination points, single sources of truth, and hierarchical command structures. These create single points of failure. In an agentic world, resilience must be distributed throughout the network. Each agent should have enough autonomy and redundancy to continue operating even when connections to other parts of the system fail. This doesn't mean chaos or lack of coordination; it means building systems where redundancy and autonomy are designed into the network from the ground up.

Zero-Trust by Design: The old perimeter security model is dead. We can no longer assume that anything inside our network boundary is trustworthy. In a zero-trust architecture, every agent interaction must be authenticated, authorized, and continuously validated. This applies not just to users but to agents themselves. An agent that was trustworthy yesterday might be compromised today. Every request, every data exchange, every decision must be verified in real-time.

Federated Control: Balancing autonomy with oversight becomes critical when agents operate across organizational boundaries. Pure centralization is impossible when you don't control all the infrastructure. Pure decentralization creates coordination chaos. The answer lies in federated control, where distributed governance and secure enclaves allow agents to operate autonomously within defined boundaries while maintaining visibility and oversight across the broader network.
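To make the zero-trust principle concrete, here is a minimal sketch of per-request verification between agents. It assumes a pre-shared secret per agent and uses an HMAC tag, a timestamp, and a nonce; the names AGENT_KEYS, sign_request, and verify_request are hypothetical, and a production system would more likely rely on asymmetric keys or short-lived tokens from an identity provider. It covers authentication and replay protection only, not authorization.

```python
import hashlib
import hmac
import json
import time

# Hypothetical per-agent shared secrets (an assumption for this sketch).
AGENT_KEYS = {"agent-a": b"demo-secret-a"}
MAX_AGE_SECONDS = 30
_seen_nonces = set()


def sign_request(agent_id, payload, nonce, timestamp, key):
    """Return a hex HMAC-SHA256 tag over the canonicalized request."""
    body = json.dumps(
        {"agent": agent_id, "payload": payload, "nonce": nonce, "ts": timestamp},
        sort_keys=True,
    ).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify_request(agent_id, payload, nonce, timestamp, tag, now=None):
    """Check one request on its own; no trust carries over from earlier calls."""
    now = time.time() if now is None else now
    key = AGENT_KEYS.get(agent_id)
    if key is None:
        return False  # unknown agent
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # stale or future-dated request
    if (agent_id, nonce) in _seen_nonces:
        return False  # replayed request
    expected = sign_request(agent_id, payload, nonce, timestamp, key)
    if not hmac.compare_digest(expected, tag):
        return False  # tampered payload or wrong key
    _seen_nonces.add((agent_id, nonce))
    return True
```

The point of the sketch is that every call is checked independently: an agent that passed verification a moment ago gets no credit on the next request.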

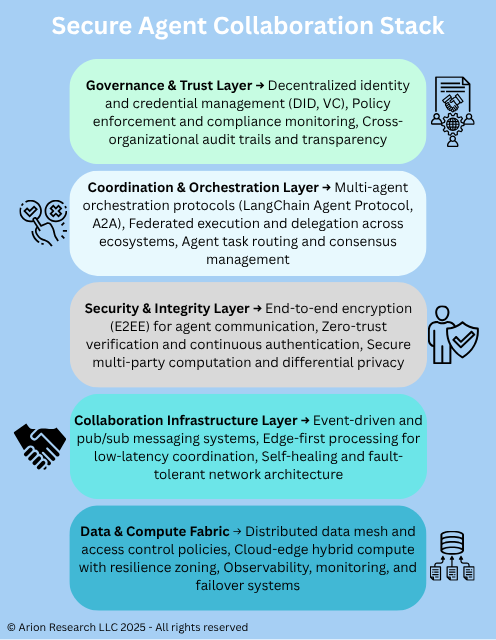

Core Technologies Enabling Security & Scale

These principles come to life through specific technologies that are maturing rapidly:

End-to-end encrypted communication layers ensure that agent-to-agent communication remains secure even when passing through untrusted networks. This goes beyond simple TLS encryption to include secure key exchange protocols, perfect forward secrecy, and authenticated messaging that proves the sender's identity.

Secure multi-party computation (SMPC) and homomorphic encryption enable a powerful capability: agents can collaborate on computations without revealing their underlying data to each other. Imagine three companies wanting to benchmark their performance without revealing actual numbers. Their agents could compute aggregate statistics collaboratively while keeping individual data encrypted throughout the process. This privacy-preserving collaboration will be essential for cross-organizational agent networks.
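The benchmarking scenario can be illustrated with additive secret sharing, one of the simplest building blocks behind SMPC. The sketch below simulates all parties in a single process and assumes they follow the protocol honestly; MODULUS, make_shares, and secure_sum are hypothetical names, and real deployments would use a vetted SMPC framework and authenticated channels.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus; every input must be smaller than this


def make_shares(value, n_parties):
    """Split value into n random shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values):
    """Simulate the parties and return only the aggregate total."""
    n = len(private_values)
    # Row i holds the shares that party i hands out, one per party.
    distributed = [make_shares(v, n) for v in private_values]
    # Party j adds the j-th share from everyone and publishes that subtotal.
    subtotals = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    return sum(subtotals) % MODULUS


# Three companies learn the combined figure without seeing each other's number.
total = secure_sum([120, 340, 95])
```

Each individual share looks like random noise, so no single party learns another's input; only the published subtotals, and therefore the total, are revealed.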

Blockchain and distributed ledger technologies provide tamper-evident coordination logs. While blockchain often gets dismissed as hype, its core capability (creating records of transactions across untrusted parties that cannot be altered without detection) solves real problems in agent coordination. When agents make decisions collaboratively, having an auditable, immutable record of who decided what and when becomes invaluable for compliance, governance, debugging, and trust-building.
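The core idea behind such a record can be sketched without a full ledger: each entry stores the hash of the one before it, so altering any past entry breaks the chain. This is a single-writer illustration with hypothetical names (append_entry, verify_log); it leaves out the consensus and replication that a distributed ledger adds on top.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, record):
    """Hash a record together with the hash of the previous entry."""
    body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(log, record):
    """Append a record linked to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)}
    )


def verify_log(log):
    """Recompute every link; any edited or reordered entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"agent": "procurement-1", "decision": "approve", "order": 17})
append_entry(log, {"agent": "compliance-2", "decision": "flag", "order": 17})
```

An auditor who holds only the latest hash can later detect whether anything earlier in the log was rewritten.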

Secure orchestration frameworks and protocols, such as the LangChain Agent Protocol (LAP) or the Agent-to-Agent (A2A) standard, create safe communication channels between agents. They define standard ways for agents to discover each other, negotiate capabilities, exchange data, and coordinate actions while enforcing security policies throughout.

Low-Latency Collaboration: Real-Time without Compromise

Why Latency Matters

Speed isn't just a nice-to-have feature in modern collaboration systems; in many cases, it's the entire point. Consider these time-sensitive use cases:

In autonomous logistics, delays of even seconds can mean missed loading windows, traffic congestion, or failed deliveries. An AI agent coordinating a fleet of delivery vehicles must react to traffic changes, weather conditions, and new orders in real-time, adjusting routes for dozens of vehicles simultaneously.

In financial trading, microseconds matter. High-frequency trading systems already operate at timescales where the speed of light becomes a constraint. As AI agents take on more complex trading strategies, the coordination between risk assessment agents, execution agents, and compliance agents must happen faster than human perception.

In industrial automation, manufacturing systems rely on precise timing. When robotic agents coordinate assembly line operations, sensor agents monitor quality, and maintenance agents predict failures, latency directly impacts production efficiency and product quality.

In customer experience, users expect instant responses. When a customer asks a question, AI agents must coordinate across knowledge bases, transaction systems, and personalization engines to deliver coherent answers in milliseconds, not seconds.

Yet we face what I call the tradeoff triangle: Speed vs. Security vs. Scalability. Traditional thinking suggests you can only pick two. Fast and secure systems don't scale. Scalable and fast systems aren't secure. Secure and scalable systems are slow. But the agentic enterprise demands all three.

Approaches to Achieving Real-Time Coordination

Breaking through the tradeoff triangle requires innovation across multiple layers:

Edge-First Designs move inference and decision-making closer to data sources. Rather than sending all data to centralized servers for processing, edge computing enables agents to run directly where data is generated, whether that's on IoT devices, in local data centers, or at retail locations. This dramatically reduces network latency while improving privacy by keeping sensitive data local.

Event-Driven Architectures replace traditional request-response patterns with asynchronous messaging, reactive streams, and publish-subscribe models. Instead of agents constantly polling for updates, they subscribe to relevant event streams and react instantly when something changes. This approach naturally supports real-time coordination while reducing network overhead and server load.
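The publish-subscribe pattern can be shown with a small in-process sketch. EventBus and the topic name are hypothetical, and delivery here is synchronous within one process; a production system would typically sit on a message broker or streaming platform instead.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish-subscribe hub (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        """Register a callable to run whenever an event arrives on topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        """Deliver event to every subscriber; return how many were notified."""
        handlers = list(self._subscribers.get(topic, ()))
        for handler in handlers:
            handler(event)
        return len(handlers)


bus = EventBus()
reroutes = []

# A routing agent reacts to delays instead of polling for them.
bus.subscribe("shipment.delayed", lambda e: reroutes.append(e["shipment_id"]))
notified = bus.publish(
    "shipment.delayed", {"shipment_id": "S-17", "delay_minutes": 45}
)
```

The publisher does not need to know which agents are listening, which is what lets new agents join the flow without changes to existing ones.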

Local Caching & Consensus Optimization techniques reduce coordination lag between distributed nodes. Agents maintain local caches of frequently accessed data and use sophisticated consensus algorithms to keep caches synchronized. When coordination is required, optimized protocols minimize the number of round-trips needed to reach agreement.

Quantum-safe, low-overhead cryptography addresses a looming challenge. As quantum computers mature, they are expected to break widely used public-key schemes such as RSA and elliptic-curve cryptography. But even before that happens, we need cryptographic approaches that provide strong security without the computational overhead that slows down real-time systems. Emerging post-quantum algorithms combined with hardware acceleration are making near-instant verification possible even with quantum-resistant encryption.

Crossing Organizational Boundaries

The Inter-Enterprise Collaboration Problem

The most complex challenge in agentic collaboration isn't internal coordination. It's getting agents to work together across organizational boundaries.

AI agents must increasingly operate in multi-organizational contexts. A procurement agent at a manufacturer needs to coordinate with supplier agents to optimize inventory. A compliance agent at a healthcare provider must verify data with insurance company agents. A logistics agent at a retailer coordinates with carrier agents, warehouse agents, and customs agents across multiple countries.

But there's no common identity fabric spanning these organizations. Each company has its own authentication systems, access controls, and trust models. An agent that's fully trusted within Company A is a complete unknown to Company B. Traditional solutions like shared credentials or VPN tunnels don't scale to hundreds of organizations and thousands of agents.

Data residency and compliance requirements add another layer of complexity. An agent in Germany must comply with GDPR, which restricts transfers of personal data outside the EU unless specific safeguards are in place. An agent in healthcare must follow HIPAA rules about patient information. An agent in financial services must meet regional banking regulations. When these agents need to collaborate, they must share insights and coordinate decisions while respecting all relevant compliance boundaries.

Auditability becomes critical when agents share state and make decisions across organizations. If something goes wrong (a failed transaction, a compliance violation, a security breach), you need to reconstruct what happened. But in a distributed system spanning multiple organizations, each with its own logging and monitoring infrastructure, creating a coherent audit trail is enormously challenging.

Emerging Innovations

Several innovations are beginning to address these challenges:

Federated Agent Networks enable distributed systems where agents share insights without sharing raw data. Using techniques like federated learning and differential privacy, agents can collaboratively improve their models or aggregate statistics while keeping their underlying data strictly private. A group of hospitals could train better diagnostic models together without ever exposing individual patient records. A consortium of banks could detect fraud patterns collectively without revealing transaction details.
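As a rough illustration of the hospital example, the sketch below shows the aggregation step of federated averaging: each participant trains locally and shares only model weights and a sample count. The function name and the toy numbers are hypothetical. The sketch also omits the differential privacy and secure aggregation that a real deployment would add, since raw model updates can still leak information about the underlying data.

```python
def federated_average(updates):
    """Combine local models into a sample-weighted average.

    updates is a list of (weights, n_samples) pairs, where weights is a list
    of floats with the same length for every participant.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("at least one participant must contribute samples")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Two hospitals contribute locally trained weights; patient records stay local.
global_weights = federated_average(
    [
        ([1.0, 2.0], 100),  # hospital A: weights, number of local samples
        ([3.0, 4.0], 300),  # hospital B
    ]
)
```

Weighting by sample count means a participant with more data has proportionally more influence on the shared model.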

Interoperable Agent Protocols allow secure, standardized communication across organizational boundaries. Just as HTTP enabled websites to communicate regardless of who built them, agent protocols define standard ways for agents to discover capabilities, negotiate interactions, and exchange messages securely. These protocols handle authentication, authorization, encryption, and auditing in standardized ways that work across organizational boundaries.

Digital Trust Frameworks provide shared governance models and credential systems that span organizations. Decentralized identifiers (DIDs) give agents cryptographically verifiable identities that don't depend on any single organization. Verifiable credentials allow organizations to make claims about their agents (e.g., "this agent is authorized to place orders up to $10,000") in a way that other organizations can verify without contacting the issuer. These technologies, borrowed from the identity and blockchain communities, are being adapted to create trust fabrics for agent collaboration. Think of it like a “digital agent passport”: credentials that establish identity, authority, and affiliation.

The New Resilience Model: Self-Healing Collaboration Systems

Resilience in the agentic era looks nothing like traditional disaster recovery. Rather than planning for failures and having humans respond to them, the next generation of collaboration systems must detect, respond to, and recover from failures autonomously.

This starts with continuous monitoring and adaptive response mechanisms. Modern systems generate massive amounts of telemetry: logs, metrics, traces, and events. AI agents can analyze these streams in real-time, detecting anomalies that indicate potential failures, security breaches, or performance degradation. More importantly, they can respond immediately, isolating failing components before problems cascade.
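One simple way to detect anomalies in a telemetry stream is a rolling z-score: flag any value that sits too many standard deviations away from recent history. The sketch below is a deliberately basic stand-in for the learned detectors an AI agent might use. AnomalyDetector and its default parameters are hypothetical choices.

```python
from collections import deque
from statistics import mean, pstdev


class AnomalyDetector:
    """Flag values that deviate sharply from a rolling window of history."""

    def __init__(self, window=30, threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if value looks anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                is_anomaly = abs(value - mu) / sigma > self.threshold
            else:
                is_anomaly = value != mu
        if not is_anomaly:
            # Keep outliers out of the baseline so they do not mask later ones.
            self.history.append(value)
        return is_anomaly


detector = AnomalyDetector()
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 500, 101]
flags = [detector.observe(x) for x in latencies_ms]
```

In this toy latency series only the 500 ms spike is flagged; the response to that flag (isolation, rerouting, alerting) is a separate decision.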

Consider a microservices architecture with hundreds of services and thousands of agent instances. When one service begins behaving abnormally (perhaps due to a memory leak, a corrupted cache, or a subtle attack), traditional monitoring might generate alerts for human operators to investigate. But by the time humans respond, the problem could have affected dozens of downstream services. Self-healing systems detect the anomaly within milliseconds and automatically isolate the affected service, reroute traffic to healthy instances, and spin up replacements.
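The isolate-and-reroute behavior described here is often implemented with a circuit breaker. The sketch below is one minimal version under stated assumptions: the class, its thresholds, and the primary/fallback callables are hypothetical, and spinning up replacement instances is left to the orchestration platform.

```python
import time


class CircuitBreaker:
    """Stop calling a failing service and use a fallback until it recovers."""

    def __init__(self, failure_threshold=3, reset_timeout=10.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed (healthy)

    def call(self, primary, fallback):
        """Run primary unless the circuit is open; otherwise run fallback."""
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # isolated: do not touch the failing service
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = primary()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            return fallback()
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each downstream request, for example breaker.call(call_pricing_service, use_cached_price), where both callables are placeholders for whatever the service and its degraded alternative happen to be.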

Autonomous rollback and graceful degradation become essential capabilities under cyber or operational stress. When systems detect they're under attack or experiencing failures, they must be able to automatically revert to known-good states, disable compromised components, and continue operating in a degraded mode rather than failing completely. This might mean temporarily reducing accuracy in favor of availability, falling back to simpler algorithms that are less resource-intensive, or switching to manual approval for high-risk decisions.

Perhaps most exciting is the role of agentic AI in predictive resilience. Rather than merely reacting to failures, agents can anticipate and prevent failures before they happen. By analyzing patterns in telemetry data, agents can predict when hardware is likely to fail, when services are approaching capacity limits, or when security threats are emerging. This shifts resilience from reactive to proactive.

Consider these real-world examples already emerging:

Supply chain agent networks can detect disruptions (weather events, port delays, supplier issues) before they impact deliveries. When a typhoon threatens a major shipping route, logistics agents automatically reroute shipments, adjust production schedules, and notify affected customers before any delays occur.

AI-powered DevOps agents continuously monitor microservice dependencies, code quality metrics, and deployment patterns. They detect when a new code release might cause issues, when dependencies are becoming outdated and risky, or when service interactions are creating subtle bugs. They can automatically repair microservice dependencies, apply security patches, and even roll back problematic deployments without human intervention.

This level of autonomous resilience isn't just about convenience. In systems operating at the speed and scale of agentic collaboration, human response time simply isn't fast enough. Self-healing capabilities become necessary for survival.

Looking Ahead: Designing the Next Generation of Secure Collaboration Frameworks

Principles for Future-Ready Architectures

As we design the collaboration systems that will power the agentic enterprise, three principles should guide our work:

Security by Default: Security cannot be an afterthought or an optional feature. Every agent interaction must be verified, logged, and governed from the ground up. This means building security into protocols, APIs, and frameworks at the design level, not bolting it on later. It means treating all agents as potentially compromised and requiring continuous authentication. It means assuming that networks are hostile, data can be intercepted, and systems will be attacked.

Interoperability First: The future isn't a single vendor's platform or a proprietary protocol. It's an ecosystem of diverse agents, systems, and organizations that must work together seamlessly. Open protocols enabling coordination between digital ecosystems will be as important to the agentic era as TCP/IP was to the internet era. We must design for interoperability from day one, creating standards that allow agents to collaborate regardless of who built them or which organization deployed them.

Adaptive Resilience: Static security and resilience approaches fail in dynamic environments. Systems must be self-optimizing, evolving with threat landscapes and workload patterns. This means building systems that learn from attacks and adapt their defenses. It means creating architectures that can dynamically reconfigure themselves as traffic patterns change, new threats emerge, or business requirements evolve. Adaptive resilience isn't a feature you implement once. It's an ongoing capability that improves over time.

The Path to Standardization

The good news is we're not starting from scratch. Important standardization efforts are already underway:

The LangChain Agent Protocol defines patterns for agent-to-agent communication, capability discovery, and secure message exchange in language model-based systems. While initially focused on LangChain applications, the protocol's patterns are influencing broader agent communication standards.

The AI Exchange Protocol proposes standards for how AI systems can discover, negotiate with, and collaborate with other AI systems, including secure handshake procedures and capability exchange formats.

The Agent-to-Agent (A2A) standard tackles the specific challenges of agent identity, authorization, and secure message passing across trust boundaries. It defines how agents prove their identity, what permissions they have, and how those permissions can be verified by other agents.

W3C Verifiable Credentials and decentralized identifiers, while developed for human identity, are being adapted for agent identity. These standards provide cryptographically secure ways to issue, present, and verify claims about agents' capabilities and authorizations.

Beyond these technical standards, we need cross-agent governance and audit frameworks. Industry consortia, regulators, and open-source communities all have roles to play in defining what "safe collaboration" means, how we verify that systems meet safety requirements, and how we handle incidents when things go wrong.

The challenge isn't just technical specification; it's governance. Who decides what behaviors are acceptable for autonomous agents? How do we handle disputes when agents from different organizations make conflicting decisions? What recourse exists when an agent causes harm? These questions require collaboration between technologists, policymakers, lawyers, and ethicists.

The Trust Layer for the Digital Workforce

The next evolution of digital collaboration isn't just about speed, though real-time coordination matters. It isn't just about intelligence, though AI capabilities continue to advance rapidly. The next innovation is about trust.

Building trust and resilience into every agent interaction will define the success of the agentic enterprise. Organizations that master secure, low-latency, resilient collaboration across agent networks will gain enormous competitive advantages. They'll be able to coordinate complex operations faster, respond to disruptions more effectively, and collaborate with partners more seamlessly than their competitors.

But those that fail to get security and resilience right will face catastrophic risks. A compromised agent network could leak sensitive data, execute fraudulent transactions, or disrupt critical operations. Failures in a brittle system could cascade into business-threatening outages. A collaboration architecture that can't scale could become a bottleneck as agent deployments grow.

The vision we must work toward is clear: a secure, low-latency coordination fabric where human and AI agents collaborate seamlessly across ecosystems. This fabric will be built on zero-trust principles, secured by end-to-end encryption and privacy-preserving computation, and made resilient through self-healing capabilities and adaptive defenses. It will use open, interoperable protocols that work across organizational boundaries. And it will balance the need for speed with the imperatives of security and reliability.

We're in the early stages of this journey. The technologies exist or are emerging. The standards efforts are underway. The architectural patterns are being proven in production deployments. What we need now is sustained focus, collaboration across organizations and communities, and commitment to building not just fast systems or smart systems, but trustworthy systems.

The agentic enterprise depends on it. The future of digital collaboration demands it. And the pace of technological change means we must act now to build the trust layer that will enable the digital workforce to reach its full potential.

The convergence of resilience, security, and real-time collaboration isn't just a technical challenge. It's the foundation upon which the next generation of business, innovation, and human-AI partnership will be built.