Conflict Resolution Playbook: When Agents (and Organizations) Clash

“Conflict isn’t failure; it’s feedback. The future of AI governance will depend on how well our agents learn to disagree.”

When Digital Teammates Disagree

The future of work isn't just human anymore. As AI agents become autonomous team members and entire organizations operate through distributed agent networks, we face a new challenge: what happens when these digital workers disagree?

Picture this: Your AI purchasing agent decides to order supplies from Vendor A based on cost optimization, while your sustainability agent flags Vendor A for poor environmental practices. Your scheduling agent books critical compute resources at 2 PM, but your analytics agent already reserved that same time slot for quarterly reporting. These aren't edge cases. They're the daily reality of multi-agent systems.

Just as human organizations have HR departments, management hierarchies, and conflict resolution procedures, our digital workforces need structured approaches to handle disagreement. The difference? Agent conflicts happen at machine speed, across distributed networks, with consequences that can cascade through entire ecosystems in milliseconds. The goal isn't to eliminate conflict. It's to harness it as valuable feedback that makes our agent systems smarter, more resilient, and ultimately more aligned with human values.

The Nature of Conflict in Multi-Agent Systems

What Makes Agents Clash?

Agent conflicts arise from predictable sources, many of which mirror human organizational tensions:

Resource contention sits at the heart of most disputes. When multiple agents need the same compute cluster, database access, or API rate limits, someone loses. Unlike humans, who might politely defer or negotiate over coffee, agents lacking proper protocols can deadlock (each waiting on a resource the other holds) or hit race conditions (both acting on the same resource at once), freezing or corrupting entire workflows.

Goal misalignment occurs when agents optimize for different metrics. A customer service agent trained to maximize satisfaction might promise same-day delivery, while a logistics agent optimizing for cost efficiency plans three-day shipping. Both agents are doing their jobs perfectly. The conflict arises from incomplete coordination of their objectives.

Ethical and policy divergence creates some of the trickiest scenarios. Imagine an AI hiring agent that optimizes purely for resume keywords, while a fairness agent monitors for demographic bias. Their different ethical boundaries create tension that requires careful arbitration.

Communication failures plague distributed systems. When Agent A sends a message to Agent B, latency, dropped packets, or ambiguous formatting can create misunderstandings. An agent might interpret silence as agreement when it's actually a network timeout.

The Human-Organization Parallel

These agent conflicts aren't fundamentally different from human workplace disputes. When two department heads fight over budget allocation, that's resource contention. When sales promises features that engineering hasn't planned, that's goal misalignment. When legal blocks a marketing campaign for compliance reasons, that's ethical divergence.

The parallels run deep. Agents, like employees, can be territorial about their domains. They respond to incentive structures that may not align with organizational goals. Power asymmetries emerge when certain agents control critical resources or have stronger influence in decision-making processes.

Understanding these parallels helps us adapt decades of organizational behavior research to agent system design. The insights from management science, game theory, and institutional economics directly apply to multi-agent coordination.

Conflict as Feature, Not Bug

Well-designed systems treat conflict as valuable feedback rather than system failure. When agents disagree, they're surfacing genuine tensions in priorities, constraints, or information that human designers may have overlooked.

A conflict between cost optimization and quality assurance agents reveals an unresolved business question: where does the company want to position itself on the quality-price spectrum? The agents aren't malfunctioning. They're exposing a strategic ambiguity that needs human resolution.

Smart systems log these conflicts, analyze their patterns, and use them to evolve better coordination strategies. Each resolved dispute becomes training data for more nuanced future decision-making.

Foundational Protocols for Resolution

Governance Structures: Who Decides?

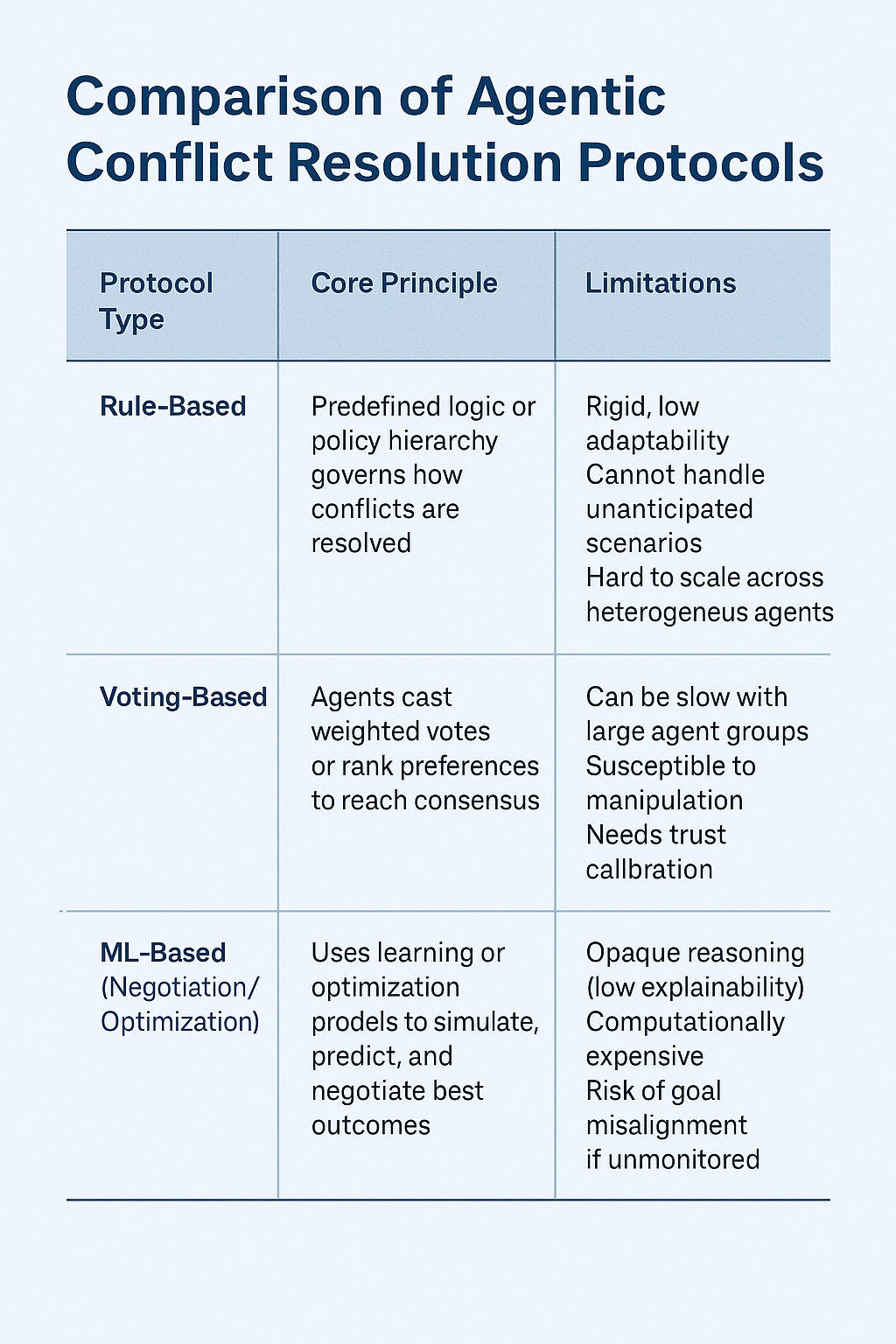

The architecture of authority shapes how agents resolve disputes. Three primary models dominate:

Hierarchical control mirrors traditional corporate structures. A supervisor agent (or human overseer) makes final decisions when subordinate agents disagree. This model works well when authority domains are clear and provides fast resolution, but it creates bottlenecks and single points of failure: the supervisor becomes overwhelmed as the number of conflicts grows.

Federated models distribute authority across domains. The database access agent governs storage conflicts, the security agent arbitrates authentication disputes, and the resource scheduler manages compute allocation. This approach scales better but requires clear domain boundaries and handoff protocols when conflicts span multiple jurisdictions.

Decentralized control eliminates central authority entirely. Agents negotiate peer-to-peer, using consensus mechanisms to reach collective decisions. This approach maximizes resilience and avoids bottlenecks but can be slower and may struggle with urgent, time-sensitive conflicts.

For blockchain-based or DAO (Decentralized Autonomous Organization) agents, smart contracts provide on-chain arbitration. The resolution logic lives in immutable code that all parties trust, creating transparent, auditable conflict resolution without central intermediaries.

Priority Rules: The Pecking Order of Goals

When agents with equal authority clash, priority rules provide tie-breakers:

Rule-based hierarchies encode explicit preferences. "Safety over speed" means the security agent can always override the performance optimizer. "Customer satisfaction over cost reduction" gives the support agent veto power on budget-cutting measures. These rules work well when priorities are clear and stable, but struggle with nuanced trade-offs.

Context-aware prioritization adjusts rankings dynamically. During normal operations, cost optimization might trump speed. But when system health metrics show critical issues, the reliability agent gains priority. During a security incident, the security agent's decisions override all others. During peak business hours, customer-facing agents get preference for shared resources.

This dynamic re-ranking requires agents to monitor system state and adjust their negotiation strategies accordingly. An agent that typically defers might assert itself when context indicators show its domain becoming critical.

Timeboxing and Deferral: Buying Time to Think

Not every conflict needs immediate resolution. Timeboxing gives agents a fixed window to negotiate before escalating to higher authority. If two agents can't agree on resource allocation within 30 seconds, a supervisor intervenes.

Deferral mechanisms temporarily table conflicts when immediate resolution isn't critical. If two analytics agents want the same data processing window but neither has urgent deadlines, the system queues the conflict for later resolution when more information is available or resource pressure decreases.

These temporal strategies prevent minor disagreements from escalating into system-wide deadlocks. They also buy time for more sophisticated negotiation processes to run.
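A minimal sketch of timeboxing combined with deferral, assuming a hypothetical `negotiate_round` callback that returns the agreed winner's name or `None`. The 30-second window from the text becomes the `timebox_s` parameter; the rule that non-urgent conflicts are queued while urgent ones escalate is an illustrative assumption, not a prescribed policy.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Conflict:
    resource: str
    parties: tuple[str, str]
    urgent: bool


def resolve_with_timebox(
    conflict: Conflict,
    negotiate_round: Callable[[Conflict, int], Optional[str]],
    timebox_s: float,
    deferred: deque,
    max_rounds: int = 1_000,
) -> str:
    """Negotiate until agreement or until the timebox expires.

    Returns the winning agent's name, "deferred", or "escalated".
    """
    deadline = time.monotonic() + timebox_s
    for round_no in range(max_rounds):
        if time.monotonic() >= deadline:
            break
        winner = negotiate_round(conflict, round_no)  # hypothetical callback
        if winner is not None:
            return winner
    # Out of time (or rounds): table non-urgent conflicts, escalate urgent ones.
    if not conflict.urgent:
        deferred.append(conflict)
        return "deferred"
    return "escalated"
```

A caller would drain `deferred` later, for example once resource pressure drops, and hand anything returned as `"escalated"` to a supervisor.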

Voting and Consensus Mechanisms

Classical Voting Systems Adapted for Agents

When multiple agents must collectively decide, voting provides a structured approach:

Majority voting works for binary decisions among equal-weight agents. Should the system proceed with a deployment? If more than 50% of monitoring agents report green status, deploy. It is simple and fast, but vulnerable to correlated errors: if many agents are miscalibrated in the same way, the majority will be wrong together.

Ranked-choice voting handles multi-option scenarios better. Five agents must choose among three vendors, and each agent ranks all options. If no option has a majority of first-choice votes, the system eliminates the option with the fewest first-choice votes and transfers those ballots to each agent's next remaining preference, repeating until one option has majority support. This captures more nuanced preferences than a single vote and tends to favor broadly acceptable options over polarizing ones.
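A minimal sketch of ranked-choice counting in its common instant-runoff form, where each round eliminates the option with the fewest first-choice votes. Vendor names are placeholders, and breaking elimination ties alphabetically is an assumption made only so the result is deterministic.

```python
from collections import Counter


def instant_runoff(ballots: list[list[str]]) -> str:
    """Ranked-choice (instant-runoff) winner.

    Each ballot lists options from most to least preferred. Every round, each
    ballot counts toward its highest-ranked option still in the race. If no
    option holds a strict majority, the option with the fewest first-choice
    votes is eliminated and the count repeats.
    """
    remaining = {option for ballot in ballots for option in ballot}
    if not remaining:
        raise ValueError("no options on any ballot")
    while True:
        tally = Counter()
        for ballot in ballots:
            for option in ballot:
                if option in remaining:
                    tally[option] += 1
                    break
        leader = max(tally, key=tally.get)
        if 2 * tally[leader] > sum(tally.values()) or len(remaining) == 1:
            return leader
        # Eliminate the weakest option; ties broken alphabetically (an assumption).
        weakest = min(remaining, key=lambda opt: (tally[opt], opt))
        remaining.discard(weakest)


ballots = [
    ["vendor_a", "vendor_b", "vendor_c"],
    ["vendor_a", "vendor_c", "vendor_b"],
    ["vendor_b", "vendor_c", "vendor_a"],
    ["vendor_c", "vendor_b", "vendor_a"],
    ["vendor_c", "vendor_b", "vendor_a"],
]
print(instant_runoff(ballots))  # vendor_c
```

Plurality alone would tie vendor_a and vendor_c at two first-choice votes each; the runoff eliminates vendor_b, transfers its ballot to vendor_c, and vendor_c wins 3 to 2.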

Weighted voting accounts for expertise and track record. An agent with 95% historical accuracy gets more vote weight than one with 70% accuracy. The security agent's vote might count triple when the decision involves authentication protocols, while the cost optimizer gets extra weight on procurement decisions.
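A minimal sketch of weighted voting where an agent's base weight is its historical accuracy and a per-domain multiplier boosts it on decisions within its expertise. The agent names, accuracies, and the 3x and 2x multipliers are hypothetical values chosen to mirror the paragraph above.

```python
def weighted_vote(
    votes: dict[str, str],
    accuracy: dict[str, float],
    decision_domain: str,
    domain_multiplier: dict[tuple[str, str], float],
) -> tuple[str, dict[str, float]]:
    """Return the winning option and each option's total weight.

    An agent's weight is its historical accuracy times any multiplier it holds
    for this decision domain (default 1.0).
    """
    totals: dict[str, float] = {}
    for agent, choice in votes.items():
        weight = accuracy[agent] * domain_multiplier.get((agent, decision_domain), 1.0)
        totals[choice] = totals.get(choice, 0.0) + weight
    return max(totals, key=totals.get), totals


accuracy = {"security": 0.90, "cost_optimizer": 0.95, "performance": 0.80}
bonus = {("security", "authentication"): 3.0, ("cost_optimizer", "procurement"): 2.0}
votes = {"security": "require_mfa", "cost_optimizer": "skip_mfa", "performance": "skip_mfa"}

print(weighted_vote(votes, accuracy, "authentication", bonus)[0])  # require_mfa
print(weighted_vote(votes, accuracy, "general", bonus)[0])         # skip_mfa
```

On an authentication decision the security agent's tripled weight (2.7) outweighs the other two combined (1.75); with no domain bonus the same votes go the other way.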

Consensus Protocols from Distributed Computing

The world of distributed systems offers battle-tested consensus algorithms that agents can adapt:

Paxos and Raft solve the problem of getting multiple nodes to agree on a single value even when some nodes fail or messages get lost. For agents, this translates to reliable collective decision-making across unreliable networks. When five logistics agents across different data centers need to agree on a delivery route, Raft ensures they reach consensus even if one agent crashes mid-negotiation.

Byzantine Fault Tolerance (BFT) handles malicious or corrupted agents. While Paxos and Raft assume agents are honest but might crash or lose messages, BFT protocols work even when some agents intentionally send false or conflicting information. For high-stakes scenarios like financial transactions or safety-critical systems, classical BFT protocols guarantee that the honest agents reach a correct decision as long as fewer than one-third of all agents are faulty (n ≥ 3f + 1).

The computational overhead of these protocols is significant, so systems typically reserve them for critical decisions where reliability trumps speed.
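The fault-tolerance arithmetic behind these protocols is worth seeing concretely. This sketch uses the standard bounds (n ≥ 2f + 1 agents for crash faults, n ≥ 3f + 1 for Byzantine faults) and the usual quorum-intersection argument; it computes group sizes only and does not implement Raft or any BFT protocol.

```python
def max_faults(n: int, byzantine: bool) -> int:
    """Largest number of faulty agents f that a group of n agents can tolerate.

    Crash faults (Paxos, Raft): n >= 2f + 1.  Byzantine faults (classical BFT): n >= 3f + 1.
    """
    return (n - 1) // 3 if byzantine else (n - 1) // 2


def quorum_size(n: int, byzantine: bool) -> int:
    """Smallest group whose agreement can be committed safely.

    Crash faults: any two majorities overlap in at least one agent.
    Byzantine faults: any two quorums must overlap in at least f + 1 agents, so the
    overlap always contains an honest one; that requires ceil((n + f + 1) / 2).
    """
    if not byzantine:
        return n // 2 + 1
    f = max_faults(n, byzantine=True)
    return (n + f + 2) // 2  # integer form of ceil((n + f + 1) / 2)


for n in (4, 5, 7):
    print(n, "agents | crash:", max_faults(n, False), "faults, quorum", quorum_size(n, False),
          "| byzantine:", max_faults(n, True), "faults, quorum", quorum_size(n, True))
```

For the five logistics agents in the Raft example, a three-agent majority commits a decision and up to two crashes are survivable; the same five agents can tolerate only one Byzantine agent, and need four matching votes to commit.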

Reputation-Based Weighting: Earning Influence Over Time

Static weighting based on agent type isn't enough. Dynamic reputation systems adjust influence based on performance history:

An agent that consistently makes accurate predictions earns higher reputation scores, giving its votes more weight. An agent that repeatedly triggers false alarms sees its influence decrease. This creates incentive alignment: agents that perform well gain more control over collective decisions.

Example in action: Three procurement agents debate compute resource allocation. Agent A proposes reserving capacity for predicted traffic spikes. Agent B suggests spreading resources evenly. Agent C recommends cost-saving spot instances. The system checks their historical accuracy:

- Agent A: 87% accuracy on traffic predictions, reputation score 8.7

- Agent B: 72% accuracy, reputation score 7.2

- Agent C: 81% accuracy, reputation score 8.1

Assuming each agent backs its own proposal, Agent A's proposal carries 8.7 "votes," Agent B's carries 7.2, and Agent C's carries 8.1, so Agent A wins the reputation-weighted decision. But if Agent A's prediction proves wrong, its reputation drops, reducing its influence in future debates.
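A minimal sketch of the three-agent example: each agent's support counts with its current reputation, and reputation then moves toward 10 after a correct prediction or toward 0 after a wrong one. The exponential-moving-average update and its `rate` of 0.1 are assumptions; the example does not specify how reputation changes.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    reputation: float  # 0..10; in the example, historical accuracy x 10


def reputation_weighted_choice(proposals: dict[str, str], agents: dict[str, Agent]) -> str:
    """Each agent's support for a proposal counts with the agent's current reputation."""
    support: dict[str, float] = {}
    for agent_name, proposal in proposals.items():
        support[proposal] = support.get(proposal, 0.0) + agents[agent_name].reputation
    return max(support, key=support.get)


def update_reputation(agent: Agent, was_correct: bool, rate: float = 0.1) -> None:
    """Move reputation a fraction `rate` of the way toward 10 (correct) or 0 (wrong)."""
    target = 10.0 if was_correct else 0.0
    agent.reputation += rate * (target - agent.reputation)


agents = {n: Agent(n, r) for n, r in [("A", 8.7), ("B", 7.2), ("C", 8.1)]}
proposals = {"A": "reserve_capacity", "B": "spread_evenly", "C": "spot_instances"}
print(reputation_weighted_choice(proposals, agents))  # reserve_capacity
update_reputation(agents["A"], was_correct=False)     # A's spike prediction misses
print(round(agents["A"].reputation, 2))               # 7.83
print(reputation_weighted_choice(proposals, agents))  # spot_instances
```

One wrong prediction is enough to drop Agent A below Agent C, so the next comparable decision goes to Agent C's proposal.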

Machine Learning-Based Negotiation

Negotiation Models: Teaching Agents to Bargain

Machine learning transforms conflict resolution from rigid rules to adaptive bargaining:

Reinforcement learning (RL) for iterative negotiation lets agents learn optimal bargaining strategies through experience. Two agents negotiate data storage allocation. Each round, they propose splits. If they agree, both get rewards proportional to their share. If they deadlock, both get penalties.

Over thousands of simulated negotiations, agents learn patterns: starting with moderate proposals works better than extreme demands. Making small concessions signals willingness to cooperate. Reciprocating the other agent's concessions builds trust and accelerates agreement.
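The patterns above (moderate openings, small concessions, reciprocity) can be written down as a fixed strategy. This sketch hand-codes them in an alternating-offers loop over a 100-unit storage pool; it is not a learned RL policy, which would discover something like this rather than being given it. The field names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Negotiator:
    name: str
    demand: float       # share of the storage pool this agent currently asks for
    reservation: float  # smallest share it will ever accept
    min_step: float     # smallest concession it makes when it concedes at all

    def concede(self, opponent_last_concession: float) -> float:
        """Reciprocate the opponent's last concession (at least min_step), never below reservation."""
        step = max(self.min_step, opponent_last_concession)
        new_demand = max(self.reservation, self.demand - step)
        conceded = self.demand - new_demand
        self.demand = new_demand
        return conceded


def negotiate(a: Negotiator, b: Negotiator, pool: float = 100.0,
              max_rounds: int = 50) -> Optional[dict[str, float]]:
    """Alternating offers: the proposer keeps its demand and offers the remainder."""
    proposer, responder = a, b
    last_concession = 0.0
    for _ in range(max_rounds):
        offered = pool - proposer.demand
        if offered >= responder.demand:
            return {proposer.name: proposer.demand, responder.name: offered}
        # Rejected: the responder makes a reciprocal concession and proposes next.
        last_concession = responder.concede(last_concession)
        proposer, responder = responder, proposer
    return None  # deadlock: in the RL framing, both agents are penalized


deal = negotiate(Negotiator("storage-a", 70.0, 40.0, 5.0), Negotiator("storage-b", 70.0, 45.0, 5.0))
print(deal)  # {'storage-a': 50.0, 'storage-b': 50.0}
```

If both reservation values were 60, no split could satisfy both and `negotiate` would return `None`, the deadlock case the reward scheme is meant to discourage.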

Generative negotiation uses AI models to simulate possible resolutions before committing to one. Before sending a counteroffer, an agent generates 100 potential negotiation paths and their likely outcomes. It chooses the strategy with the highest expected value, accounting for the other agent's likely responses.

This approach works particularly well in complex, multi-issue negotiations where agents must trade off across multiple dimensions simultaneously.
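A minimal Monte Carlo sketch of "simulate before committing": for each candidate counteroffer, sample many plausible opponents and score the expected payoff. The opponent model (a reservation share drawn around 40 units, with acceptance odds rising as the offer exceeds it) is entirely hypothetical, and each rollout is a single step rather than a full negotiation path.

```python
import random


def acceptance_probability(offer_to_them: float, their_floor: float) -> float:
    """Hypothetical opponent model: 50% at their floor, +5 percentage points per unit above it."""
    return min(1.0, max(0.0, 0.5 + (offer_to_them - their_floor) / 20.0))


def choose_counteroffer(
    candidate_shares: list[float],
    pool: float = 100.0,
    rollouts: int = 100,
    seed: int = 0,
) -> tuple[float, float]:
    """Simulate each candidate `rollouts` times; return the best share and its expected value."""
    rng = random.Random(seed)
    best_share, best_value = candidate_shares[0], float("-inf")
    for my_share in candidate_shares:
        payoff = 0.0
        for _ in range(rollouts):
            # Uncertainty about the opponent: its reservation share is sampled,
            # here assumed to be normally distributed around 40 units.
            their_floor = rng.gauss(40.0, 5.0)
            if rng.random() < acceptance_probability(pool - my_share, their_floor):
                payoff += my_share  # accepted: we keep our share
            # rejected: this simplified one-shot rollout scores it as 0
        expected = payoff / rollouts
        if expected > best_value:
            best_share, best_value = my_share, expected
    return best_share, best_value


print(choose_counteroffer([45.0, 50.0, 55.0, 60.0]))
```

Greedier offers win more when accepted but are accepted less often; the expected-value comparison is what balances the two.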

Multi-Objective Optimization: Balancing Competing Metrics

Real conflicts rarely involve a single metric. An agent must balance cost, latency, fairness, accuracy, and energy consumption simultaneously. Multi-objective optimization techniques help agents find solutions that satisfy multiple constraints:

Pareto optimization identifies solutions where improving one metric requires sacrificing another. The set of all such solutions creates a "Pareto frontier." Rather than arguing over which single metric matters most, agents can explore the frontier and choose solutions based on current context.

During normal operations, a system might operate at a Pareto-optimal point favoring cost efficiency. During peak demand, it shifts to a different point on the frontier favoring latency. Same fundamental trade-offs, different contextual priorities.
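A minimal sketch of computing a Pareto frontier over two metrics and then letting context pick a point on it. The option names and (cost, latency) figures are hypothetical.

```python
Metrics = tuple[float, float]  # (cost in USD per hour, p95 latency in ms); lower is better


def dominates(x: Metrics, y: Metrics) -> bool:
    """x dominates y if it is no worse on every metric and strictly better on at least one."""
    return all(a <= b for a, b in zip(x, y)) and any(a < b for a, b in zip(x, y))


def pareto_frontier(options: dict[str, Metrics]) -> list[str]:
    """Options that no other option dominates (an option never dominates itself)."""
    return [name for name, m in options.items()
            if not any(dominates(other, m) for other in options.values())]


def pick(options: dict[str, Metrics], frontier: list[str], metric_index: int) -> str:
    """Context chooses the point on the frontier: 0 favors cost, 1 favors latency."""
    return min(frontier, key=lambda name: options[name][metric_index])


options = {
    "spot": (1.0, 120.0),
    "reserved": (2.0, 40.0),
    "on_demand": (3.0, 40.0),   # dominated by "reserved"
    "hybrid": (1.5, 80.0),
    "legacy": (2.5, 130.0),     # dominated by "spot"
}
frontier = pareto_frontier(options)
print(frontier)                    # ['spot', 'reserved', 'hybrid']
print(pick(options, frontier, 0))  # normal operations: spot
print(pick(options, frontier, 1))  # peak demand: reserved
```

Dominated options never need to be argued over; the only real debate is where on the frontier to sit, which is exactly the contextual choice described above.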

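Weighted scalarization converts multiple objectives into a single score. An agent first normalizes each metric so that higher is better (turning cost and energy use into savings, for example), then combines 0.4 * cost + 0.3 * speed + 0.2 * accuracy + 0.1 * energy efficiency into one metric to optimize. Without that normalization, a metric that should be minimized would be rewarded for growing. The weights encode relative importance and can adjust dynamically.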
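A minimal sketch of weighted scalarization with the 0.4/0.3/0.2/0.1 weights, assuming min-max normalization and that lower cost and lower energy use are better. The plan names and metric values are hypothetical.

```python
WEIGHTS = {"cost": 0.4, "speed": 0.3, "accuracy": 0.2, "energy": 0.1}
HIGHER_IS_BETTER = {"cost": False, "speed": True, "accuracy": True, "energy": False}


def normalize(values: list[float], higher_is_better: bool) -> list[float]:
    """Min-max scale to [0, 1], flipping metrics where lower raw values are better."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def scalarize(options: dict[str, dict[str, float]],
              weights: dict[str, float] = WEIGHTS) -> dict[str, float]:
    """Weighted sum of normalized metrics; higher scores are better."""
    names = list(options)
    scores = {name: 0.0 for name in names}
    for metric, weight in weights.items():
        column = normalize([options[n][metric] for n in names], HIGHER_IS_BETTER[metric])
        for name, value in zip(names, column):
            scores[name] += weight * value
    return scores


plans = {
    "plan_a": {"cost": 100.0, "speed": 10.0, "accuracy": 0.90, "energy": 50.0},
    "plan_b": {"cost": 150.0, "speed": 20.0, "accuracy": 0.95, "energy": 40.0},
}
scores = scalarize(plans)
print(max(scores, key=scores.get))  # plan_b
```

plan_a wins only on cost (0.4), while plan_b wins speed, accuracy, and energy (0.3 + 0.2 + 0.1 = 0.6). Shifting weight toward cost, as a context-aware policy might during a budget squeeze, flips the result.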

Cooperative vs. Competitive Frameworks

The mathematical framework shapes negotiation dynamics profoundly:

Nash equilibria from game theory identify stable states where no agent benefits from unilaterally changing its strategy. These work well for competitive scenarios where agents have genuinely opposed interests. But Nash equilibria can be suboptimal for the collective. Both agents might be better off cooperating, but individual incentives keep them locked in a less efficient equilibrium.
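A minimal sketch that finds pure-strategy Nash equilibria by checking every action pair for profitable unilateral deviations. The share-or-hoard game and its payoffs are illustrative numbers in the classic prisoner's dilemma pattern.

```python
from itertools import product

# payoffs[(row_action, col_action)] = (row_agent_payoff, col_agent_payoff)
Payoffs = dict[tuple[str, str], tuple[float, float]]


def pure_nash_equilibria(payoffs: Payoffs) -> list[tuple[str, str]]:
    """Action pairs where neither agent gains by unilaterally switching its own action."""
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_pay, col_pay = payoffs[(r, c)]
        row_is_best = all(payoffs[(r2, c)][0] <= row_pay for r2 in rows)
        col_is_best = all(payoffs[(r, c2)][1] <= col_pay for c2 in cols)
        if row_is_best and col_is_best:
            equilibria.append((r, c))
    return equilibria


# Two agents decide whether to share their resource forecasts or hoard them.
game: Payoffs = {
    ("share", "share"): (3, 3),
    ("share", "hoard"): (0, 5),
    ("hoard", "share"): (5, 0),
    ("hoard", "hoard"): (1, 1),
}
print(pure_nash_equilibria(game))  # [('hoard', 'hoard')]
```

The only equilibrium is mutual hoarding with payoffs (1, 1), even though mutual sharing would give both agents 3: the collective inefficiency the paragraph describes.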

Cooperative game theory explores scenarios where agents can form coalitions and make binding agreements. The Shapley value, for instance, allocates a coalition's reward according to each agent's marginal contribution, averaged over every order in which the agents could have joined. This encourages agents to share information and coordinate rather than purely competing.
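A minimal sketch of exact Shapley values by enumerating every order in which agents could join the coalition. The three agents and the coalition values (think of them as cost savings each group could achieve) are hypothetical.

```python
from itertools import permutations
from math import factorial
from typing import Callable, FrozenSet, Iterable


def shapley_values(players: Iterable[str],
                   value: Callable[[FrozenSet[str]], float]) -> dict[str, float]:
    """Exact Shapley values by enumerating every join order (fine for a handful of agents)."""
    players = list(players)
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition: FrozenSet[str] = frozenset()
        for p in order:
            joined = coalition | {p}
            totals[p] += value(joined) - value(coalition)  # p's marginal contribution
            coalition = joined
    return {p: t / factorial(len(players)) for p, t in totals.items()}


# Hypothetical value each coalition of agents can produce on its own.
V = {
    frozenset(): 0, frozenset("A"): 10, frozenset("B"): 20, frozenset("C"): 30,
    frozenset("AB"): 40, frozenset("AC"): 50, frozenset("BC"): 60, frozenset("ABC"): 90,
}
print(shapley_values("ABC", lambda s: V[s]))  # {'A': 20.0, 'B': 30.0, 'C': 40.0}
```

The shares sum to 90, the full coalition's value, and each agent is paid for what it adds on average rather than for what it could earn alone. Enumeration grows factorially, so larger systems typically approximate by sampling orders.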

Mechanism design takes a different approach: how do you structure the rules so that agents pursuing their self-interest produce the desired collective outcome? A sealed-bid second-price (Vickrey) auction, for example, extracts honest valuations from strategic agents: because the winner pays the second-highest bid rather than its own, bidding one's true value is a dominant strategy.
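A minimal sketch of a sealed-bid second-price (Vickrey) auction, the standard example of a mechanism where truthful bidding is a dominant strategy, allocating a shared GPU slot. Bidder names and values are hypothetical; ties go to whichever bidder was listed first.

```python
def vickrey_auction(bids: dict[str, float]) -> tuple[str, float]:
    """Sealed-bid second-price auction: the highest bidder wins and pays the second-highest bid."""
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids, key=bids.get, reverse=True)  # stable: earlier bidder wins ties
    return ranked[0], bids[ranked[1]]


def utility(true_value: float, my_bid: float, other_bids: dict[str, float]) -> float:
    """Payoff to bidder 'me': true value minus price if it wins, otherwise 0."""
    winner, price = vickrey_auction({**other_bids, "me": my_bid})
    return true_value - price if winner == "me" else 0.0


others = {"analytics": 60.0, "reporting": 50.0}
print(utility(80.0, 80.0, others))   # truthful: wins, pays 60 -> 20.0
print(utility(80.0, 55.0, others))   # shading: loses the slot -> 0.0
print(utility(80.0, 100.0, {"analytics": 90.0, "reporting": 50.0}))  # overbidding: -10.0
```

Shading the bid can only lose a slot worth winning, and overbidding can only win a slot at a price above its true value, so the agent does best by reporting what the slot is really worth.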

Real-World Parallels

ML-driven negotiation already shapes critical infrastructure:

Supply chain coordination involves agents negotiating inventory levels, production schedules, and logistics routing across company boundaries. Amazon's fulfillment network, for instance, coordinates thousands of decisions hourly about which warehouse ships which package through which carrier.

Financial trading pits algorithmic agents against each other in negotiations over price, timing, and volume. High-frequency trading systems negotiate implicitly through bid-ask spreads, learning counterparty patterns and adjusting strategies millisecond by millisecond.

Autonomous vehicle coordination at intersections requires real-time negotiation of right-of-way, merging priority, and route selection. Agents must balance individual travel time with overall traffic flow, all while maintaining safety guarantees.

These systems already implement versions of the negotiation frameworks described above, though often in domain-specific ways. The challenge ahead is building general-purpose negotiation protocols that work across domains.

Ethical and Safety Layers

Guardrails: Keeping Ethics in the Room

Effective negotiation can't mean "anything goes." Ethical principles must constrain the solution space:

Hard constraints define boundaries agents cannot cross regardless of efficiency gains. A healthcare agent cannot violate HIPAA privacy rules even if sharing patient data would improve diagnostic accuracy. A financial agent cannot engage in market manipulation even if it increases returns. These constraints are non-negotiable and enforced at the system level.

Soft constraints introduce ethical considerations as weighted factors in optimization. A hiring agent that must balance candidate quality with fairness might score candidates as 0.5 * qualifications + 0.3 * cultural_fit + 0.2 * diversity_contribution. The diversity term doesn't mandate outcomes, but it ensures ethical concerns influence decisions.

Value alignment mechanisms encode human preferences as reward functions. An agent learns not just "maximize profit" but "maximize profit while respecting labor standards, environmental impact, and stakeholder trust." These multi-dimensional reward functions shape how agents trade off competing values during negotiation.

The critical insight: ethics cannot be an afterthought. Guardrails must be architecturally integrated, making unethical resolutions literally impossible rather than merely discouraged.
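One way to make a hard constraint "literally impossible" to trade away is to filter before optimizing, so the optimizer never sees forbidden options. This minimal sketch uses the healthcare example; the flag names, metrics, and weights are hypothetical, and a real system would derive flags from independent policy checks rather than from labels the proposing agent supplies.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hard constraints: any of these flags makes a resolution ineligible, whatever its score.
FORBIDDEN_FLAGS = {"shares_identifiable_patient_data", "market_manipulation"}

# Soft constraints: weighted objectives, each scored in [0, 1] with higher = better.
SOFT_WEIGHTS = {"diagnostic_accuracy": 0.6, "data_minimization": 0.4}


@dataclass
class Resolution:
    name: str
    metrics: dict[str, float]
    flags: set[str] = field(default_factory=set)

    def score(self) -> float:
        return sum(w * self.metrics.get(k, 0.0) for k, w in SOFT_WEIGHTS.items())


def choose(resolutions: list[Resolution]) -> Optional[Resolution]:
    """Filter by hard constraints first, then maximize the soft score among what remains."""
    eligible = [r for r in resolutions if not (r.flags & FORBIDDEN_FLAGS)]
    if not eligible:
        return None  # nothing permissible: escalate rather than relax a hard constraint
    return max(eligible, key=Resolution.score)


options = [
    Resolution("share_raw_records", {"diagnostic_accuracy": 1.0, "data_minimization": 0.5},
               {"shares_identifiable_patient_data"}),
    Resolution("share_deidentified", {"diagnostic_accuracy": 0.8, "data_minimization": 0.7}),
    Resolution("no_sharing", {"diagnostic_accuracy": 0.5, "data_minimization": 1.0}),
]
print(choose(options).name)  # share_deidentified, although share_raw_records scores highest
```

The raw-records option has the best soft score (0.8 against 0.76), but because it is removed before scoring, no choice of weights can ever select it.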

Audit Trails: Learning from Every Conflict

Every conflict and resolution should be logged to a tamper-evident audit trail:

What was disputed? The specific resources, decisions, or priorities in conflict.

Who participated? All agents involved, their stated preferences, and their reputation scores at the time.

How was it resolved? The negotiation process, any voting that occurred, the final decision, and the authority (algorithmic or human) that made it.

What were the outcomes? Did the resolution achieve its intended results? Were there unintended consequences?
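A minimal sketch of a tamper-evident trail for the four questions above, using a SHA-256 hash chain in which each entry commits to the previous entry's hash. The record field names are assumptions. The chain only makes edits detectable: a writer who can rewrite the entire log could recompute every hash, so in practice the latest hash would be anchored somewhere that writer does not control.

```python
import hashlib
import json
import time

GENESIS = "0" * 64


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: each entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"record": record, "prev_hash": prev_hash, "ts": time.time()}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("record", "prev_hash", "ts")}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({
    "disputed": "compute-cluster 14:00-15:00",
    "participants": {"scheduler": 8.7, "analytics": 8.1},  # reputation at the time
    "resolution": {"method": "priority_rule", "winner": "analytics", "decided_by": "algorithm"},
    "outcome": "pending",
})
print(log.verify())  # True
log.entries[0]["record"]["resolution"]["winner"] = "scheduler"  # quiet after-the-fact edit
print(log.verify())  # False
```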

This audit trail serves multiple purposes. It provides accountability when things go wrong. It creates training data for improving future conflict resolution. It lets human supervisors spot systematic issues indicating deeper problems in goal specification or incentive alignment.

In regulated industries, these audit trails may be legally required. Even when not required, they're invaluable for system improvement.

Oversight and Escalation: Knowing When to Call a Human

Automation shouldn't mean removing humans from critical decisions. Well-designed systems know their limits:

Escalation triggers include:

- High-stakes decisions above a threshold (e.g., resource commitments over $100,000)

- Conflicts that remain unresolved after N rounds of negotiation

- Situations where agent confidence scores are uniformly low

- Novel scenarios outside the agents' training distribution

- Ethical ambiguities where the right answer isn't clear
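A meta-agent's trigger check can be as plain as the list above. In this sketch the $100,000 limit comes from the example; the other thresholds, and the assumption that a `novelty_score` is already available from some out-of-distribution detector, are hypothetical.

```python
from dataclasses import dataclass

# Illustrative thresholds (assumptions, not recommendations).
MAX_AUTONOMOUS_USD = 100_000
MAX_ROUNDS = 5
LOW_CONFIDENCE = 0.4
NOVELTY_LIMIT = 0.8


@dataclass
class ConflictState:
    commitment_usd: float
    rounds_without_agreement: int
    confidences: list[float]   # each participant's self-reported confidence, 0..1
    novelty_score: float       # hypothetical out-of-distribution score, 0..1
    ethically_ambiguous: bool  # e.g. raised by a policy checker


def escalation_reasons(s: ConflictState) -> list[str]:
    """Return every trigger that fires; an empty list means agents may proceed alone."""
    reasons = []
    if s.commitment_usd > MAX_AUTONOMOUS_USD:
        reasons.append("high stakes")
    if s.rounds_without_agreement >= MAX_ROUNDS:
        reasons.append("negotiation stalled")
    if s.confidences and max(s.confidences) < LOW_CONFIDENCE:
        reasons.append("uniformly low confidence")  # even the most confident agent is unsure
    if s.novelty_score > NOVELTY_LIMIT:
        reasons.append("novel scenario")
    if s.ethically_ambiguous:
        reasons.append("ethical ambiguity")
    return reasons


print(escalation_reasons(ConflictState(250_000, 2, [0.3, 0.35], 0.2, False)))
# ['high stakes', 'uniformly low confidence']
```

Returning the list of reasons rather than a bare yes/no hands the human reviewer the context needed to make an informed decision.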

Meta-agents can monitor lower-level negotiations and decide when escalation is warranted. These supervisory agents don't make the decisions themselves; they recognize situations requiring human judgment.

Human-in-the-loop protocols define how escalation works in practice. Does the system pause while awaiting human input? Does it implement a default safe action? How quickly must humans respond? What information do they need to make informed decisions?

The goal is keeping humans engaged with consequential choices while offloading routine coordination to agents.

Cross-Organizational Conflict Protocols

When Agents from Different Companies Must Cooperate

The most complex scenarios involve agents that don't share a common owner or infrastructure:

Inter-enterprise collaboration is increasingly common. A procurement agent from Company A negotiates with a sales agent from Company B. A logistics agent coordinates with a warehouse agent across company boundaries. A compliance agent from a regulated firm must verify actions of a vendor's service agent.

These negotiations happen through APIs, message buses, or blockchain networks. But unlike intra-organizational conflicts, there's no shared authority to appeal to. Resolution must emerge from peer negotiation or pre-agreed protocols.

Trust and Verification Mechanisms

Cross-organizational agent interaction requires robust trust infrastructure:

Cryptographic identity ensures agents can verify who they're negotiating with. Digital signatures and certificates confirm that an agent claiming to be Company B's authorized procurement system really is that system and hasn't been spoofed by an attacker.

Secure enclaves allow agents to share sensitive information or execute joint computations without fully trusting each other. With confidential-computing technologies such as Intel SGX, agents can use remote attestation to prove they're running agreed-upon code even if they can't see each other's internal logic.

Reputation exchanges let agents query third-party services for their counterparty's track record. Before negotiating a major contract, Company A's agent checks Company B's agent's history of fulfilling commitments, payment reliability, and dispute frequency. This builds on the same reputation systems discussed earlier but extends them across organizational boundaries.

Verifiable credentials provide cryptographic proof of attributes without revealing underlying data. An agent can prove it has authority to commit up to $1 million in resources without disclosing its actual budget or decision-making process.

Conflict Arbitration Frameworks

When cross-organizational conflicts can't be resolved directly, pre-agreed arbitration mechanisms take over:

Multi-party smart contracts encode dispute resolution logic that all parties agree to before interacting. If Company A's delivery agent and Company B's receiving agent disagree about whether goods arrived on time, the smart contract automatically checks timestamped proof-of-delivery data and weather records (checking for force majeure), then executes the pre-agreed resolution (refund, rescheduling, penalty payments, etc.).

These contracts can be surprisingly sophisticated, encoding decision trees that account for many contingencies. The key advantages: all parties know the rules in advance, the resolution is automatic and transparent, and disputes that fall within the encoded rules need no expensive legal proceedings.

Consortium governance creates shared oversight for ecosystems of collaborating companies. Industry participants jointly fund and govern a neutral arbitration service. When conflicts arise, this service applies pre-agreed rules or provides expert human arbitration when automated resolution fails.

Example: Company A runs an AI purchasing agent optimizing for cost and delivery speed. Company B operates a logistics AI optimizing for route efficiency and fuel consumption. They negotiate a delivery contract:

The agents exchange capability declarations through a standardized API. Company A's agent proposes: "Deliver 1,000 units to Denver by March 15, budget $5,000." Company B's agent analyzes its routes and constraints, counteroffers: "$5,200 for March 15, or $4,800 for March 20."

Company A's agent has learned through RL that accepting a slight delay for a cost saving of roughly 8% ($400 off the $5,200 March 15 price) typically improves total performance in its evaluation framework. It accepts March 20 delivery.

The smart contract locks in terms: $4,800, 1,000 units, Denver, March 20. Both agents cryptographically sign. The contract monitors execution: Company B's logistics agent provides tracking updates to the blockchain. On March 19, the receiving agent at Company A's warehouse cryptographically confirms delivery. The smart contract automatically releases payment.

If Company B had been late, the contract would automatically calculate penalties per the pre-agreed terms and adjust payment. No human intervention needed unless the conflict was outside the contract's encoded decision tree.
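The contract's settlement logic can be sketched as an ordinary function. This is Python standing in for a smart-contract language, not contract code; the 2%-per-day penalty, the 20% cap, the force-majeure waiver, and the calendar year are all assumptions, since the example only says penalties follow "pre-agreed terms."

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class DeliveryTerms:
    price_usd: float
    units: int
    destination: str
    due: date
    penalty_per_day: float = 0.02  # hypothetical: 2% of price per day late
    penalty_cap: float = 0.20      # hypothetical: total penalty capped at 20% of price


def settle(terms: DeliveryTerms, delivered_on: Optional[date], units_confirmed: int,
           force_majeure: bool = False) -> dict:
    """Mimic the contract's pre-agreed decision tree; anything it doesn't cover escalates."""
    if delivered_on is None or units_confirmed < terms.units:
        return {"action": "escalate", "payment_usd": 0.0, "days_late": None}
    days_late = 0 if force_majeure else max(0, (delivered_on - terms.due).days)
    penalty = min(terms.penalty_cap, days_late * terms.penalty_per_day)
    return {"action": "release_payment",
            "payment_usd": round(terms.price_usd * (1 - penalty), 2),
            "days_late": days_late}


# The year is arbitrary; the example in the text gives only month and day.
terms = DeliveryTerms(4800.0, 1000, "Denver", date(2025, 3, 20))
print(settle(terms, date(2025, 3, 19), 1000))                      # on time: full $4,800
print(settle(terms, date(2025, 3, 23), 1000))                      # 3 days late: 6% penalty
print(settle(terms, date(2025, 3, 23), 1000, force_majeure=True))  # penalty waived
print(settle(terms, None, 0))                                      # no confirmation: escalate
```

Anything the decision tree does not cover (here, a missing or partial delivery confirmation) is returned as `"escalate"` rather than guessed at, which is the point where human intervention re-enters.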

Designing a Conflict Resolution Layer

Architectural Blueprint

A complete conflict resolution system follows a structured pipeline:

1. Detection: Monitoring agents continuously watch for conflicts. This includes obvious direct disputes (two agents requesting the same resource) but also subtler misalignments (agents making decisions that create downstream conflicts for other agents, goal drift where an agent's actions diverge from its intended objectives).

Detection systems must be proactive, not reactive. By the time agents are in explicit disagreement, the conflict may have already caused problems. Pattern recognition identifies emerging tensions before they escalate.

2. Analysis: Once a conflict is detected, the system analyzes its nature. Is this a resource contention issue (needs scheduling), a goal misalignment (needs policy clarification), or an ethical boundary disagreement (needs human oversight)?

The analysis phase also assesses stakes. A conflict over 0.01% of system resources might resolve through simple randomization. A conflict over safety-critical decision-making requires much more careful handling.

3. Negotiation: Based on the conflict type and stakes, the system routes to appropriate negotiation mechanisms. Low-stakes resource conflicts might use simple priority rules. Medium-stakes goal conflicts might trigger multi-round RL-based bargaining. High-stakes ethical conflicts escalate to human oversight.

This phase implements the voting systems, consensus protocols, and ML negotiation models discussed earlier.

4. Resolution: The negotiation produces a decision. This decision is implemented, monitored for compliance, and enforced if necessary. Enforcement might mean allocating the disputed resource to the winning agent, updating configuration files to reflect new priorities, or triggering compensating actions if one agent must give up something valuable.

5. Learning: Resolution outcomes feed back into the system. Did the resolution achieve intended goals? Were there unintended consequences? Should the protocols, weights, or prioritization rules be adjusted?

This feedback creates a continuous improvement loop. Early conflicts are messy and require frequent escalation. Over time, the system learns patterns and develops more sophisticated, autonomous resolution capabilities.
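The detection and routing stages of this pipeline can be sketched compactly. Detection here finds overlapping reservations on the same resource, as in the 2 PM scheduling clash from the opening. Routing follows the stage 3 rules, where stakes is a hypothetical normalized score in [0, 1]. The cutoffs (0.001 for randomization, 0.8 for human escalation) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reservation:
    agent: str
    resource: str
    start: int  # minutes since midnight, e.g. 14 * 60 for 2 PM
    end: int


def detect_conflicts(reservations: list[Reservation]) -> list[tuple[Reservation, Reservation]]:
    """Detection: pairs of reservations on the same resource whose time windows overlap."""
    conflicts = []
    for i, a in enumerate(reservations):
        for b in reservations[i + 1:]:
            if a.resource == b.resource and a.start < b.end and b.start < a.end:
                conflicts.append((a, b))
    return conflicts


def route(conflict_type: str, stakes: float) -> str:
    """Analysis and negotiation routing by conflict type and stakes (thresholds hypothetical)."""
    if conflict_type == "ethical" or stakes >= 0.8:
        return "escalate_to_human"
    if conflict_type == "goal_misalignment":
        return "multi_round_bargaining"
    if stakes < 0.001:
        return "randomize"
    return "priority_rules"


res = [
    Reservation("scheduler", "compute-cluster", 14 * 60, 15 * 60),
    Reservation("analytics", "compute-cluster", 13 * 60 + 30, 14 * 60 + 30),
    Reservation("etl", "compute-cluster", 15 * 60, 16 * 60),  # back-to-back, no overlap
    Reservation("backup", "storage", 14 * 60, 15 * 60),       # different resource
]
for a, b in detect_conflicts(res):
    print(a.agent, "vs", b.agent, "->", route("resource_contention", 0.3))
# scheduler vs analytics -> priority_rules
```

Resolution enforcement and the learning loop would sit after `route`, consuming its output and writing outcomes back to an audit log.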

Integration with Governance and Policy Layers

Conflict resolution doesn't exist in isolation. It must integrate with broader agent governance:

Policy engines define the rules, constraints, and priorities that shape negotiation. When policies change (new regulations, strategic pivots, ethical guidelines), the conflict resolution system must adapt accordingly.

Compliance monitoring ensures resolutions don't violate regulatory requirements. This is especially critical in regulated industries where certain conflict resolutions might technically optimize immediate objectives but create legal exposure.

Agent lifecycle management tracks agents being deployed, updated, or retired. New agents might not yet have established reputations. Retiring agents shouldn't influence critical decisions. The conflict resolution system must account for this lifecycle context.

Digital workforce orchestration coordinates not just conflict resolution but overall agent coordination. The conflict resolution layer is one component of a broader agentic AI Ops stack that includes provisioning, monitoring, optimization, and governance.

Feedback to Model Training

Perhaps most importantly, conflict outcomes inform how agents themselves are trained:

Preference learning extracts insights about true system priorities from resolved conflicts. If security agents consistently win disputes with efficiency agents, the system can infer that security carries more weight in practice than the original specification suggested. That revealed preference can then feed back into agent training, making future agents better aligned from the start.
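One way to turn dispute outcomes into revealed priorities is a Bradley-Terry model, which assigns each agent a strength such that P(i beats j) = s_i / (s_i + s_j). This is a swapped-in technique for illustration, not one the text prescribes; the sketch fits it with the standard minorization-maximization update, and the 8-to-2 outcome record is hypothetical.

```python
def bradley_terry(outcomes: list[tuple[str, str]], iterations: int = 200) -> dict[str, float]:
    """Fit Bradley-Terry strengths from (winner, loser) dispute outcomes.

    Minorization-maximization update:
        s_i <- wins_i / sum_j n_ij / (s_i + s_j)
    where n_ij is the number of disputes between i and j.
    """
    wins: dict[str, float] = {}
    games: dict[frozenset, float] = {}
    for winner, loser in outcomes:
        wins[winner] = wins.get(winner, 0.0) + 1
        wins.setdefault(loser, 0.0)
        pair = frozenset((winner, loser))
        games[pair] = games.get(pair, 0.0) + 1
    players = list(wins)
    strength = {p: 1.0 for p in players}
    for _ in range(iterations):
        new = {}
        for i in players:
            denom = sum(games[frozenset((i, j))] / (strength[i] + strength[j])
                        for j in players if j != i and frozenset((i, j)) in games)
            new[i] = wins[i] / denom if denom else strength[i]
        total = sum(new.values())
        strength = {p: s * len(players) / total for p, s in new.items()}  # fix the scale
    return strength


s = bradley_terry([("security", "efficiency")] * 8 + [("efficiency", "security")] * 2)
print(round(s["security"] / (s["security"] + s["efficiency"]), 3))  # 0.8
```

With more agent types and sparser head-to-head records, the fitted strengths give a consistent ranking that could be compared against the priority weights the designers originally specified.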

Adversarial training uses conflict scenarios as training data. Agents learn to anticipate how their proposed actions might conflict with others and proactively adjust to minimize disputes. This reduces conflict frequency over time.

Coordination skill development teaches agents to recognize situations where direct communication and coordination are more efficient than negotiation. Rather than fighting over resources, agents learn to share information about their needs and find mutually beneficial schedules proactively.

Future Directions: Self-Governing AI Ecosystems

Evolution Toward Self-Moderating Systems

The ultimate goal is agent ecosystems that handle increasingly complex conflicts without human intervention:

Meta-learning in conflict resolution means agents don't just resolve individual disputes, but learn to improve their resolution processes. They recognize patterns like "conflicts of type X resolve best using mechanism Y" and automatically route future similar conflicts to the most effective protocol.

Emergent governance structures might develop organically rather than being explicitly designed. As agents interact repeatedly, informal hierarchies, alliances, and conventions emerge. Some agents naturally become trusted arbitrators. Others specialize in particular conflict domains. The system self-organizes into effective governance without central planning.

Adaptive protocol evolution allows the conflict resolution mechanisms themselves to change over time. Early in a system's life, simple rules work fine. As complexity grows and edge cases accumulate, more sophisticated protocols emerge. Rather than humans updating the rules manually, the system proposes, tests, and adopts improved protocols automatically.

Simulation Environments for Training Negotiation Resilience

Before deploying agents into production, we can train their conflict resolution skills in simulation:

Synthetic conflict generation creates challenging scenarios agents must navigate. What happens when three agents all need the same critical resource simultaneously? When an agent receives contradictory instructions from different supervisors? When network partitions make some agents unreachable during negotiation?

By exposing agents to thousands of conflict scenarios in simulation, we build resilience before real stakes come into play. Agents learn edge cases, develop robust fallback strategies, and identify situations where their protocols break down.

Multi-agent reinforcement learning (MARL) environments let agents train together, learning from each other's strategies. Competitive pressure drives innovation. Agents that develop superior negotiation tactics spread their approaches through imitation or through being selected for deployment more frequently.

Adversarial testing puts agents against intentionally difficult opponents trying to exploit weaknesses in their negotiation logic. This identifies vulnerabilities before malicious actors do.

The Rise of Digital Diplomacy

As agent-to-agent interactions span organizations and even jurisdictions, we may see the emergence of "digital diplomacy":

Intermediary agents specialize in facilitating agreements between other agents. They don't have their own stakes in the conflict but act as neutral brokers, translators of different protocol languages, or trusted escrow services.

Cross-ecosystem protocols standardize how agents from different organizations, different industry sectors, or different regulatory regimes interact. Just as human diplomacy established international norms like diplomatic immunity, digital diplomacy might create conventions for agent interaction, data exchange standards, and dispute resolution procedures.

Agent rights and responsibilities frameworks might emerge. If an agent makes a binding commitment, what obligations does its operating organization have to honor that commitment? If an agent causes harm through its actions, who is liable? These questions will require new legal and technical frameworks.

Research Frontiers

Several areas need substantial development:

Hybrid human-agent arbitration boards combine human judgment on ethical and contextual questions with agent capabilities for rapid data analysis and rule application. The human panel might set high-level direction while agent systems implement and monitor detailed resolutions.

Meta-governance protocols create governance for governance. How do we decide which conflict resolution protocols to use? How do we resolve meta-conflicts about whether a conflict resolution was fair? These recursive questions require careful protocol design.

Ethical AI treaties between organizations might establish shared norms for agent behavior. Participating companies commit their agents to certain baseline ethical standards, creating trust and enabling collaboration even between commercial competitors.

Formal verification of resolution protocols uses mathematical proof techniques to guarantee that conflict resolution mechanisms have certain properties: they always terminate (no infinite loops), they're fair under specific definitions, they respect safety constraints, and they can't be gamed by strategic agents.

Psychological modeling of agent conflict explores whether agent conflicts exhibit patterns similar to human cognitive biases, emotional escalation, or irrational commitment to losing strategies. If so, can we design interventions that de-escalate conflicts and improve resolution quality?

Managing Conflict

As we build increasingly sophisticated agent systems, conflict becomes not a bug to be eliminated but a design challenge to be managed. The agents deployed across your organization tomorrow will disagree, compete for resources, and face ethical dilemmas. How those conflicts get resolved will determine whether your agent ecosystem is resilient and aligned or fragile and chaotic.

The frameworks outlined in this playbook offer a starting point: governance structures that define authority, priority rules that encode values, voting and consensus mechanisms that aggregate preferences, machine learning models that enable adaptive negotiation, and ethical guardrails that keep systems aligned with human values.

These systems increasingly mirror and may eventually surpass human organizational sophistication. Just as human societies evolved from simple tribal hierarchies to complex governance institutions over millennia, agent systems will evolve their own forms of coordination, cooperation, and collective decision-making. The difference is the timescale. What took human societies centuries might take agent ecosystems years or even months.

For AI architects: Embed conflict resolution mechanisms early in system design. Don't treat coordination as an afterthought once agents are already deployed and struggling to coexist. Design for conflict from day one.

For policymakers: Begin thinking now about standards, regulations, and frameworks for multi-agent conflict resolution. The questions of agent authority, liability for agent decisions, and cross-organizational agent interaction will need answers before the technology fully matures.

For researchers: The open problems are immense and fascinating. Formal methods for verifying protocol properties. Machine learning techniques for learning better negotiation strategies. Ethical frameworks for acceptable conflict resolution outcomes. Mechanisms for human oversight that scale. This field is rich with opportunities.

The age of truly autonomous, multi-agent AI systems is not a distant future. It's unfolding now in supply chains, financial markets, cloud infrastructure, and digital workplaces. The organizations that thrive will be those that design not just capable agents, but capable agents that can work together despite their differences.

Conflict, properly managed, makes systems stronger. It surfaces hidden tensions, tests assumptions, and drives evolution toward more robust coordination. Build your conflict resolution playbook now. Your digital workforce will thank you for it.