Synthetic Sensors and Surrogate Data: How Agentic AI Fills Gaps and Fights Fraudulent IoT Streams

The Fragility of Real-World Data

What happens when your sensors lie, or simply go silent?

In our hyper-connected world, IoT systems power our most critical infrastructure. Smart factories run around the clock. Energy grids balance supply and demand in real time. Logistics networks track millions of shipments across continents. All of this depends on one thing: continuous, trustworthy data streams flowing from thousands of sensors.

But here's the problem. These sensors fail. They drift out of calibration. They get corrupted by noise. Sometimes, they're deliberately spoofed by bad actors. When the data stops flowing or starts lying, the consequences ripple through entire systems. Automation breaks down. Analytics produce garbage. Safety mechanisms fail to trigger when they should, or trigger when they shouldn't.

The traditional answer has been redundancy and maintenance. Add more sensors. Check them more often. But there's another way, one that doesn't just patch the problem but reimagines how we think about sensing itself. Agentic AI offers a path beyond physical sensors, using intelligence and inference to synthesize and validate the data we need.

The Problem: Data Gaps and Rogue Streams

Sensor networks face predictable, persistent challenges. Hardware degrades over time. Connections drop in and out. Environmental conditions introduce noise. Calibration drifts slowly, imperceptibly, until readings are meaningless. In older systems or harsh environments, you simply can't place sensors everywhere you need them.

Then there are the attacks. Malicious actors can tamper with sensors or spoof their signals. In an era where everything connects to everything, sensor data becomes a target for disruption and deception.

The consequences play out across industries. In predictive maintenance, false alarms cost time and money while real problems go undetected. Supply chains misallocate resources based on bad location or condition data. Smart grids and energy management systems develop vulnerabilities that can cascade into failures. The data we depend on can't always be depended upon.

Enter Synthetic Sensing and Surrogate Data

So what if we could generate the data we need when our sensors fail us?

Synthetic sensors are AI-generated estimates of physical measurements, derived from contextual or related signals. When a temperature sensor goes offline, can we infer temperature from energy consumption, airflow rates, and nearby sensor readings? Often, yes.

Surrogate data takes this further. It's reconstructed or simulated data that approximates missing or unreliable inputs, filling gaps to keep systems running smoothly.

Think of how weather models work. When a weather station goes offline, meteorologists don't just throw up their hands. They interpolate, using readings from surrounding stations and atmospheric models to estimate what's happening in that blind spot. Agentic AI applies the same principle across IoT networks, filling in blind spots intelligently rather than leaving dangerous gaps.
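The weather-station analogy maps onto classic spatial interpolation. The sketch below uses inverse distance weighting (IDW), chosen here for simplicity. Operational weather analysis relies on far richer data assimilation, and the station coordinates and values in the example are hypothetical.

Under IDW, nearer stations get more weight. A station sitting exactly on the target point is returned directly.

```python
import math

def idw_estimate(target, stations, power=2.0):
    """Inverse-distance-weighted estimate at `target` (x, y) from a list of
    (x, y, value) readings taken at neighboring stations."""
    if not stations:
        raise ValueError("need at least one reporting station")
    num = den = 0.0
    for x, y, value in stations:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0.0:
            return value            # a station sits exactly at the target
        w = 1.0 / d ** power        # closer stations count for more
        num += w * value
        den += w
    return num / den
```

For example, a blind spot midway between two stations reporting 10.0 and 20.0 gets an estimate of 15.0, because both stations carry equal weight.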

This matters for three reasons. It enables continuous operations when sensors fail. It enhances resilience against both accidents and attacks. And it helps expose fraud by detecting when data doesn't match the patterns it should.

How Agentic AI Fills the Gaps

Multi-Source Triangulation

Agentic systems don't rely on single sources. They correlate multiple inputs, weaving together evidence from across the network and beyond. Within a sensor network, agents cross-validate readings, checking each sensor against its neighbors. They pull in external data feeds like weather services, geospatial information, and logistics tracking. They compare current readings against historical trends and temporal patterns.

Here's an example. A temperature sensor in a manufacturing facility suddenly reports a spike. A traditional system might trigger an alarm immediately. An agentic system pauses. It checks the energy consumption logs. Are they showing the increased load you'd expect from a heating system working harder? It examines airflow sensor data. Is air moving differently? It looks at nearby temperature sensors. Are they showing similar changes? Only after this triangulation does the agent decide whether to trust the reading, flag it as suspicious, or discard it as noise.
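The decision at the end of that walkthrough can be written down as a small rule. The sketch below encodes one possible policy, assumed for illustration:

- Two or more independent corroborating signals mean the spike is trusted.
- Partial corroboration, or a spike that persists without corroboration, is flagged as suspicious.
- An isolated one-sample blip is treated as noise.

The field names and the cut-offs are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Evidence:
    energy_load_rose: bool   # energy logs show the extra heating load
    airflow_changed: bool    # airflow sensors show a matching change
    neighbors_rose: bool     # nearby temperature sensors moved too
    spike_persisted: bool    # the spike lasted longer than one sample

def triangulate(e: Evidence) -> str:
    """Decide how to treat a temperature spike given corroborating evidence."""
    support = sum([e.energy_load_rose, e.airflow_changed, e.neighbors_rose])
    if support >= 2:
        return "trust"        # independent signals agree: treat as real
    if support == 1 or e.spike_persisted:
        return "suspicious"   # weak corroboration, or a sustained lone spike
    return "noise"            # a one-off blip nothing else supports
```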

Synthetic Estimation and Inference

When data is truly missing, agentic AI can generate synthetic readings based on contextual understanding. This isn't guesswork. It's inference grounded in models trained on how systems actually behave.

Predictive digital twins simulate what a missing sensor would report based on the current state of the system. Time-series forecasting fills short-term gaps by extending recent trends forward. Causal reasoning checks that synthetic values stay consistent with physical laws and system dynamics.

The key benefit is continuity. Operations keep running. Analytics continue. Decision-making doesn't halt. But the system also tracks uncertainty. It knows when it's working with real data versus inferred data, and it adjusts confidence levels accordingly.
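One way to make the real-versus-inferred distinction explicit is to carry it on every reading. The sketch below uses assumed names and an assumed decay rule. It does three things:

- Tags each value as measured or synthetic.
- Lets confidence in a synthetic value decay the longer the physical sensor has been silent.
- Shows a downstream policy that declines to act on low-confidence inferred data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Reading:
    value: float
    synthetic: bool     # True when inferred, False when measured
    confidence: float   # 0.0 (no trust) to 1.0 (full trust)

def measured(value):
    return Reading(value, synthetic=False, confidence=1.0)

def inferred(value, seconds_since_last_real, half_life_s=300.0):
    # Hypothetical decay rule: confidence halves every `half_life_s`
    # seconds that pass without a real measurement.
    confidence = 0.5 ** (seconds_since_last_real / half_life_s)
    return Reading(value, synthetic=True, confidence=confidence)

def usable_for_safety(reading, min_confidence=0.8):
    # Example policy: safety logic acts only on measured or high-confidence values.
    return not reading.synthetic or reading.confidence >= min_confidence
```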

Rogue Detection and Data Forensics

Agentic systems are well suited to spotting bad data. They identify patterns that don't fit, such as statistical anomalies that suggest corruption or fabrication. They detect duplicate entries and timing inconsistencies. When data looks suspicious, agents can quarantine it, down-rank it in decision processes, or flag it for human review.
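The checks listed here can be sketched as a single pass over a stream of (timestamp, value) pairs. The version below flags three kinds of problem:

- Exact duplicates.
- Timestamps that fail to advance.
- Values whose modified z-score marks them as outliers. The score uses the median and MAD, with the conventional 0.6745 scaling and 3.5 cut-off.

The thresholds and the sample stream are illustrative assumptions, not tuned values.

```python
from statistics import median

def audit_stream(samples, z_limit=3.5):
    """Scan (timestamp, value) pairs in arrival order; return (index, reason)."""
    values = [v for _, v in samples]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    findings, seen, last_ts = [], set(), None
    for i, (ts, value) in enumerate(samples):
        if (ts, value) in seen:
            findings.append((i, "duplicate"))
            continue
        seen.add((ts, value))
        if last_ts is not None and ts <= last_ts:
            findings.append((i, "timestamp out of order"))
        else:
            last_ts = ts          # track the latest timestamp seen so far
        # Modified z-score: robust to the outlier inflating the spread.
        if mad > 0 and 0.6745 * abs(value - med) / mad > z_limit:
            findings.append((i, "statistical outlier"))
    return findings
```

Consider the stream `[(0, 20.0), (1, 20.2), (2, 19.9), (2, 19.9), (1, 20.1), (4, 20.0), (5, 80.0)]`. The function reports index 3 as a duplicate, index 4 as out of order, and index 6 as an outlier.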

This extends into security. Agents can communicate findings across networks, building collective intelligence about sensor reliability and detecting systemic fraud or cyber tampering. If multiple agents in different locations see similar anomalies at the same time, that points to a coordinated attack rather than random failures.
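Detecting a coordinated pattern can start with counting how many distinct sites report the same kind of anomaly within the same time window. The sketch below assumes agents share findings as hypothetical (site, timestamp, anomaly type) tuples. It uses fixed, non-overlapping windows for simplicity, so events that straddle a window boundary get split; a sliding window would avoid that.

```python
from collections import defaultdict

def coordinated_windows(anomalies, window_s=600, min_sites=3):
    """anomalies: iterable of (site_id, timestamp_s, anomaly_type).

    Return (window_start, anomaly_type, sites) for every window in which at
    least `min_sites` distinct sites reported the same anomaly type."""
    buckets = defaultdict(set)
    for site, ts, kind in anomalies:
        start = ts - ts % window_s          # fixed, non-overlapping windows
        buckets[(start, kind)].add(site)
    return [(start, kind, sorted(sites))
            for (start, kind), sites in sorted(buckets.items())
            if len(sites) >= min_sites]
```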

The Agentic Data Integrity Loop

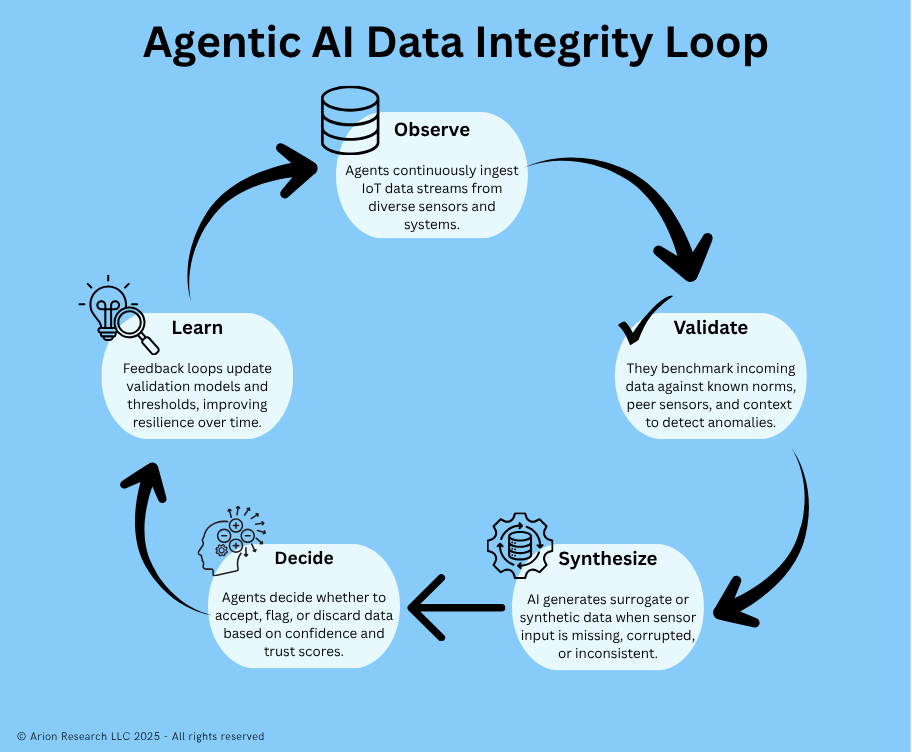

The power of agentic AI comes from its continuous, adaptive approach to data integrity. Think of it as a loop that never stops learning.

Observe: Agents continuously ingest IoT streams, monitoring everything flowing through the network.

Validate: They benchmark incoming data against learned norms, checking it against peer sources and historical patterns.

Synthesize: When inputs fail validation or simply aren't available, they generate surrogate values to fill the gaps.

Decide: Using confidence thresholds, they accept data, flag it for review, or discard it entirely from decision pipelines.

Learn: They update their validation models continuously, getting better at distinguishing good data from bad, normal from anomalous.
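The five steps can be condensed into a single-signal sketch. The class below is a hypothetical illustration, not a reference design. It works as follows:

- It keeps an exponentially weighted mean and variance as its learned norm.
- It substitutes that mean when data is missing or discarded.
- It uses two z-score thresholds for the accept, flag, or discard decision.
- It learns only from accepted readings, so suspicious data cannot drift the norm.

Peer and historical cross-checks from the Validate step are omitted to keep it short.

```python
class IntegrityAgent:
    """Hypothetical single-signal agent that runs Observe -> Validate ->
    Synthesize -> Decide -> Learn on every sample it receives."""

    def __init__(self, mean, var, alpha=0.1, accept_z=2.0, discard_z=4.0):
        self.mean = mean              # learned norm: EWMA mean
        self.var = max(var, 1e-12)    # learned norm: EWMA variance (kept > 0)
        self.alpha = alpha            # learning rate for the norm
        self.accept_z = accept_z      # at or below: accept
        self.discard_z = discard_z    # above: discard and substitute

    def step(self, value):
        """Return (decision, value_passed_downstream)."""
        # Observe: None means the sensor sent nothing this tick.
        if value is None:
            # Synthesize: use the learned mean as a surrogate value.
            return ("synthetic", self.mean)
        # Validate: distance from the learned norm, in standard deviations.
        z = abs(value - self.mean) / self.var ** 0.5
        # Decide: accept, flag for review, or discard via the thresholds.
        if z > self.discard_z:
            return ("discard", self.mean)   # replaced by a surrogate
        if z > self.accept_z:
            return ("flag", value)          # passed on, but marked for review
        # Learn: only accepted readings update the norm (EWMA update).
        diff = value - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return ("accept", value)
```

The following sequence starts from `IntegrityAgent(mean=20.0, var=1.0)`:

- A reading of 20.5 is accepted and nudges the mean to 20.05.
- A missing sample then returns 20.05 as a synthetic value.
- A reading of 23.0 is flagged.
- A reading of 30.0 is discarded and replaced by the surrogate.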

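This loop lets systems do more than react to data problems. They can catch emerging issues, such as a slowly drifting sensor, before those issues corrupt downstream decisions. As long as the agents keep learning from verified data, the longer they run, the better they get at telling what's real from what's not.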

Real-World Applications

The applications span every industry that depends on sensor networks.

Smart manufacturing facilities use synthetic sensing to detect faulty machine sensors before they cause downtime. When a sensor fails, surrogate telemetry keeps production lines running while maintenance teams respond. The factory stays online, and the problem gets fixed in the background.
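A common fault signature for machine sensors is a value that freezes while the process it measures keeps changing. As an assumed, minimal example, the check below flags a sensor whose last `window` readings stay within a tiny tolerance. It only makes sense for signals that normally vary, and the window and tolerance are placeholders.

```python
def is_stuck(values, window=10, tolerance=1e-6):
    """Return True if the last `window` readings barely change, a typical
    sign of a frozen or disconnected transducer."""
    if len(values) < window:
        return False              # not enough history to judge
    recent = values[-window:]
    return max(recent) - min(recent) <= tolerance
```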

Energy management systems use predictive agents to fill data gaps in distributed grids. Solar installations, wind farms, and battery storage systems don't always report reliably, especially in remote locations. Synthetic sensing estimates their output when direct measurements aren't available, keeping grid management smooth.
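For a solar installation that stops reporting, a rough surrogate is to scale the output of nearby reporting installations by installed capacity. The sketch below assumes the neighbors see similar irradiance and have comparable panel orientation and condition, which will not always hold. The figures in the example are hypothetical.

```python
def estimate_missing_output(missing_capacity_kw, neighbors):
    """neighbors: list of (capacity_kw, current_output_kw) for nearby
    installations that are still reporting. Output is assumed to scale
    with installed capacity under shared weather conditions."""
    total_cap = sum(cap for cap, _ in neighbors)
    total_out = sum(out for _, out in neighbors)
    if total_cap == 0:
        raise ValueError("no reporting neighbors with known capacity")
    return missing_capacity_kw * total_out / total_cap
```

Suppose neighbors rated 100 kW and 200 kW are currently producing 60 kW and 130 kW. That gives an estimate of 95 kW for a silent 150 kW installation.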

Supply chain and logistics operations triangulate data from GPS, environmental sensors, and shipment logs to detect tampering or route anomalies. If a container's location doesn't match its reported temperature, humidity, and timing data, that's a red flag for theft, damage, or fraud.
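One of these consistency checks, location against timing, can be sketched by computing the speed implied by consecutive GPS fixes. Hops that no truck or vessel could make are flagged. The function below uses the haversine great-circle distance. The speed ceiling, the hours-based timestamps, and the sample fixes are assumptions. Checks against temperature and humidity would sit alongside this one.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def implausible_hops(fixes, max_kmh=120.0):
    """fixes: list of (timestamp_h, lat, lon) in reported order.
    Flag consecutive pairs whose implied speed exceeds `max_kmh`,
    or whose timestamps fail to advance."""
    flagged = []
    for (t1, la1, lo1), (t2, la2, lo2) in zip(fixes, fixes[1:]):
        dt = t2 - t1
        if dt <= 0 or haversine_km(la1, lo1, la2, lo2) / dt > max_kmh:
            flagged.append((t1, t2))
    return flagged
```

Consider fixes at (0 h, 0.0°, 0.0°), (1 h, 0.5°, 0.0°) and (2 h, 5.0°, 0.0°). The first hop implies about 56 km/h and passes. The second implies roughly 500 km/h and is flagged.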

Healthcare and wearables generate surrogate readings when device sensors are occluded or malfunctioning. A fitness tracker might lose contact with the skin, but contextual data from movement patterns and historical readings can estimate heart rate until proper contact resumes.
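The following is a deliberately simple, hypothetical illustration of combining movement and history. The function estimates heart rate during a contact dropout as the median of past readings taken at a similar step cadence. Real wearable algorithms are considerably more sophisticated, and the bucket width here is an arbitrary assumption.

```python
from statistics import median

def surrogate_heart_rate(history, cadence_spm, bucket_width=20):
    """history: list of (step_cadence_spm, heart_rate_bpm) recorded with good
    skin contact. Return the median heart rate seen at a similar cadence,
    or None when there is no comparable history."""
    bucket = cadence_spm // bucket_width
    similar = [hr for cad, hr in history if cad // bucket_width == bucket]
    return median(similar) if similar else None
```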

Challenges and Considerations

This technology isn't without complications.

Trust and transparency matter enormously. Users need to know which data is real and which is synthetic. Systems must clearly mark inferred values and communicate uncertainty levels. Making decisions based on synthetic data without knowing it carries real risks.

Model drift poses another challenge. Synthetic data models are only as good as their training. As systems change and environments evolve, these models need retraining. Otherwise, errors compound and synthetic data diverges further from reality.
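Drift can be watched for directly when a failed sensor comes back online, by comparing what the surrogate model predicted with what the sensor actually reports. The sketch below keeps a rolling mean absolute error over the most recent comparisons and signals retraining when it passes a threshold. The window size and threshold are assumptions that would depend on the signal's units and tolerance.

```python
from collections import deque

class DriftMonitor:
    """Track surrogate-vs-real error and signal when retraining is due."""

    def __init__(self, window=50, max_mae=0.5):
        self.errors = deque(maxlen=window)  # only the most recent comparisons
        self.max_mae = max_mae

    def record(self, predicted, actual):
        self.errors.append(abs(predicted - actual))

    def needs_retraining(self):
        if not self.errors:
            return False
        return sum(self.errors) / len(self.errors) > self.max_mae
```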

Ethics come into play as well. Synthetic data should augment truth, not obscure it. There's a temptation to smooth over problems rather than address them, to hide gaps rather than acknowledge them. Responsible deployment means using surrogate data to maintain operations temporarily while fixing the underlying issues.

Regulatory landscapes are still emerging. Standards for sensor integrity and AI-generated surrogate data don't yet exist in most industries. Organizations deploying these systems today are writing the rules as they go, which creates both opportunity and risk.

The Future: Self-Healing Data Ecosystems

Look ahead five years, and the vision becomes clearer. Agentic AI acts as a digital immune system for IoT networks. Just as your body detects and responds to threats automatically, sensor networks will detect and respond to data integrity issues without human intervention.

Network-level collaboration takes this further. Agents across different organizations and systems could share intelligence about sensor reliability, building collective knowledge about what normal looks like and what patterns indicate problems. A compromised sensor in one factory could trigger alerts in similar facilities around the world.

Integration with blockchain or provenance systems adds another layer, creating verifiable data lineage. Every data point carries a history: where it came from, whether it's real or synthetic, what transformations it underwent, how confident we should be in its accuracy.
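A minimal form of verifiable lineage is a hash chain, in which each record stores the hash of the previous one. Altering any earlier record then breaks verification. The sketch below is only that: it has no signatures, distributed consensus, or replication, which a blockchain or production provenance system would add. The record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(record, prev_hash):
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_record(chain, record):
    """Append a dict record, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    entry = {"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)}
    chain.append(entry)
    return entry

def verify(chain):
    """Recompute every link; any edited record or broken link fails."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

Each record can carry fields such as `"synthetic": True` and a confidence value. The lineage then shows not only where a data point came from but whether it was measured or inferred.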

The endpoint is a fully adaptive, self-auditing sensor network: one that doesn't just collect data but actively maintains its integrity, healing itself when problems arise and learning continuously from every incident.

Conclusion

Synthetic sensing turns fragility into resilience. It transforms the weakness of physical sensors into an opportunity for intelligent systems to do what they do best: find patterns, make inferences, and fill in the blanks.

In the agentic enterprise, data doesn't just flow. It thinks for itself. It validates itself. It heals itself when damaged and flags itself when suspicious. The sensors we depend on become more reliable not because the hardware gets better, but because the intelligence surrounding them gets smarter.

The technology exists today. The question is whether your organization will experiment with it before the next outage or attack exposes your blind spots, or after. Smart money is on before.

Start small. Pick one critical system where sensor reliability matters. Deploy agentic validation. Learn what works. Then scale. The alternative is waiting until your sensors lie when it matters most, and by then, it's too late.