The Dual Maturity Framework: Bridging the Gap Between Organizational Readiness and AI Autonomy

“The most common reason AI initiatives fail is not bad technology or insufficient data. It is a mismatch between how autonomous the AI system is designed to be and how prepared the organization is to support that level of autonomy. The Dual Maturity Framework gives leaders a practical diagnostic: assess both dimensions, align them deliberately, and advance them in concert.”

Why One-Dimensional AI Strategy Fails

The conversation around enterprise AI has shifted. For several years, the focus was on generative AI: systems that could summarize documents, draft emails, write code, and answer questions when prompted. These tools delivered real value, but they shared a common limitation. They waited for a human to ask before they did anything. The emerging generation of agentic AI changes that equation entirely. Agentic systems do not just answer; they execute. They plan multi-step workflows, make decisions within defined parameters, coordinate with other systems, and carry out complex tasks with minimal or no human intervention.

This shift from AI-as-assistant to AI-as-worker introduces a new category of strategic risk. When AI only responded to prompts, the consequences of organizational unreadiness were limited to poor adoption or underwhelming productivity gains. When AI acts autonomously, the consequences of unreadiness escalate dramatically: compliance violations, data integrity failures, uncontrolled decision-making, and erosion of stakeholder trust.

Yet many organizations continue to approach agentic AI strategy through a single lens. Some focus exclusively on the technology, racing to deploy the most autonomous agents available. Others fixate on organizational readiness, building governance frameworks and data strategies without a clear picture of what those foundations need to support. Both approaches miss the critical insight: effective agentic AI deployment requires dual maturity, a deliberate alignment between what the technology can do and what the organization can actually absorb.

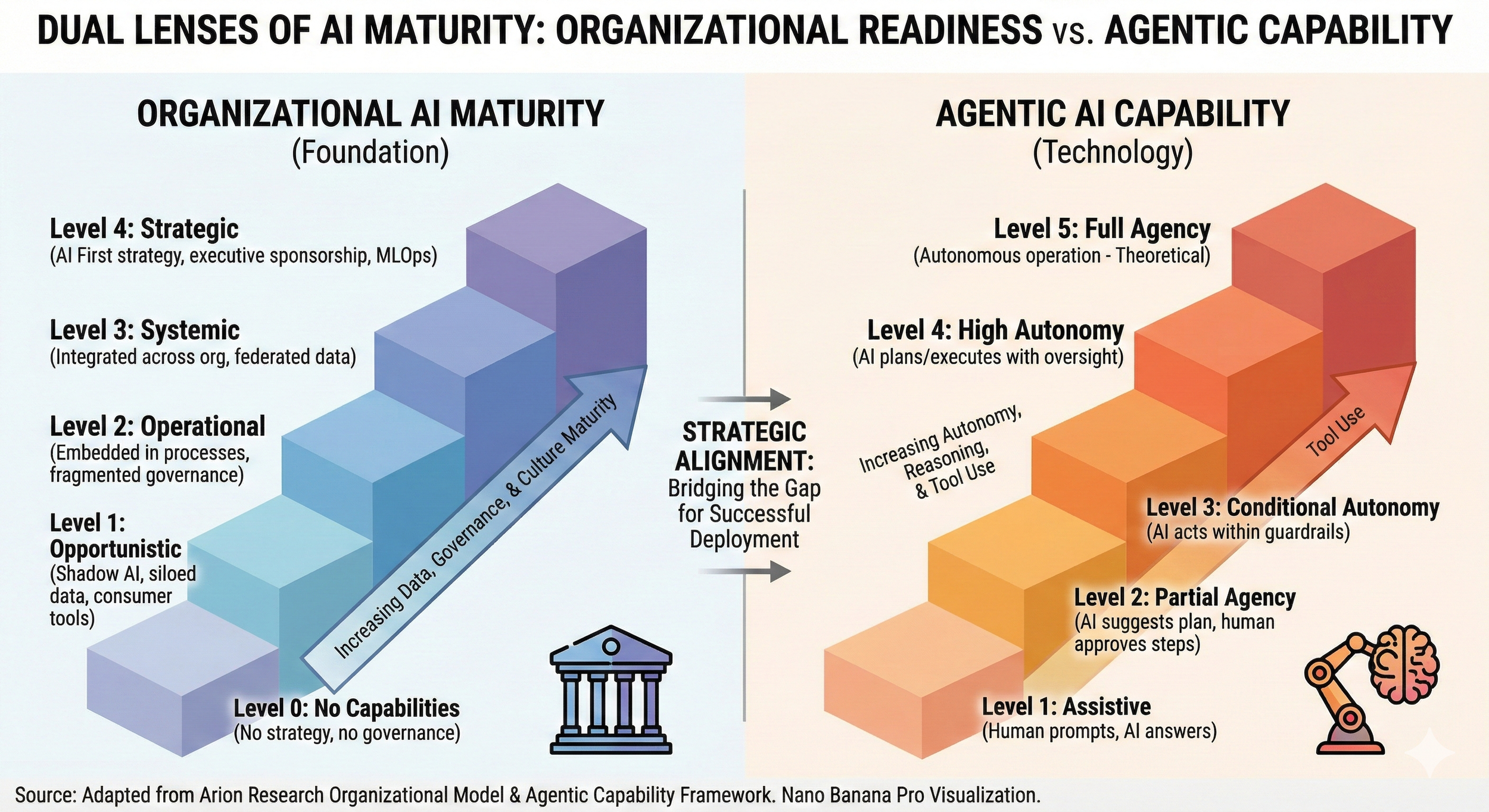

The Dual Maturity Framework, introduced in the Senior Executive Guide to AI (Arion Research, 2026), provides a structured approach to this alignment challenge. It evaluates two distinct but interdependent dimensions: Organizational AI Maturity and Agentic AI Capability. When the two are aligned, organizations deploy AI that delivers value reliably and scales safely. When they are misaligned, even the most promising AI initiatives stall or fail.

Organizational AI Maturity: The Foundation

The first dimension of the framework asks a deceptively simple question: What level of AI autonomy can this organization actually handle?

This is not a technology question. It is an assessment of the organizational environment: the quality and accessibility of data, the maturity of governance structures, the depth of leadership commitment, the readiness of the workforce, and the adaptability of the culture. An organization might have access to cutting-edge AI models, but if its data lives in disconnected silos and its governance policies were written for a pre-AI world, that technology will underperform or cause harm.

The framework defines five levels of organizational AI maturity, each describing a distinct stage of readiness.

Level 0: No Capabilities

At this stage, the organization has no formal AI strategy, no governance framework, and no coordinated approach to data management for AI purposes. Data is locked inside operational silos, accessible only to the teams that created it. There is no executive sponsorship for AI initiatives, and the workforce has limited or no AI literacy. Organizations at Level 0 are not prepared for any form of autonomous AI deployment. Even basic AI-assisted tools will struggle without clean, accessible data and some minimal governance structure.

Level 1: Opportunistic

Level 1 organizations have begun experimenting with AI, but the efforts are uncoordinated. Individual teams or departments adopt AI tools on their own initiative, creating what is often called "Shadow AI," a pattern of informal, unsanctioned experimentation. There are no formal AI policies, no centralized oversight, and no consistent approach to data preparation. The experiments may produce localized wins, but they also create risk: ungoverned models making decisions with unvetted data, potential compliance exposures, and duplicated effort across teams.

Level 2: Operational

At Level 2, the organization has moved from ad hoc experimentation to deliberate deployment. AI tools are being used for defined productivity purposes, such as document summarization, customer inquiry routing, or report generation. There is some governance in place, though it tends to be fragmented, with different business units applying different standards. Data quality has improved in the areas where AI is deployed, but enterprise-wide data strategy remains incomplete. Level 2 organizations have demonstrated that AI can work within their environment, but the infrastructure and policies are not yet mature enough to support agents that operate across organizational boundaries.

Level 3: Systemic

Level 3 marks a significant inflection point. AI is no longer confined to individual departments or specific use cases. Instead, it is integrated across organizational boundaries, with agents operating in workflows that span multiple functions. This requires a federated data strategy, where data is governed consistently but accessible across the enterprise. Governance frameworks are more comprehensive, with clear policies around AI decision-making authority, escalation protocols, and monitoring. Cross-functional teams manage AI deployments collaboratively, and the organization has invested in AI literacy across the workforce, not just within technical teams.

Level 4: Strategic

At the highest maturity level, the organization operates with what the framework calls an "AI First" mindset. AI is not a supplement to existing processes; it is a core component of how the organization designs work. Governance is embedded into the AI development lifecycle rather than applied as an afterthought. Executive sponsorship is active and informed, with leadership making resource allocation decisions based on a clear understanding of AI capabilities and limitations. The data infrastructure supports real-time, enterprise-wide access with robust quality controls. The workforce is skilled in collaborating with AI systems, and the culture embraces continuous adaptation. Level 4 organizations are prepared to deploy highly autonomous agents because the organizational scaffolding to support them, monitor them, and intervene when necessary is already in place.

Agentic AI Capability: The Technology Dimension

The second dimension of the framework assesses the technology itself: how much autonomy does the AI system exercise? Not all agentic AI is created equal. The term "agent" encompasses a wide spectrum, from simple assistants that respond to direct commands to sophisticated systems capable of extended independent operation. Understanding where a given AI system falls on this spectrum is essential for matching it to the right organizational context.

The framework defines five levels of agentic AI capability, each describing a distinct degree of autonomous action.

Level 1: Assistive

At the assistive level, the AI system responds to direct human prompts and provides single-turn outputs. It does not take autonomous action. A user asks a question and receives an answer. A user requests a summary and gets one. There is no independent planning, no multi-step execution, and no persistent context between interactions. This is the level at which most current generative AI tools operate. The human remains fully in control of every interaction, and the AI introduces no autonomous decision-making risk.

Level 2: Partial Agency

At Level 2, the AI begins to exhibit agency in a limited, tightly supervised way. It can analyze a situation and propose a plan of action, but a human must approve every individual step before the system proceeds. For example, an AI agent might review a customer support queue, categorize incoming tickets by urgency, and propose a routing plan, but a human operator must confirm each routing decision before it executes. The AI adds value through analysis and recommendation, but the human retains decision authority at every stage.
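The approve-every-step pattern described above can be sketched in a few lines of Python. This is a minimal illustration rather than anything the framework prescribes: `ProposedStep`, `run_with_approval`, and the `approve` callback are hypothetical names, and the callback stands in for whatever review interface a human operator would actually use.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class ProposedStep:
    """One action the agent proposes, e.g. routing a single ticket."""
    ticket_id: str
    queue: str


def run_with_approval(
    plan: List[ProposedStep],
    approve: Callable[[ProposedStep], bool],
) -> List[ProposedStep]:
    """Execute only the steps a human explicitly approves.

    The agent proposes the whole plan, but nothing runs until the
    `approve` callback (a stand-in for a human reviewer) returns True
    for that individual step.
    """
    executed = []
    for step in plan:
        if approve(step):
            # Placeholder for the real routing action (hypothetical).
            executed.append(step)
    return executed


# Illustrative plan produced by the agent (made-up ticket data).
plan = [
    ProposedStep("T-1", "urgent"),
    ProposedStep("T-2", "billing"),
    ProposedStep("T-3", "urgent"),
]

# Simulated reviewer who rejects the proposed routing for T-2.
executed = run_with_approval(plan, approve=lambda s: s.ticket_id != "T-2")
```

The design point is that the approval check sits inside the loop, so decision authority stays with the human at every step rather than at the level of the plan as a whole.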

Level 3: Conditional Autonomy

Level 3 is where the shift toward genuine autonomy becomes tangible. The AI operates independently within defined guardrails, executing tasks and making decisions on its own as long as conditions remain within established parameters. When the system encounters a situation that falls outside those boundaries, it escalates to a human decision-maker. Consider an AI agent managing procurement approvals: it might autonomously approve purchase orders below a defined threshold, from pre-approved vendors, for standard materials, but escalate any request that exceeds the threshold or involves a new vendor. The guardrails define the space in which the agent can act freely, and the escalation protocols define what happens when a request falls outside that space.
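The procurement example can be made concrete with a short Python sketch. It is illustrative only: the threshold, vendor names, and material categories are invented values, and `PurchaseOrder`, `Decision`, and `review` are hypothetical names rather than part of the framework or any procurement product.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class PurchaseOrder:
    vendor: str
    amount: float
    category: str


# Hypothetical guardrail values, chosen only for illustration.
APPROVAL_THRESHOLD = 5_000.00
APPROVED_VENDORS = frozenset({"Acme Supply", "Northwind"})
STANDARD_CATEGORIES = frozenset({"office", "packaging"})


def review(order: PurchaseOrder) -> Decision:
    """Approve only when every guardrail holds; otherwise escalate.

    Assumption: "below a defined threshold" is read as strictly less
    than the threshold, so an order exactly at the limit escalates.
    """
    within_guardrails = (
        order.amount < APPROVAL_THRESHOLD
        and order.vendor in APPROVED_VENDORS
        and order.category in STANDARD_CATEGORIES
    )
    return Decision.APPROVE if within_guardrails else Decision.ESCALATE


routine = review(PurchaseOrder("Acme Supply", 1_200.00, "office"))
too_large = review(PurchaseOrder("Acme Supply", 9_800.00, "office"))
new_vendor = review(PurchaseOrder("Unknown Traders", 300.00, "office"))
```

Note that escalation is the default outcome: the agent acts alone only when all conditions are met, so any case the guardrails did not anticipate goes to a human.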

Level 4: High Autonomy

At Level 4, the AI executes complex, multi-step workflows with minimal human intervention. It can coordinate across systems, adapt its approach based on changing conditions, and handle exceptions within broad operational parameters. Human oversight shifts from real-time supervision to periodic audits and performance reviews. An AI system operating at this level might manage an entire order-to-cash process: receiving orders, checking inventory, coordinating with logistics, generating invoices, and handling routine exceptions, with humans reviewing performance dashboards and intervening only for strategic decisions or unusual situations. This level requires sophisticated monitoring infrastructure because the organization must be able to detect problems that the human operators are no longer watching for in real time.
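One simple form the monitoring described above could take is a periodic check on the agent's action log. The Python sketch below is an assumption-laden illustration, not a recommended design: `ActionRecord`, `needs_review`, and the 2% failure-rate limit are all hypothetical, and a real deployment would likely track many more signals.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ActionRecord:
    workflow: str  # e.g. "order-to-cash"
    step: str      # e.g. "generate_invoice"
    ok: bool       # False if the step failed or needed a manual fix


def needs_review(
    records: Iterable[ActionRecord],
    max_failure_rate: float = 0.02,  # hypothetical limit, for illustration
) -> bool:
    """Return True when the failure rate in an audit window exceeds the limit."""
    batch = list(records)
    if not batch:
        return False  # nothing happened in this window, nothing to flag
    failures = sum(1 for record in batch if not record.ok)
    return failures / len(batch) > max_failure_rate


# Two made-up audit windows of 100 actions each.
quiet_window = [
    ActionRecord("order-to-cash", "generate_invoice", ok=(i != 0))
    for i in range(100)
]  # 1 failure in 100 actions
noisy_window = [
    ActionRecord("order-to-cash", "generate_invoice", ok=(i >= 5))
    for i in range(100)
]  # 5 failures in 100 actions

quiet_flag = needs_review(quiet_window)
noisy_flag = needs_review(noisy_window)
```

A check like this runs on a schedule instead of relying on someone watching each action, which is the shift from real-time supervision to periodic audit that this level implies.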

Level 5: Full Agency

Level 5 describes AI systems capable of extended autonomous operation and self-directed goal-setting. At this level, the AI does not just execute predefined workflows; it identifies opportunities, formulates objectives, and pursues them independently over extended time horizons. Level 5 is, for now, largely aspirational. While research is advancing toward these capabilities, few production systems operate at this level, and the governance, trust, and verification frameworks needed to support full agency in enterprise environments are still developing. The framework includes this level to provide a complete picture of the autonomy spectrum and to help organizations plan for where the technology is heading.

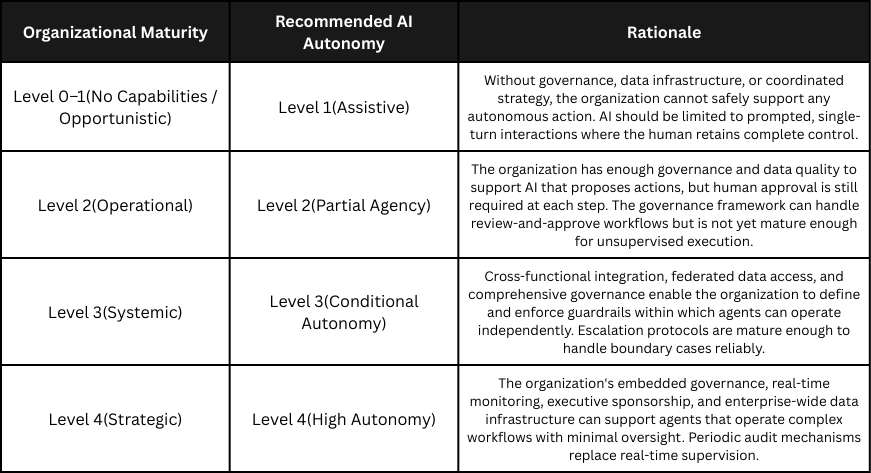

Strategic Alignment: The Matching Matrix

The core value of the Dual Maturity Framework lies not in the individual assessments but in the alignment between them. The Matching Matrix maps organizational maturity levels to appropriate autonomy levels, providing a practical guide for deployment decisions.

The alignment logic is straightforward: the autonomy level of the AI should not exceed the maturity level of the organization deploying it. An organization's ability to govern, monitor, and support an autonomous agent must be commensurate with the degree of independence that agent exercises.

The matrix makes an important point explicit: there is no recommended pairing for Level 5 autonomy. Full agency, with self-directed goal-setting and extended independent operation, requires a level of organizational trust, verification infrastructure, and governance sophistication that does not yet exist at scale in enterprise environments. This is not a failure of ambition; it is an honest assessment of where both technology and organizational practice stand today.
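The alignment rule can be expressed as a small Python check. This is a sketch under stated assumptions, not the Matching Matrix itself: the enum and function names are hypothetical, and it assumes the level numbers on the two scales can be compared directly, with equal numbers treated as aligned. The source describes undershooting as operating well below what the organization can support, so a small shortfall may be perfectly reasonable in practice even though this sketch labels any shortfall the same way.

```python
from enum import Enum, IntEnum


class OrgMaturity(IntEnum):
    NO_CAPABILITIES = 0
    OPPORTUNISTIC = 1
    OPERATIONAL = 2
    SYSTEMIC = 3
    STRATEGIC = 4


class Autonomy(IntEnum):
    ASSISTIVE = 1
    PARTIAL_AGENCY = 2
    CONDITIONAL_AUTONOMY = 3
    HIGH_AUTONOMY = 4
    FULL_AGENCY = 5


class Alignment(Enum):
    ALIGNED = "aligned"
    OVERSHOOTING = "overshooting"
    UNDERSHOOTING = "undershooting"


def assess(org: OrgMaturity, agent: Autonomy) -> Alignment:
    """Compare agent autonomy against organizational maturity.

    Assumption: level numbers are directly comparable across the two
    scales, and equal numbers count as aligned.
    """
    gap = int(agent) - int(org)
    if gap > 0:
        return Alignment.OVERSHOOTING   # autonomy exceeds readiness
    if gap < 0:
        return Alignment.UNDERSHOOTING  # readiness exceeds autonomy
    return Alignment.ALIGNED


risky = assess(OrgMaturity.OPPORTUNISTIC, Autonomy.HIGH_AUTONOMY)
matched = assess(OrgMaturity.SYSTEMIC, Autonomy.CONDITIONAL_AUTONOMY)
no_pairing = assess(OrgMaturity.STRATEGIC, Autonomy.FULL_AGENCY)
```

Because organizational maturity tops out at Level 4, Level 5 autonomy always exceeds it under this comparison, which mirrors the absence of a recommended pairing for full agency. Likewise, a Level 0 organization is flagged for every autonomy level, consistent with the statement that it is not prepared for any autonomous deployment.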

The Consequences of Misalignment

Understanding the alignment framework also means understanding what happens when alignment breaks down. The framework identifies two distinct failure modes, each with different risk profiles and organizational consequences.

Overshooting: The High-Risk Zone

Overshooting occurs when an organization deploys AI agents with autonomy levels that exceed its organizational maturity. The classic example is a Level 1 organization, one with uncoordinated experimentation, no formal governance, and siloed data, deploying Level 4 agents that execute complex workflows with minimal human oversight.

The consequences are predictable and severe. Without mature governance, the agents operate without clear boundaries, making decisions that no one has defined the authority for. Without integrated data infrastructure, the agents work with incomplete or inconsistent information, producing outputs that appear confident but are built on unreliable foundations. Without monitoring infrastructure, problems compound before anyone detects them.

Overshooting failures tend to be dramatic and visible: a compliance violation triggered by an unsupervised agent, a customer-facing decision made with bad data, a cascade of automated actions that no one can explain or reverse. These failures erode trust, both internally and externally, and often lead to reactive policy responses that overcorrect, shutting down AI initiatives entirely rather than recalibrating them.

Undershooting: The Lost-Value Zone

Undershooting is the opposite pattern: a mature organization deploying AI with far less autonomy than it is equipped to support. A Level 4 organization, one with embedded governance, enterprise-wide data infrastructure, and active executive sponsorship, that deploys only Level 1 assistive tools is leaving enormous value on the table.

Undershooting failures are less dramatic but equally damaging over time. The organization has invested in building the infrastructure, governance, and culture to support autonomous operations, but it is not capturing the return on that investment. Competitors who have achieved similar maturity but deployed more autonomous agents gain efficiency, speed, and scale advantages. Knowledge workers remain burdened with tasks that agents could handle, reducing capacity for higher-value work. The organization's AI investment yields incremental productivity improvements rather than the operational transformation it was designed to enable.

Undershooting is particularly insidious because it does not produce visible crises. Instead, it manifests as a slow erosion of competitive position, a gap between what the organization could achieve and what it actually delivers. By the time the gap becomes apparent, the window for catching up may have narrowed considerably.

A Multi-Year Journey: Building Dual Maturity

The Dual Maturity Framework is not a one-time assessment. It is a strategic planning tool for what will inevitably be a multi-year journey. Organizational maturity cannot be accelerated past certain thresholds any more than a building's foundation can be poured and cured in an afternoon. Moving from Level 1 to Level 3 organizational maturity typically requires 18 to 36 months of sustained investment in data infrastructure, governance frameworks, workforce development, and cultural change.

The temptation to skip steps is real, especially when competitive pressure intensifies or when a particularly compelling AI capability becomes available. But the framework's central lesson holds: deploying autonomy that outpaces organizational readiness does not accelerate progress. It creates risk, erodes trust, and often forces organizations to retreat to lower autonomy levels to recover.

The most effective approach is to advance both dimensions in concert. As the organization builds data infrastructure, it deploys agents that can use that data within appropriate governance boundaries. As governance matures, the autonomy of those agents expands to match. As the workforce develops AI collaboration skills, the agents take on more complex tasks that leverage those skills.

This coordinated progression moves the organization from using AI as a collection of digital tools to building what can genuinely be called a digital workforce: AI agents that operate as trusted participants in organizational workflows, governed by mature policies, monitored by robust infrastructure, and supported by a culture that understands both the potential and the limitations of autonomous systems.

The destination is not a specific maturity level. It is the ongoing discipline of alignment itself: continuously assessing both dimensions, adjusting deployment decisions as both the technology and the organization evolve, and maintaining the honest self-awareness to recognize when ambition is outpacing readiness. Organizations that build this discipline will not just deploy agentic AI successfully. They will build the adaptive capacity to absorb whatever the next wave of AI capability demands.