Principles of Agentic AI Governance in 2025: Key Frameworks and Why They Matter Now

The year 2025 marks a critical transition from AI systems that merely assist to those that act with differing levels of autonomy. Across industries, organizations are deploying AI agents capable of making complex decisions without direct human intervention, executing multi-step plans, and collaborating with other agents in sophisticated networks.

This shift from assistive to agentic AI brings with it a new level of capability and complexity. Unlike traditional machine learning systems that operate within narrow, predictable parameters, today's AI agents demonstrate dynamic tool use, adaptive reasoning, and the ability to navigate ambiguous situations with minimal guidance. They're managing supply chains, conducting financial trades, coordinating healthcare protocols, and making decisions that ripple through entire organizations.

The regulatory landscape is evolving rapidly to keep pace. The EU AI Act, in force since August 2024, is phasing in risk-based obligations from 2025 onward, while the United States continues to rely largely on sector-specific guidance in areas such as finance, healthcare, and defense. Meanwhile, international standards bodies like ISO/IEC are publishing governance standards, such as ISO/IEC 42001 for AI management systems, that will shape how organizations worldwide approach AI deployment.

The urgency is clear: organizations that establish robust governance frameworks now will gain significant competitive advantages, while those that delay face mounting risks and potential regulatory penalties.

Understanding Agentic AI in 2025

Defining the New Generation

Agentic AI systems differ substantially from their predecessors. Where traditional AI focuses on pattern recognition or single-task automation, agentic AI can operate with substantial autonomy. These systems can execute complex tasks end-to-end, develop multi-step plans to achieve objectives, and adapt their approaches based on changing circumstances.

Its defining capabilities include autonomous task execution without constant human oversight, planning and reasoning that span multiple decision points, and dynamic tool use that lets agents select and employ different resources as situations demand. Together, these capabilities let AI agents operate more like autonomous team members than simple software tools.

The Governance Challenge

This new level of autonomy creates unprecedented governance challenges. Decision-making chains in agentic systems often involve dozens or hundreds of micro-decisions, making it difficult to understand how agents arrive at their conclusions. When multiple agents collaborate, the complexity compounds further as they negotiate, delegate, and coordinate actions in ways that can be opaque even to their creators.

The risk surface has expanded dramatically. Beyond traditional concerns about algorithmic bias or data privacy, agentic AI introduces new cybersecurity risks (for example, prompt injection hidden in the documents and tool outputs an agent reads), sophisticated misinformation campaigns, and potential misuse of autonomous capabilities. These systems can compound errors rapidly and in unexpected ways, making robust governance not just advisable but essential.

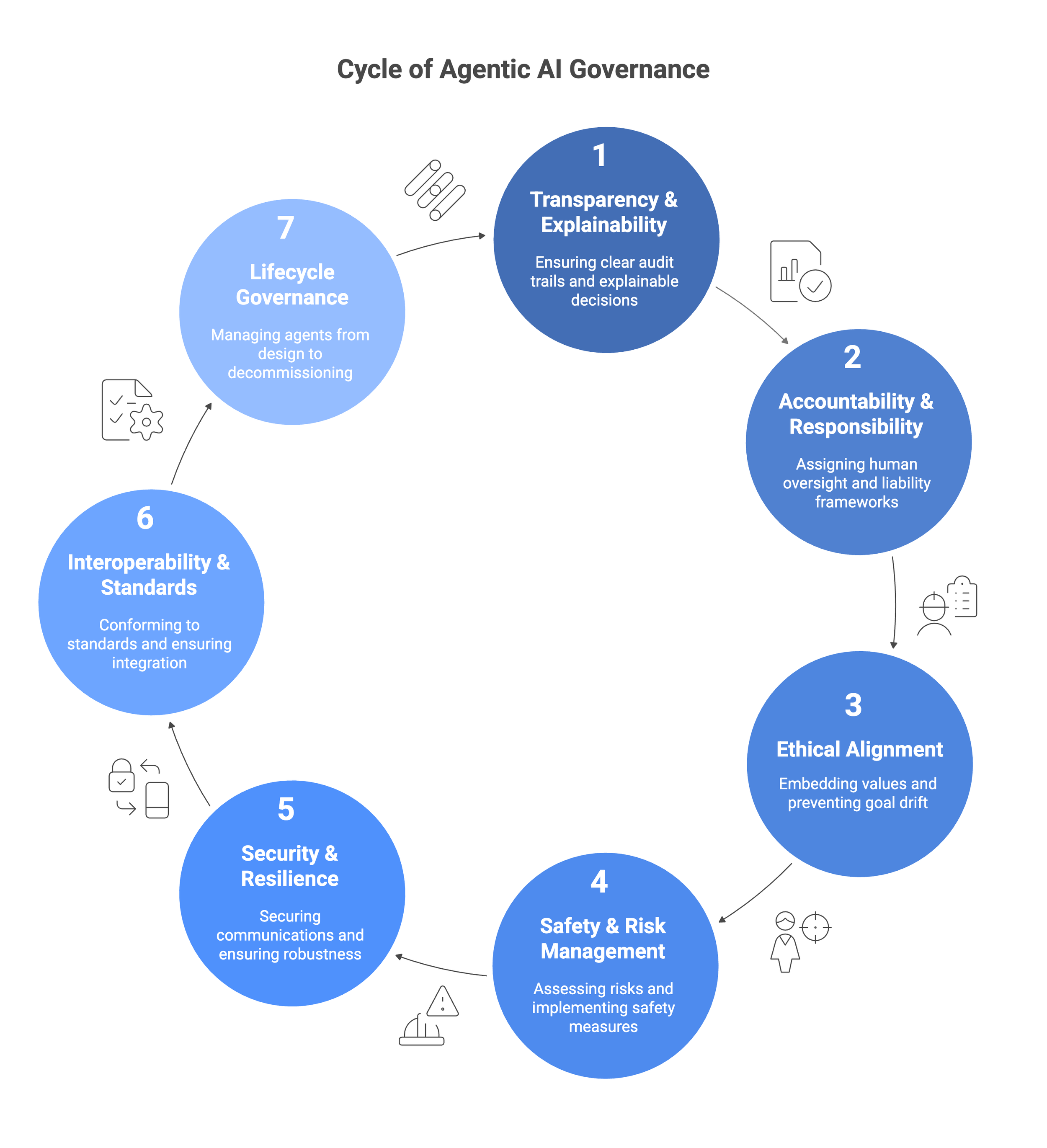

Core Principles of Agentic AI Governance

1. Transparency and Explainability

Modern AI governance demands clear visibility into agent decision-making processes. Organizations must establish comprehensive audit trails that document not just what agents did, but why they made specific choices. This requires new approaches to explainable AI that can handle the complexity of multi-step planning and inter-agent communication.

Practical implementations include detailed model cards for each agent that document capabilities, limitations, and decision-making frameworks. Decision provenance logs should track the reasoning chain for significant actions, while monitoring systems must capture inter-agent communications and collaborative decision points. These transparency measures build trust and enable meaningful oversight.
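Decision provenance logs are most useful for audit when tampering can be detected. The sketch below assumes a single-process Python service; the class and field names are hypothetical. Each entry records the agent's action, its stated rationale, and its inputs, plus the SHA-256 hash of the previous entry, so editing any past record breaks the chain. Hash chaining alone does not detect deletion of the newest entries; that would require storing the latest hash somewhere the agent cannot write.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLog:
    """Append-only decision log; each entry commits to the hash of the previous one."""
    entries: list = field(default_factory=list)

    def append(self, agent_id: str, action: str, rationale: str, inputs: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {
            "agent_id": agent_id,
            "action": action,
            "rationale": rationale,
            "inputs": inputs,
            "timestamp": time.time(),
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding (sorted keys) so the digest is reproducible.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        record["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash and check each link; False means the log was altered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

For example, after appending two decisions, `log.verify()` returns `True`; changing the `rationale` of the first entry afterwards makes it return `False`.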

2. Accountability and Responsibility Mapping

Autonomous operations require clear assignment of human oversight roles and responsibility. Organizations must establish frameworks that define when humans should intervene, who has authority to override agent decisions, and how liability applies when agents make errors or cause harm.

Effective accountability structures include designated human supervisors for critical agent operations, clear escalation protocols for exceptional situations, and well-defined liability frameworks that account for the autonomous nature of agent actions. These frameworks must balance the efficiency benefits of autonomy with the need for meaningful human oversight.
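An escalation protocol can be made explicit as a routing rule that decides, for each proposed action, whether the agent may proceed or must hand off to a named human supervisor. The sketch below assumes two hypothetical criteria, a risk tier and a monetary limit; the thresholds, class names, and supervisor address are placeholders that an organization would set from its own risk appetite.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Action:
    name: str
    risk: Risk
    amount_usd: float = 0.0


@dataclass
class EscalationPolicy:
    # Hypothetical limits on what the agent may do without human sign-off.
    max_autonomous_risk: Risk = Risk.LOW
    max_autonomous_amount_usd: float = 10_000.0
    supervisor: str = "ops-supervisor@example.com"

    def route(self, action: Action) -> str:
        """Return 'execute' or 'escalate:<supervisor>' for a proposed action."""
        if action.risk > self.max_autonomous_risk:
            return f"escalate:{self.supervisor}"
        if action.amount_usd > self.max_autonomous_amount_usd:
            return f"escalate:{self.supervisor}"
        return "execute"


policy = EscalationPolicy()
print(policy.route(Action("reorder_stock", Risk.LOW, 2_500)))    # execute
print(policy.route(Action("reorder_stock", Risk.LOW, 50_000)))   # escalate:ops-supervisor@example.com
```

Keeping the rule in one place makes the answer to "who can override this agent, and when?" reviewable rather than buried in prompts.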

3. Ethical Alignment and Value Embedding

AI agents must operate within ethical constraints that reflect human values and societal norms. This goes beyond simple rule-following to include nuanced understanding of context, stakeholder impacts, and competing priorities. Organizations must actively embed ethical considerations into agent objectives and continuously monitor for goal drift or unintended behaviors.

Implementation requires comprehensive alignment testing before deployment, ongoing monitoring for value drift, and mechanisms to update ethical constraints as situations evolve. Agents should be designed to recognize ethical dilemmas and escalate decisions when facing conflicting values or unclear ethical implications.
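Value drift is hard to observe directly. One measurable proxy, assumed here rather than taken from any standard, is a shift in the mix of actions an agent takes: an agent that stops escalating cases it used to escalate deserves a review. The sketch compares a baseline window with a recent window using total variation distance; the function names and the 0.2 alert threshold are hypothetical.

```python
from collections import Counter


def action_distribution(actions: list) -> dict:
    """Fraction of actions per action type; empty input yields an empty distribution."""
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {action: n / total for action, n in counts.items()}


def total_variation(p: dict, q: dict) -> float:
    """Total variation distance: 0 for identical distributions, 1 for disjoint ones."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(baseline: list, recent: list, threshold: float = 0.2) -> tuple:
    """Return (distance, alert) comparing recent behavior with the baseline window."""
    distance = total_variation(action_distribution(baseline), action_distribution(recent))
    return distance, distance > threshold


baseline = ["approve"] * 70 + ["escalate"] * 30
recent = ["approve"] * 95 + ["escalate"] * 5
distance, alert = drift_alert(baseline, recent)  # distance is about 0.25, so alert is True
```

A distribution shift is only a trigger for human review; it does not by itself show that the agent's values have changed.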

4. Safety and Risk Management

Dynamic environments require continuous risk assessment and adaptive safety measures. Traditional safety approaches that rely on static testing and predefined scenarios are insufficient for agents that must navigate novel situations and adapt their behavior over time.

Effective safety frameworks include continuous risk monitoring systems, sandboxed deployment environments for testing new capabilities, and robust fail-safe mechanisms including kill switches and behavior throttling. Organizations should implement staged deployment processes that gradually increase agent autonomy as safety confidence grows.
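A kill switch and behavior throttling can share a single guard that every agent action must pass through. This sketch combines a token bucket, a standard rate-limiting technique, which caps how many actions an agent can take over time, with a kill flag that blocks all further actions once set. The class name and parameters are hypothetical; the injectable clock exists only to make the behavior easy to test.

```python
import threading
import time


class ActionGovernor:
    """Gate for agent actions: a kill switch plus a token-bucket rate limit."""

    def __init__(self, rate_per_sec: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()
        self.killed = threading.Event()
        self.lock = threading.Lock()

    def kill(self) -> None:
        # Once set, every subsequent allow() call returns False.
        self.killed.set()

    def allow(self) -> bool:
        if self.killed.is_set():
            return False
        with self.lock:
            now = self.clock()
            # Refill tokens in proportion to elapsed time, capped at the burst size.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False


t = [0.0]
gov = ActionGovernor(rate_per_sec=1.0, burst=2, clock=lambda: t[0])
print([gov.allow() for _ in range(3)])  # [True, True, False]
```

Under a staged deployment, `rate_per_sec` and `burst` could start low and be raised as safety confidence grows, while the kill switch stays available at every stage.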

5. Security and Resilience

The interconnected nature of agentic AI systems creates new attack vectors and vulnerabilities. Securing communications between agents and protecting against adversarial manipulation requires sophisticated security architectures and constant vigilance.

Security measures must cover agent-to-tool connections made through protocols like the Model Context Protocol (MCP) as well as agent-to-agent communications, use robust encryption for sensitive data exchanges, and include adversarial testing to identify manipulation vulnerabilities. Disaster recovery protocols should account for scenarios where agents themselves may be compromised or unavailable.
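Protocols such as MCP and A2A come with their own transport-level security and authentication guidance, which should be the first line of defense. As a protocol-independent illustration of message integrity between agents, the sketch below signs each message with HMAC-SHA256 using a shared key, rejects messages older than a freshness window, and optionally rejects exact replays. The function names, message fields, and 30-second window are assumptions, and a real deployment would also need key distribution and rotation.

```python
import hashlib
import hmac
import json
import time


def _canonical(msg: dict) -> bytes:
    # Sorted-key JSON so sender and receiver hash identical bytes.
    return json.dumps(msg, sort_keys=True).encode("utf-8")


def sign_message(key: bytes, sender: str, body: dict, now=None) -> dict:
    msg = {"sender": sender, "body": body, "ts": now if now is not None else time.time()}
    msg["sig"] = hmac.new(key, _canonical(msg), hashlib.sha256).hexdigest()
    return msg


def verify_message(key: bytes, msg: dict, max_age_s: float = 30.0, now=None, seen=None) -> bool:
    now = now if now is not None else time.time()
    unsigned = {k: v for k, v in msg.items() if k != "sig"}
    expected = hmac.new(key, _canonical(unsigned), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, msg.get("sig", "")):
        return False
    if now - unsigned["ts"] > max_age_s:
        return False  # stale message: possible delayed replay
    if seen is not None:
        if msg["sig"] in seen:
            return False  # exact replay of a message already accepted
        seen.add(msg["sig"])
    return True


key = b"shared-secret"
m = sign_message(key, "planner-agent", {"task": "request_quote"}, now=100.0)
print(verify_message(key, m, now=105.0))  # True
```

Altering the body, presenting the message after the freshness window, or replaying it against the same `seen` set all cause verification to fail.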

6. Interoperability and Standards Compliance

As agentic AI systems proliferate, interoperability becomes crucial for both functionality and governance. Organizations need vendor-neutral approaches, such as the open Agent2Agent (A2A) protocol, that enable different agent systems to work together while preserving security and oversight capabilities.

This requires conformance to emerging open standards for agent communication and integration, participation in industry-wide governance initiatives, and alignment with both applicable regulations and the standards and frameworks published by bodies like IEEE, ISO/IEC, and NIST (for example, the NIST AI Risk Management Framework). Standards compliance should be viewed as an enabler of innovation rather than a constraint.

7. Lifecycle Governance

Effective governance spans the entire agent lifecycle, from initial design through eventual decommissioning. This comprehensive approach prevents gaps that could create security vulnerabilities or compliance issues.

Lifecycle governance includes robust change management processes for agent updates, learning cycle oversight to prevent undesirable behavioral drift, and clear decommissioning protocols that prevent orphaned autonomous processes from continuing to operate without oversight.
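One way to keep decommissioned agents from lingering is a lease: each agent must periodically renew a heartbeat with a central registry, and the registry refuses renewals for retired agents, so a well-behaved agent process shuts itself down. The sketch below uses hypothetical names and assumes agents honor a refused renewal. It also flags active agents whose lease has lapsed or whose registered owner is no longer active, the two cases most likely to leave an autonomous process without oversight.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    agent_id: str
    owner: str
    last_heartbeat: float
    decommissioned: bool = False


@dataclass
class LifecycleRegistry:
    """Hypothetical lease-based registry; agents are assumed to halt when renewal fails."""
    lease_s: float = 300.0
    agents: dict = field(default_factory=dict)

    def register(self, agent_id: str, owner: str, now: float) -> None:
        self.agents[agent_id] = AgentRecord(agent_id, owner, now)

    def heartbeat(self, agent_id: str, now: float) -> bool:
        rec = self.agents.get(agent_id)
        # Unknown or retired agents are refused; the agent must stop on False.
        if rec is None or rec.decommissioned:
            return False
        rec.last_heartbeat = now
        return True

    def decommission(self, agent_id: str) -> None:
        self.agents[agent_id].decommissioned = True

    def needs_review(self, now: float, active_owners: set) -> list:
        """Active agents with a lapsed lease or an owner who is no longer active."""
        return sorted(
            rec.agent_id
            for rec in self.agents.values()
            if not rec.decommissioned
            and (now - rec.last_heartbeat > self.lease_s or rec.owner not in active_owners)
        )
```

Running `needs_review` on a schedule turns "prevent orphaned processes" into a concrete, auditable check.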

Key Governance Frameworks Emerging in 2025

Regulatory-Driven Frameworks

Regulatory requirements are driving significant changes in how organizations approach AI governance. The EU AI Act takes a risk-based approach: AI systems classified as high-risk must operate under a risk management system, meet transparency and documentation obligations, and be designed for effective human oversight. These regulations are pushing organizations to develop more rigorous governance processes and documentation standards.

In the United States, sector-specific guidelines are emerging across industries. Financial services face requirements around algorithmic trading and automated decision-making, healthcare organizations must comply with patient safety and privacy regulations, and defense contractors are implementing security-focused governance frameworks. These sector-specific approaches allow for more tailored governance while maintaining consistent core principles.

Industry-Led Frameworks

Industry organizations are developing collaborative governance standards that go beyond regulatory minimums. The Partnership on AI has published guidelines specifically addressing agentic AI deployment, while IEEE is developing comprehensive ethics standards for autonomous systems. These industry-led initiatives often provide more practical guidance than regulatory frameworks.

Professional associations are creating certification programs for AI governance practitioners, establishing communities of practice around emerging challenges, and developing shared resources for common governance tasks. This collaborative approach helps organizations learn from each other's experiences and avoid duplicating effort.

Enterprise AI Governance Models

Forward-thinking organizations are establishing dedicated governance structures for agentic AI. Agent Governance Boards bring together technical experts, business leaders, and ethics specialists to oversee autonomous system deployment. These boards provide strategic guidance while ensuring that governance remains aligned with business objectives.

Hybrid governance models are emerging that combine traditional AI ethics councils with operational AI Ops teams. This approach ensures that governance principles translate into practical operational procedures while maintaining the strategic oversight necessary for complex autonomous systems.

Technical Protocol-Based Governance

Technical standards are enabling new approaches to governance through standardized protocols and interfaces. The Model Context Protocol (MCP) standardizes how agents connect to external tools and data sources, giving organizations a well-defined point at which to log and review those interactions for audit and oversight. Standardized agent capability registries help organizations understand what their agents can do and how they interact with other systems.
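A capability registry can also act as an enforcement point. The sketch below is a hypothetical, deny-by-default registry that maps each agent to the tools and scopes it has been granted. It is not part of MCP, though a gateway placed in front of MCP servers could consult something like it before forwarding a tool call.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Capability:
    tool: str
    scopes: frozenset


@dataclass
class CapabilityRegistry:
    grants: dict = field(default_factory=dict)  # agent_id -> {tool: Capability}

    def grant(self, agent_id: str, tool: str, scopes: set) -> None:
        self.grants.setdefault(agent_id, {})[tool] = Capability(tool, frozenset(scopes))

    def check(self, agent_id: str, tool: str, scope: str) -> bool:
        """Deny by default: only explicitly granted tool/scope pairs are allowed."""
        cap = self.grants.get(agent_id, {}).get(tool)
        return cap is not None and scope in cap.scopes

    def describe(self, agent_id: str) -> dict:
        """Human-readable summary of what an agent is allowed to do."""
        return {tool: sorted(cap.scopes) for tool, cap in self.grants.get(agent_id, {}).items()}


registry = CapabilityRegistry()
registry.grant("support-agent", "crm", {"read"})
registry.grant("support-agent", "email", {"send"})
print(registry.check("support-agent", "crm", "write"))  # False
```

Because `describe` and `check` read the same data, the registry that documents an agent's capabilities is also the one that limits them.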

These technical approaches to governance offer the advantage of being built into the systems themselves rather than layered on top, making compliance more efficient and reducing the risk of governance gaps.

Why Governance Matters Now

Trust as a Market Differentiator

In an increasingly AI-driven market, trust becomes a crucial competitive advantage. Customers, partners, and stakeholders are demanding assurances about how AI systems make decisions that affect them. Organizations with robust governance frameworks can provide these assurances, winning business and partnerships that their competitors cannot secure.

This trust advantage extends beyond immediate business relationships. Investors are increasingly evaluating AI governance as part of due diligence, regulators are scrutinizing organizations with poor governance practices, and talent increasingly wants to work for organizations with strong ethical frameworks.

Regulatory Readiness

Early investment in governance frameworks avoids costly retrofitting as regulations evolve. Organizations that wait until regulations are finalized often find themselves scrambling to implement governance processes under pressure, leading to suboptimal solutions and higher costs.

Proactive governance also positions organizations to influence regulatory development. Regulators often look to industry leaders for guidance on practical implementation approaches, giving well-governed organizations a voice in shaping future requirements.

Risk Containment

High-profile AI failures can cause lasting damage to organizational reputation and market position. Robust governance frameworks help prevent these failures by identifying and addressing risks before they materialize. The cost of prevention is generally far lower than the cost of remediation after a major incident.

Risk containment benefits extend beyond avoiding negative outcomes. Well-governed AI systems operate more reliably, enabling organizations to deploy autonomous capabilities with confidence and achieve the full benefits of their AI investments.

Scalability as an Enabler

Strong governance frameworks actually enable greater AI deployment by providing the confidence and structure necessary to scale autonomous operations. Organizations with robust governance can deploy AI agents more widely and with greater autonomy because they have the oversight and control mechanisms necessary to manage the associated risks.

This scalability advantage becomes more important as AI capabilities continue to advance. Organizations that establish governance frameworks now will be positioned to take advantage of future AI innovations, while those without governance structures may find themselves unable to safely deploy more advanced capabilities.

Building a Governance Roadmap for Agentic AI

Assessment Phase

Organizations should begin by conducting comprehensive assessments of their current AI governance capabilities and identifying gaps specific to agentic AI. This assessment should cover existing policies, technical infrastructure, organizational structures, and compliance requirements.

The assessment should identify current AI deployments that might qualify as agentic, evaluate existing governance processes for their applicability to autonomous systems, and benchmark against industry best practices and regulatory requirements. This baseline assessment provides the foundation for developing targeted improvement plans.

Policy Design

The next phase involves mapping governance principles to specific operational practices. Organizations should develop policies that address each of the core governance principles while remaining practical and achievable within their specific context.

Policy design should involve stakeholders from across the organization, including technical teams, business leaders, legal and compliance professionals, and ethics specialists. The resulting policies should provide clear guidance for day-to-day operations while maintaining flexibility to adapt as AI capabilities evolve.

Implementation

Governance policies must be integrated into existing AI Ops and DevOps pipelines to ensure they become part of standard operations rather than an additional burden. This integration requires careful attention to workflow design, tool selection, and training programs.
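Integrating governance into a DevOps pipeline can be as simple as a gate step that fails the build when a release is missing required governance artifacts. The manifest fields below (model card, owner, risk tier, kill switch, rollback plan, and extra requirements for high-risk releases) are hypothetical examples, not a standard schema.

```python
import sys

# Hypothetical policy: artifacts every agent release must declare before deployment.
REQUIRED_FIELDS = ("model_card", "owner", "risk_tier", "kill_switch", "rollback_plan")
HIGH_RISK_EXTRA = ("human_oversight_plan", "red_team_report")


def governance_findings(manifest: dict) -> list:
    """List every missing or empty governance field; an empty list means the gate passes."""
    findings = [f"missing {name}" for name in REQUIRED_FIELDS if not manifest.get(name)]
    if manifest.get("risk_tier") == "high":
        findings += [
            f"high-risk release missing {name}" for name in HIGH_RISK_EXTRA if not manifest.get(name)
        ]
    return findings


def main(manifest: dict) -> int:
    findings = governance_findings(manifest)
    for finding in findings:
        print(f"GOVERNANCE GATE: {finding}", file=sys.stderr)
    # A non-zero exit code fails the CI job and blocks the deployment.
    return 1 if findings else 0
```

In CI, the manifest would be loaded from a file committed alongside the agent, for example `sys.exit(main(json.load(open("agent-manifest.json"))))` with a hypothetical file name. Running the gate on lower-risk releases first gives teams a chance to tune the required fields before it guards critical deployments.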

Implementation should be phased to allow for learning and adjustment. Organizations should start with lower-risk deployments to test governance processes before applying them to more critical or complex agent systems. This approach allows for iterative improvement while minimizing disruption.

Monitoring and Iteration

Governance frameworks must evolve continuously as AI capabilities advance and organizational needs change. Regular audits should assess the effectiveness of governance processes, identify emerging risks, and evaluate compliance with evolving regulatory requirements.

Feedback loops should capture input from all stakeholders, including technical teams implementing governance requirements, business leaders depending on AI capabilities, and external partners affected by AI decisions. This comprehensive feedback enables continuous improvement and adaptation.

Conclusion

The transition to agentic AI brings unprecedented opportunities and challenges. Organizations that establish robust governance frameworks now will gain significant competitive advantages through enhanced trust, regulatory readiness, effective risk management, and the ability to scale AI deployments safely.

The frameworks and principles outlined here provide a roadmap for building effective agentic AI governance, but implementation must be tailored to each organization's specific context and needs. The key is to start now, with the understanding that governance frameworks will evolve as AI capabilities advance.

For leaders considering their next steps, the choice is clear: establish or refine your agentic AI governance frameworks immediately. The organizations that act decisively now will shape the future of autonomous AI deployment, while those that delay will find themselves responding to standards and practices established by their more proactive competitors.

Looking ahead, agentic AI governance will continue evolving as a distinct discipline, requiring specialized expertise and dedicated resources. Organizations that invest in building this capability now will be positioned to lead in an increasingly autonomous future.