Governance by Design: Embedding Ethical Guardrails Directly into Agentic AI Architectures

As artificial intelligence systems gain increasing levels of autonomy, the traditional approach of adding compliance measures after deployment is proving inadequate. We need a new approach: Governance by Design, a proactive methodology that weaves ethical guardrails into the fabric of AI architectures from the ground up.

Why Governance Must Be Built In

Governance by Design is a critical response to the limitations of reactive oversight. Unlike traditional compliance frameworks that layer rules onto existing systems, this approach integrates ethical principles as core architectural components, ensuring responsible behavior is native rather than retrofitted.

Agentic AI systems can operate autonomously, making decisions and taking actions at scales and speeds that far exceed human oversight capabilities. When ethical considerations are treated as afterthoughts, risks compound rapidly. A trading algorithm can execute thousands of transactions before human intervention becomes possible. A customer service agent can interact with millions of users, each conversation carrying potential for harm or benefit.

Traditional compliance approaches, characterized by periodic audits, external monitoring, and post-incident corrections, cannot keep pace with autonomous systems. These reactive measures create a dangerous gap between AI capabilities and ethical safeguards, leaving organizations vulnerable to unintended consequences that can damage trust, violate regulations, and cause real harm.

The Core Principles of Governance by Design

Effective governance architecture has five interconnected principles that must be embedded throughout the system design.

Transparency ensures visibility into decision paths and agent behaviors. This goes beyond simple logging to include interpretable decision processes that stakeholders can understand and audit. Every action taken by an autonomous agent should be traceable back to its reasoning process, data inputs, and applicable policies.
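One way to make every action traceable is to emit a structured record at decision time that captures the inputs, a reasoning summary, and the policies consulted. The sketch below assumes a hypothetical `DecisionTrace` schema; the field names are illustrative, not a standard.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionTrace:
    """One traceable record per agent action (hypothetical schema)."""
    agent_id: str
    action: str
    inputs: dict       # data the agent saw when it decided
    rationale: str     # human-readable summary of the reasoning
    policies: tuple    # IDs of the policies evaluated for this action
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        # Sorted keys keep records stable and easy to diff during audits
        return json.dumps(asdict(self), sort_keys=True)


trace = DecisionTrace(
    agent_id="support-agent-7",
    action="issue_refund",
    inputs={"order_id": "A123", "amount": 40.0},
    rationale="Order arrived damaged; amount is below the auto-approval limit.",
    policies=("refund-policy-v3",),
)
print(trace.to_json())
```

Writing the record at decision time, rather than reconstructing it later from scattered logs, is what lets an auditor follow an action back to its inputs and policies.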

Accountability creates clear traceability from actions back to policies, rules, and responsible stakeholders. This principle demands robust attribution mechanisms that can identify not just what happened, but why it happened and who bears responsibility for the outcome. It requires systems that can connect autonomous decisions to human-defined objectives and constraints.

Fairness embeds bias detection and mitigation directly into model training and decision-making processes. Rather than treating fairness as a post-processing concern, this principle requires continuous monitoring for discriminatory patterns and automated correction mechanisms that activate before biased decisions reach affected parties.
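As a minimal sketch of monitoring that acts before biased decisions are released, the class below tracks approval rates per group over a sliding window and signals a hold when the gap between the highest and lowest rate (the demographic parity difference) exceeds a bound. The metric choice, window size, and thresholds are assumptions; demographic parity is only one of several fairness definitions.

```python
from collections import deque


class ApprovalRateMonitor:
    """Sliding-window bias check using demographic parity difference (hypothetical)."""

    def __init__(self, window: int = 500, max_gap: float = 0.10, min_samples: int = 30):
        self.events = deque(maxlen=window)  # (group, approved) pairs, oldest dropped first
        self.max_gap = max_gap
        self.min_samples = min_samples      # ignore groups with too little data

    def record(self, group: str, approved: bool) -> None:
        self.events.append((group, approved))

    def parity_gap(self) -> float:
        counts = {}
        for group, approved in self.events:
            n, k = counts.get(group, (0, 0))
            counts[group] = (n + 1, k + int(approved))
        rates = [k / n for n, k in counts.values() if n >= self.min_samples]
        return max(rates) - min(rates) if len(rates) >= 2 else 0.0

    def should_hold(self) -> bool:
        # When True, new decisions are routed to review instead of being released
        return self.parity_gap() > self.max_gap
```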

Safety and Security builds resilience against adversarial manipulation and unintended actions into the system's core architecture. This includes both cybersecurity measures to protect against external threats and internal safeguards to prevent the system from causing harm through unexpected behavior or goal misalignment.

Human-in-the-loop Thresholds establish clear escalation protocols that activate when risk or ambiguity crosses predefined boundaries. These thresholds must be carefully calibrated to preserve system efficiency while ensuring human oversight for high-stakes decisions.
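An escalation threshold can be as simple as two calibrated numbers: a ceiling on estimated risk and a floor on the agent's confidence. The sketch assumes the agent already produces both scores in [0, 1]; the default values are placeholders to be tuned, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    max_risk: float = 0.7        # escalate when estimated risk exceeds this
    min_confidence: float = 0.6  # escalate when the agent is too uncertain


def route(risk: float, confidence: float, t: Thresholds = Thresholds()) -> str:
    """Return 'human_review' or 'auto' for a proposed action."""
    if risk > t.max_risk or confidence < t.min_confidence:
        return "human_review"
    return "auto"


print(route(0.2, 0.9))  # low risk, confident: handled autonomously
print(route(0.9, 0.9))  # high risk: escalated regardless of confidence
```

Checking ambiguity (low confidence) separately from risk means a low-stakes but confusing case still reaches a human.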

Where Ethical Guardrails Belong in the Architecture

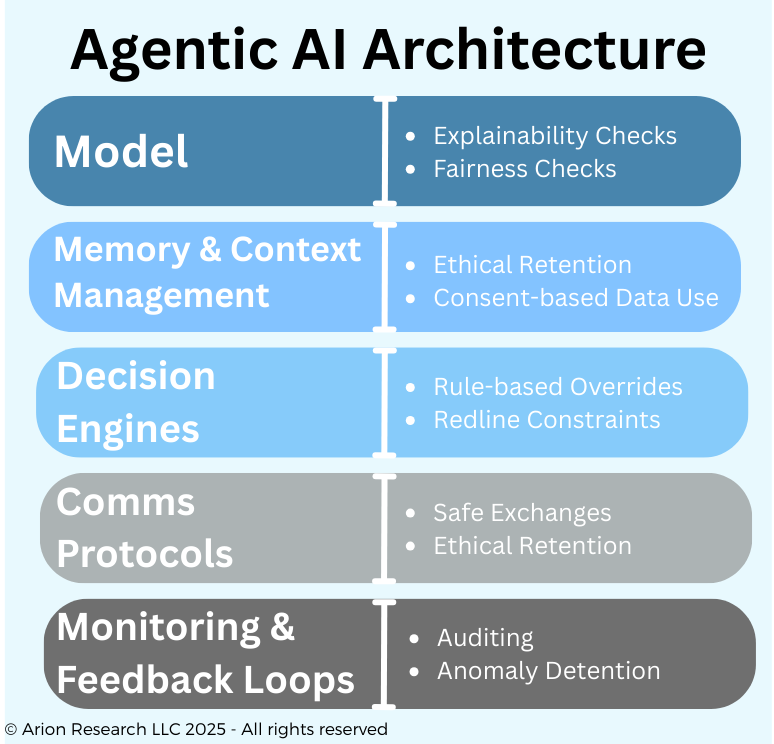

Implementing Governance by Design requires strategic placement of ethical controls throughout the AI system architecture. Each layer serves a specific role in ensuring responsible autonomous behavior.

The Model Layer forms the foundation, where explainability and fairness checks must be embedded directly into the neural networks and algorithms that drive decision-making. This includes attribution techniques, such as attention visualization or feature-importance scores, that indicate which inputs influence specific outputs; fairness constraints that prevent discriminatory patterns from forming during training; and interpretability modules that can generate human-readable explanations for model behavior.

Memory and Context Management systems control how agents retain, access, and forget information, ensuring ethical data handling practices are automatic rather than optional. This layer implements consent-based data use policies, automated data retention schedules, and privacy-preserving techniques that protect sensitive information while maintaining system functionality.
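The sketch below shows one way to make consent and retention automatic: the memory store refuses to retain data without consent for its purpose and purges expired items on every read. The `GovernedMemory` class, its purpose labels, and the single retention period are hypothetical simplifications.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class MemoryItem:
    user_id: str
    content: str
    purpose: str        # why the data was collected, e.g. "support"
    created: datetime


class GovernedMemory:
    """Agent memory that enforces consent and retention on every access (hypothetical)."""

    def __init__(self, retention: timedelta, consents: dict[str, set[str]]):
        self.retention = retention
        self.consents = consents  # user_id -> purposes the user agreed to
        self.items: list[MemoryItem] = []

    def store(self, item: MemoryItem) -> bool:
        if item.purpose not in self.consents.get(item.user_id, set()):
            return False          # no consent for this purpose: do not retain
        self.items.append(item)
        return True

    def purge_expired(self, now: datetime) -> int:
        before = len(self.items)
        self.items = [i for i in self.items if now - i.created < self.retention]
        return before - len(self.items)

    def recall(self, user_id: str, purpose: str, now: datetime) -> list[str]:
        self.purge_expired(now)   # expired data is never returned
        if purpose not in self.consents.get(user_id, set()):
            return []
        return [i.content for i in self.items
                if i.user_id == user_id and i.purpose == purpose]
```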

Decision Engines house rule-based overrides, constraint enforcement mechanisms, and escalation triggers that can halt or redirect autonomous actions when they conflict with ethical guidelines. These systems must balance flexibility with firm boundaries, allowing innovation within safe parameters while preventing harmful outcomes.
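A decision engine of this kind can be sketched as an ordered list of rules, each of which may block, modify, or escalate a proposed action. The action types, limits, and priority order below are hypothetical; the point is that the first matching rule wins and anything unmatched passes through unchanged.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Action:
    kind: str
    amount: float


@dataclass(frozen=True)
class Verdict:
    decision: str               # "allow", "modify", "block", or "escalate"
    action: Optional[Action]    # the action to execute, if any
    reason: str


# Each rule returns a Verdict, or None if it does not apply to the action
Rule = Callable[[Action], Optional[Verdict]]


def block_prohibited(a: Action) -> Optional[Verdict]:
    if a.kind in {"delete_account", "wire_offshore"}:
        return Verdict("block", None, f"{a.kind} is prohibited")
    return None


def escalate_large(a: Action) -> Optional[Verdict]:
    if a.amount > 10_000:
        return Verdict("escalate", a, "amount above autonomous limit")
    return None


def cap_discount(a: Action) -> Optional[Verdict]:
    if a.kind == "discount" and a.amount > 50:
        # Redirect rather than refuse: execute a safe version of the action
        return Verdict("modify", Action(a.kind, 50.0), "discount capped at 50")
    return None


RULES: list[Rule] = [block_prohibited, escalate_large, cap_discount]  # priority order


def decide(a: Action) -> Verdict:
    for rule in RULES:
        verdict = rule(a)
        if verdict is not None:
            return verdict      # first matching rule wins
    return Verdict("allow", a, "no rule triggered")
```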

Communication Protocols govern how agents interact with each other, with humans, and with external systems, ensuring all exchanges adhere to ethical standards. This includes preventing the spread of misinformation between agents, maintaining appropriate boundaries in human interactions, and securing sensitive data during inter-system communications.
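For the data-protection part of this layer, a common building block is a redaction step applied to every outbound message. The two regular expressions below are illustrative assumptions that catch only simple email and card-number formats; a production system would rely on a vetted PII detector.

```python
import re

# Hypothetical patterns; real deployments need broader, tested detectors
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
}


def redact(message: str) -> str:
    """Mask sensitive values before a message leaves the agent boundary."""
    for label, pattern in PATTERNS.items():
        message = pattern.sub(f"[{label}]", message)
    return message


print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111"))
```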

Monitoring and Feedback Loops create continuous governance signals through auditing mechanisms, anomaly detection systems, and performance metrics that track ethical compliance in real time. These systems must be designed for early warning rather than post-incident analysis, enabling proactive intervention before problems escalate.
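As an early-warning example, the sketch below flags a governance metric (for instance, hourly counts of blocked actions) when it drifts far from its recent baseline, using a rolling z-score. The metric, window length, and threshold of three standard deviations are assumptions; other anomaly detectors would serve equally well.

```python
import statistics
from collections import deque


class ZScoreAlarm:
    """Rolling z-score alarm for a governance metric (hypothetical sketch)."""

    def __init__(self, window: int = 24, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # recent baseline values
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the recent baseline."""
        alarm = False
        if len(self.history) >= 3:           # need a minimal baseline first
            mean = statistics.fmean(self.history)
            stdev = statistics.stdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                alarm = True
        self.history.append(value)
        return alarm
```

Because the alarm fires on the observation itself, operators can intervene while the deviation is still developing rather than after a periodic report.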

Practical Design Patterns for Ethical Guardrails

Successful implementation requires proven architectural patterns that translate ethical principles into working code and system behaviors.

Policy-as-Code transforms organizational ethics and regulatory requirements into executable rules that govern agent behavior automatically. This pattern treats ethical guidelines not as vague aspirations but as precise specifications that can be version-controlled, tested, and deployed like any other software component.
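A minimal sketch of the pattern: policies live as versioned data (here an inline JSON string standing in for a file under version control) and a small generic interpreter evaluates them against proposed actions. The rule schema, IDs, and version string are hypothetical; dedicated engines such as Open Policy Agent fill the same role with a full policy language.

```python
import json
import operator

# Hypothetical policy document; in practice it would live in version control
POLICY_JSON = """
{
  "version": "2024.06.1",
  "rules": [
    {"id": "max-refund", "applies_to": "refund",
     "field": "amount", "op": "le", "value": 500},
    {"id": "no-minors", "applies_to": "credit_offer",
     "field": "customer_age", "op": "ge", "value": 18}
  ]
}
"""

OPS = {"le": operator.le, "ge": operator.ge, "lt": operator.lt,
       "gt": operator.gt, "eq": operator.eq}


def violations(policy: dict, action: str, params: dict) -> list[str]:
    """Return the IDs of every rule the proposed action would break."""
    broken = []
    for rule in policy["rules"]:
        if rule["applies_to"] != action:
            continue
        value = params.get(rule["field"])
        # A missing field counts as a violation: fail closed rather than open
        if value is None or not OPS[rule["op"]](value, rule["value"]):
            broken.append(rule["id"])
    return broken


policy = json.loads(POLICY_JSON)
print(violations(policy, "refund", {"amount": 750}))  # ['max-refund']
```

Because the rules are plain data, a policy change is a reviewable diff, and the interpreter can be unit tested like any other component.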

Sandboxed Autonomy provides agents with operational freedom within carefully defined boundaries, similar to how operating systems isolate applications to prevent system-wide damage. Agents can innovate and adapt within their sandbox while being unable to violate core ethical constraints or access restricted resources.
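At the application level, a sandbox can be approximated by exposing only allow-listed tools and capping how many calls an agent may make. The `ToolSandbox` class and its budget are hypothetical; in a real deployment, OS-level isolation such as containers or seccomp filters would sit underneath this layer.

```python
from typing import Any, Callable


class SandboxViolation(Exception):
    """Raised when an agent tries to act outside its sandbox."""


class ToolSandbox:
    """Allow-listed tool access with a call budget (hypothetical sketch)."""

    def __init__(self, tools: dict[str, Callable[..., Any]], allowed: set[str], max_calls: int):
        self._tools = tools
        self._allowed = allowed
        self._remaining = max_calls

    def call(self, name: str, *args: Any, **kwargs: Any) -> Any:
        if name not in self._allowed or name not in self._tools:
            raise SandboxViolation(f"tool '{name}' is outside the sandbox")
        if self._remaining <= 0:
            raise SandboxViolation("call budget exhausted")
        self._remaining -= 1
        return self._tools[name](*args, **kwargs)
```

Inside the allow-list the agent is free to choose how and when to call tools; it simply has no path to restricted resources.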

Adaptive Oversight scales governance mechanisms proportionally to the complexity and autonomy level of different agents and decisions. Low-risk, routine actions operate with minimal oversight, while high-stakes decisions trigger enhanced monitoring and approval processes.
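Proportional oversight can be expressed as a mapping from risk features to an oversight tier. The features (impact, reversibility, novelty), the weighting that doubles the impact of irreversible actions, and the cut-off values are all assumptions chosen for illustration.

```python
from enum import Enum


class Oversight(Enum):
    LOG_ONLY = "log_only"              # routine: act and record the trace
    SAMPLED_REVIEW = "sampled_review"  # moderate: act, humans audit a sample
    PRE_APPROVAL = "pre_approval"      # high stakes: wait for human sign-off


def oversight_for(impact: float, reversible: bool, novelty: float) -> Oversight:
    """Map hypothetical risk features (impact and novelty in [0, 1]) to a tier."""
    score = impact * (1.0 if reversible else 2.0) + 0.5 * novelty
    if score >= 1.0:
        return Oversight.PRE_APPROVAL
    if score >= 0.4:
        return Oversight.SAMPLED_REVIEW
    return Oversight.LOG_ONLY
```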

Multi-Agent Checks and Balances deploy specialized monitoring agents that observe and evaluate the ethical compliance of operational agents, creating a distributed system of peer review and accountability. This pattern prevents the concentration of power in any single autonomous system while maintaining efficiency.
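A simple form of checks and balances is a quorum: an operational agent's action runs only if enough independent monitors approve it. The three monitor functions below are hypothetical stand-ins for separate agents, each responsible for one concern.

```python
from typing import Callable

# A reviewer is any independent checker: another model, a rules engine, etc.
Reviewer = Callable[[dict], bool]


def checked_execute(action: dict, reviewers: list[Reviewer], quorum: int,
                    execute: Callable[[dict], str]) -> str:
    """Run `action` only if at least `quorum` reviewers approve it."""
    approvals = sum(1 for review in reviewers if review(action))
    if approvals < quorum:
        return f"rejected ({approvals}/{len(reviewers)} approvals)"
    return execute(action)


# Hypothetical monitor agents, each checking a different concern
def policy_monitor(a: dict) -> bool:
    return a.get("amount", 0) <= 1_000


def fraud_monitor(a: dict) -> bool:
    return a.get("recipient") in {"acme", "globex"}  # vetted counterparties


def privacy_monitor(a: dict) -> bool:
    return not a.get("shares_pii", False)


MONITORS = [policy_monitor, fraud_monitor, privacy_monitor]
```

Requiring unanimity (quorum equal to the number of monitors) gives each concern a veto; a lower quorum trades some safety for fewer blocked actions.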

Dynamic Guardrails enable the evolution of ethical rules as regulations change, social norms shift, and organizational values develop. These systems can update their behavioral constraints without requiring complete redeployment, ensuring continued compliance in changing environments.
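Updating constraints without redeployment can be sketched as a registry that swaps an immutable rule set in a single reference assignment and reports which version each check used. The `GuardrailRegistry` class and its per-action limits are hypothetical.

```python
import threading
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class RuleSet:
    version: int
    limits: MappingProxyType  # read-only mapping: action -> max amount


class GuardrailRegistry:
    """Holds the active rule set and replaces it atomically at runtime (hypothetical)."""

    def __init__(self, initial: dict[str, float]):
        self._lock = threading.Lock()  # serializes concurrent updates
        self._active = RuleSet(1, MappingProxyType(dict(initial)))

    def update(self, new_limits: dict[str, float]) -> int:
        with self._lock:
            nxt = RuleSet(self._active.version + 1, MappingProxyType(dict(new_limits)))
            self._active = nxt  # single reference swap: readers never see a partial update
            return nxt.version

    def allowed(self, action: str, amount: float) -> tuple[bool, int]:
        rules = self._active    # snapshot so one check uses exactly one version
        limit = rules.limits.get(action)
        # Unknown actions are denied (fail closed)
        return (limit is not None and amount <= limit), rules.version
```

Returning the rule version alongside each decision lets audits tie an outcome to the exact constraints in force at the time.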

Real-World Scenarios

The practical value of Governance by Design becomes clear through specific industry applications where autonomous agents must navigate complex ethical landscapes.

In Financial Services, autonomous trading agents pose significant risks if left unchecked. Governance by Design prevents these systems from exploiting regulatory loopholes, manipulating markets, or making decisions that favor short-term profits over long-term stability. Embedded guardrails ensure compliance with trading regulations, prevent conflicts of interest, and maintain market fairness even when operating at superhuman speeds.
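To make "operating at superhuman speeds" safe, trading systems typically place checks in front of every order. The sketch below assumes three hypothetical controls: a per-order notional cap, a rolling order-rate limit, and a kill switch that halts all trading once the rate limit is breached so that a human must intervene. Limits and messages are illustrative.

```python
import time
from collections import deque
from typing import Optional


class PreTradeGuard:
    """Hypothetical pre-trade checks: notional cap, rate throttle, kill switch."""

    def __init__(self, max_notional: float, max_orders: int, per_seconds: float):
        self.max_notional = max_notional
        self.max_orders = max_orders
        self.per_seconds = per_seconds
        self.sent = deque()  # timestamps of accepted orders
        self.halted = False

    def check(self, qty: int, price: float, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        if self.halted:
            return "rejected: kill switch engaged"
        if qty * price > self.max_notional:
            return "rejected: notional limit"
        while self.sent and now - self.sent[0] >= self.per_seconds:
            self.sent.popleft()  # drop orders outside the rolling window
        if len(self.sent) >= self.max_orders:
            self.halted = True   # runaway order flow: stop and wait for a human
            return "rejected: rate limit, trading halted"
        self.sent.append(now)
        return "accepted"
```

Halting entirely on a rate breach is a deliberately conservative choice; a gentler design would only throttle and alert.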

Healthcare applications demand the highest ethical standards: autonomous diagnostic and treatment-recommendation systems must protect patient privacy while avoiding harmful medical advice. Governance by Design ensures these systems cannot access unauthorized patient data, always treat patient safety as the primary objective, and escalate complex cases to human medical professionals when appropriate.

Supply Chain automation increasingly relies on autonomous procurement and logistics agents that must enforce sustainable and ethical sourcing standards. These systems can evaluate supplier practices, detect potential human rights violations, and prioritize environmentally responsible options while maintaining cost efficiency and delivery requirements.

Customer Service agents interact with millions of users daily, creating vast potential for both positive and negative impacts. Governance by Design prevents these systems from spreading misinformation, engaging in manipulative personalization tactics, or discriminating against certain customer groups while maintaining helpful and efficient service delivery.

Measuring Success: Metrics for Governance by Design

Effective governance requires measurable outcomes that demonstrate both ethical compliance and system performance. Organizations must track multiple dimensions of success to ensure their Governance by Design implementation achieves its intended goals.

The ratio of policy violations prevented before execution to those detected afterward provides insight into the proactive effectiveness of embedded guardrails. A mature system should prevent far more violations than it detects after the fact, indicating that ethical constraints are working as designed rather than merely documenting failures.
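A bounded variant of this metric is the share of all violations that were stopped before execution. The sketch assumes a hypothetical event log in which each violation is tagged with the stage at which it was caught.

```python
import math
from collections import Counter


def prevention_rate(events: list[dict]) -> float:
    """Fraction of violations stopped by guardrails before execution.

    Each event is a hypothetical record whose 'stage' is either
    'prevented' (blocked pre-execution) or 'detected' (found afterwards).
    """
    stages = Counter(e["stage"] for e in events)
    total = stages["prevented"] + stages["detected"]
    # Undefined when no violations were recorded at all
    return stages["prevented"] / total if total else math.nan
```

A value close to 1.0 means most violations never reached the world; a falling value is an early sign that guardrails are lagging behind agent behavior.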

Transparency scores measure the auditability and explainability of autonomous decisions across different system components and decision types. These metrics should track not just whether explanations are available, but whether they are accurate, useful, and accessible to relevant stakeholders.

Escalation frequency and appropriateness indicate whether human-in-the-loop thresholds are calibrated correctly. Too many escalations suggest overly conservative settings that limit efficiency, while too few may indicate blind spots in risk assessment.
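Both halves of this metric can be computed from review records: how often the system escalates, and how often reviewers agreed the escalation was needed. The record fields below are hypothetical. Note that a low escalation rate cannot by itself reveal blind spots; that requires auditing a sample of non-escalated decisions as well.

```python
def escalation_stats(decisions: list[dict]) -> dict[str, float]:
    """Escalation rate plus the share of escalations reviewers judged necessary.

    Hypothetical record fields: 'escalated' (bool) and, for escalated
    items, 'warranted' (bool) as recorded by the human reviewer.
    """
    escalated = [d for d in decisions if d["escalated"]]
    rate = len(escalated) / len(decisions) if decisions else 0.0
    warranted = sum(1 for d in escalated if d.get("warranted"))
    precision = warranted / len(escalated) if escalated else float("nan")
    return {"escalation_rate": rate, "escalation_precision": precision}
```

A high rate combined with low precision points to overly conservative thresholds.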

Fairness indicators track bias audits across different types of decisions and affected populations. These metrics must be sensitive to intersectional discrimination and capable of detecting subtle forms of bias that may not be immediately apparent.
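Intersectional effects are easy to miss when groups are audited one attribute at a time. The sketch computes positive-outcome rates for every combination of chosen attributes and the ratio of the lowest to the highest rate; the 0.8 reference point echoes the "four-fifths" rule of thumb used in US employment contexts. Field names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records: list[dict], attributes: list[str]) -> dict[tuple, float]:
    """Positive-outcome rate for every combination of the given attributes."""
    totals, positives = defaultdict(int), defaultdict(int)
    for r in records:
        key = tuple(r[a] for a in attributes)  # e.g. ('F', 'old')
        totals[key] += 1
        positives[key] += int(r["outcome"])
    return {k: positives[k] / totals[k] for k in totals}


def impact_ratio(rates: dict[tuple, float]) -> float:
    """Lowest group rate divided by highest; values below 0.8 are a common red flag."""
    hi = max(rates.values())
    return min(rates.values()) / hi if hi else 1.0
```

In the test data below, outcomes look perfectly balanced by gender alone, yet the gender-by-age breakdown exposes subgroups that never receive a positive outcome.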

Trust signals from customers, regulators, and employees provide external validation of governance effectiveness. These qualitative measures complement quantitative metrics by capturing the human impact of ethical AI deployment.

Challenges and Tradeoffs

Implementing Governance by Design requires navigating several inherent tensions and practical challenges that organizations must address thoughtfully.

Balancing agility versus control creates ongoing tension in fast-evolving AI systems. Embedded guardrails can slow development and deployment cycles, potentially hampering an organization's competitive position. However, the cost of post-deployment ethical failures often far exceeds the investment in proactive governance, making this a strategic rather than purely technical decision.

The economics of embedding governance early versus retrofitting it later involve complex cost-benefit calculations. While upfront investment in governance architecture requires significant resources, retrofitting ethical controls onto existing systems often costs more and tends to achieve inferior results. Organizations must weigh immediate budget pressures against long-term risk mitigation.

Global ethical diversity complicates the definition of universal fairness standards. What constitutes ethical behavior varies significantly across cultures, legal systems, and social contexts. Governance by Design must accommodate this diversity while maintaining coherent operational principles, often requiring region-specific customization of ethical rules.

Over-constraining agents poses the risk of limiting innovation and reducing system effectiveness. Overly restrictive guardrails can prevent beneficial emergent behaviors and novel problem-solving approaches. The challenge lies in defining boundaries that ensure safety without stifling the creative potential of autonomous systems.

Roadmap to Implementation

Organizations can adopt Governance by Design through a structured approach that builds ethical capabilities incrementally while maintaining operational continuity.

Step 1: Define ethical standards that align corporate values with regulatory requirements and stakeholder expectations. This process requires cross-functional collaboration to translate abstract principles into specific, actionable guidelines that can inform technical implementation.

Step 2: Transform these principles into enforceable design rules through policy-as-code methodologies. Technical teams must work closely with legal, ethics, and compliance professionals to create machine-readable specifications that capture the nuance of human ethical judgment.

Step 3: Embed guardrails across the complete agent lifecycle, from initial training through deployment and ongoing operation. This requires architectural changes to existing systems and the development of new governance infrastructure components.

Step 4: Establish continuous monitoring and governance evolution capabilities that can adapt to changing requirements and emerging risks. Organizations must build processes for updating ethical rules, monitoring their effectiveness, and improving governance mechanisms based on real-world performance.

Step 5: Create cross-functional governance councils that bring together expertise from IT, legal, ethics, and compliance domains. These bodies provide ongoing oversight and strategic direction for governance architecture evolution.

The Future of Trustworthy Autonomy

The trajectory toward widespread adoption of autonomous AI systems depends on establishing trustworthy governance frameworks. Organizations that treat governance as an enabler of trust, innovation, and adoption rather than a constraint will gain significant competitive advantages in the emerging digital workforce era.

Governance by Design transforms ethical compliance from a burden into a strategic capability. When ethical guardrails are native to AI architectures rather than bolted-on additions, they enable greater autonomy with confidence, faster regulatory approval, and stronger stakeholder trust. This approach allows organizations to realize the full potential of AI while managing associated risks responsibly.

The future belongs to organizations that recognize governance as native to AI systems rather than external to them. Agentic AI will only achieve its transformative potential when ethical considerations are as embedded and automatic as computational processes themselves.

The time for half-measures has passed. Organizations should embrace Governance by Design as a core capability for the digital workforce era, treating it not as a compliance checkbox but as the foundation for trustworthy autonomy at scale. The choice is not whether to implement governance, but whether to do so proactively through design or reactively through crisis response. The organizations that choose wisely will define the future of ethical AI deployment.