Generative AI Adoption

I’ve just finished collecting responses to an AI adoption survey this week and started looking at some of the preliminary data today. The survey has 402 completes from respondents who meet the following criteria:

North America

Employed full or part time

Over 18 years old

Involved in their organization’s AI initiatives in one of four ways:

A user of AI tools and solutions

A leader of AI initiatives

On a team exploring and / or implementing AI

An influencer on AI strategy

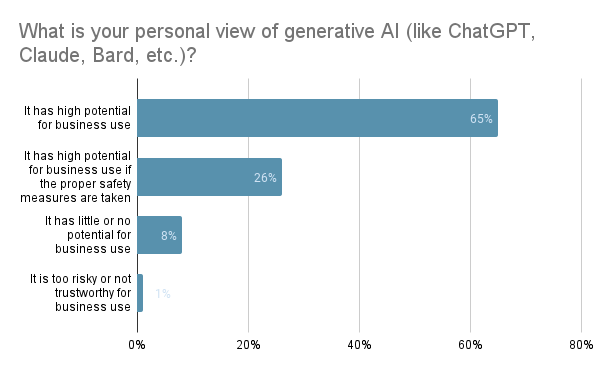

The report will take a broad look at AI adoption across a number of topics, but this post covers the preliminary data on generative AI adoption. Look for the full research report in mid-August at arionresearch.com/research-reports.

Since last fall there has been a rapidly growing obsession with generative AI, particularly OpenAI’s ChatGPT. A number of open and closed large language models (LLMs) from a variety of startups are gaining users at a whirlwind pace, and several cloud providers offer open integrations and LLMs of their own. The buzz around generative AI started out mostly positive, but as with most technologies, a darker side quickly emerged. For generative AI, the concerns range from the capability to generate overwhelming misinformation campaigns to security and privacy challenges. To better understand the respondents’ personal views of generative AI, we asked for their opinion of it:

© Arion Research LLC 2023 - All rights reserved

As you can see from the chart above, the majority of respondents hold a very positive and optimistic opinion. Considering some of the negative information being discussed lately, it is perhaps a little surprising that only about one in four respondents chose “It has potential for business use if the proper safety measures are taken.” The extremely low response to the “risky or not trustworthy for business use” answer is likely due to the strong affinity and optimism for AI in the respondent population: the survey targeted individuals who are involved in AI in their current organization, either by shaping strategy or by using AI-enabled tools. I’d expect that share to be much higher if the survey included a broader representation of the general population.

The use of generative AI-enabled solutions in business is growing at breakneck speed. The following chart gives some indication of just how broadly the use of generative AI has spread:

Nearly three quarters of the respondent organizations are already using generative AI solutions, although 27% are still at the pilot phase. On top of those already using generative AI, another one in four plan to implement a solution within 12 months. Among respondents planning, implementing and / or using AI-enabled tools, generative AI has a lot of momentum. In another question on the survey we asked, “What is your organization's primary reason for using or planning to use AI?” The three leading answers were “improve operational efficiency” (30%), “cybersecurity” (24%) and “improve customer experience (CX)” (17%). All of these use cases offer many ways to include generative AI in the solution. For operational efficiency and CX, the options range from virtual assistants to intelligent chatbots.
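
To put the adoption percentages in terms of people, here is a minimal sketch that converts them into approximate respondent counts. It rests on several assumptions that are mine, not the survey's: that each share is a share of all 402 completes, that "nearly three quarters" is the 72% quoted in the Spending section, and that "one in four" is close to 25%. The names `shares` and `approx_count` are purely illustrative.

```python
TOTAL_COMPLETES = 402  # survey completes reported at the top of this post

# Shares quoted in the text. Assumption: each is a share of ALL respondents.
shares = {
    "using generative AI (incl. pilots)": 0.72,      # "nearly three quarters"
    "pilot phase only": 0.27,
    "planning to implement within 12 months": 0.25,  # "one in four", approximated
}


def approx_count(share, total=TOTAL_COMPLETES):
    """Convert a rounded share into an approximate respondent count."""
    return round(share * total)


counts = {label: approx_count(share) for label, share in shares.items()}

for label, n in counts.items():
    print(f"{label}: ~{n} of {TOTAL_COMPLETES}")
```

Because the published percentages are already rounded, the counts are only approximations and should not be read as exact figures from the data set.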

Generative AI in Cybersecurity

Generative AI can be a powerful tool in cybersecurity across a range of applications. Here are several use cases:

Threat Intelligence: generate possible cybersecurity threat scenarios. By understanding the characteristics of past threats, AI can generate models of possible future threats, aiding in proactive cybersecurity.

Penetration Testing: conduct penetration testing, automatically generating and executing test cases. This helps keep systems secure and robust by continuously checking for vulnerabilities.

Phishing Detection: create models that learn the patterns of phishing emails and websites and then generate phishing examples for training detection systems.

Malware Analysis: by understanding the structure of existing malware, generative models can create examples of potential future malware. This can help cybersecurity teams make more effective preparations and come up with new strategies to tackle these new types of threats.

Security Awareness Training: generate realistic phishing attempts, social engineering scenarios, and other threat situations for security training purposes. It's a way to keep staff updated about the evolving tactics used by cybercriminals.

Digital Identities: for the purpose of testing, generative AI can create entire digital identities, complete with behavior patterns. This could be useful in setting up honeypots, decoy systems meant to lure attackers and study their methods.

False Flag Operations: In some situations, it can be beneficial for an organization to create false flag operations that confuse or misdirect attackers.

User Behavior Simulation: simulate user behavior, which can be analyzed to identify normal behavior patterns. Any deviation from these patterns could be a sign of a cyberattack.

Password Cracking and Strengthening: help generate possible password combinations to aid in penetration testing, as well as to create stronger passwords that are harder to crack.

Data Augmentation: In situations where there's limited cybersecurity data for training detection algorithms, generative AI can help generate synthetic data that expands the training set and potentially improves detection capabilities.

Note, though, that while generative AI offers several advantages in cybersecurity, adversaries can also use it for malicious purposes, such as creating more sophisticated phishing emails or malware. This underlines the need for continued advancements in cybersecurity defenses.
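
The user behavior simulation idea from the list above can be sketched in a few lines. This is only an illustration under loud assumptions: a plain random number generator stands in for a generative model, "behavior" is reduced to a single made-up metric (logins per day), and the three-standard-deviation threshold is an arbitrary choice. The function names are hypothetical.

```python
import random
import statistics


def simulate_daily_logins(days, mean=20.0, spread=3.0, seed=0):
    """Stand-in for a generative model: draw synthetic 'normal' daily login counts."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    return [max(0.0, rng.gauss(mean, spread)) for _ in range(days)]


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the baseline mean."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    return abs(value - mu) > threshold * sigma


# Build a baseline of simulated normal behavior, then test new observations against it.
baseline = simulate_daily_logins(days=90)

print(is_anomalous(21, baseline))  # a value close to the simulated norm
print(is_anomalous(95, baseline))  # a value far outside it
```

A real deployment would model many signals at once and would need tuning to keep false alarms manageable; the point here is only the shape of the approach: simulate normal behavior, then measure deviation from it.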

Open or Closed LLMs

Just over half of respondents are using both closed and open LLMs. Another third are using mainly open LLMs. It’s worth a quick comparison of the two:

LLMs like OpenAI’s GPT-4 can be considered either open or closed systems. These classifications mostly relate to how the models interact with external data sources and the extent to which they can learn from new information after training.

Open LLMs

Learning Capability: Open systems are capable of continuous learning. They can access and learn from new data after their initial training phase.

Data Access: They often have the ability to access real-time data from the internet or other external data sources. This allows them to provide information or generate responses that are up-to-date.

Accuracy of Information: Given the real-time data access, their responses may be more accurate when it comes to current events, trends, or new knowledge.

Data Privacy and Security: Open systems could potentially pose a greater risk in terms of data privacy and security since they pull information from various external sources. The handling and storing of data would need to be monitored very closely.

Ethical and Bias Concerns: With continuous learning, there is also an increased risk of the model learning and propagating biases present in the new data it encounters.

Closed LLMs

Learning Capability: Closed systems do not have the ability to learn after their initial training phase. Their responses are based solely on the data they were trained on.

Data Access: They cannot access real-time data from the internet or other external sources. Therefore, their knowledge is limited to the information available up to the point of their last training.

Accuracy of Information: Given their lack of real-time data access, their responses may be outdated when it comes to recent developments or knowledge.

Data Privacy and Security: Closed systems can have a higher level of data privacy and security because they do not need to pull in or interact with new data.

Ethical and Bias Concerns: While biases can be present in closed systems based on their initial training data, there's less risk of them acquiring new biases as they do not continuously learn from new data.

The choice between open and closed LLMs depends largely on the specific requirements of the use case, taking into account factors like the need for up-to-date information, data privacy and security concerns, and the capacity to manage and mitigate biases.
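
The distinction drawn above can be illustrated with a toy sketch. Nothing here is a real LLM or a real API: the classes `ClosedModel` and `OpenModel`, the dictionary standing in for trained knowledge, and the `lookup` callable standing in for an external data source are all hypothetical.

```python
from typing import Callable, Dict, Optional


class ClosedModel:
    """Answers only from knowledge frozen at training time."""

    def __init__(self, trained_knowledge: Dict[str, str]):
        # Copy the dict so later changes to the source have no effect.
        self._knowledge = dict(trained_knowledge)

    def answer(self, question: str) -> str:
        return self._knowledge.get(question, "unknown")


class OpenModel(ClosedModel):
    """Checks an external source first, then falls back to trained knowledge."""

    def __init__(self, trained_knowledge: Dict[str, str],
                 lookup: Callable[[str], Optional[str]]):
        super().__init__(trained_knowledge)
        self._lookup = lookup  # hypothetical hook to an external data source

    def answer(self, question: str) -> str:
        fresh = self._lookup(question)
        return fresh if fresh is not None else super().answer(question)


trained = {"latest release": "v1"}      # what both models learned in training
live_source = {"latest release": "v2"}  # newer information outside the model

closed = ClosedModel(trained)
open_model = OpenModel(trained, live_source.get)

print(closed.answer("latest release"))
print(open_model.answer("latest release"))
```

The same `lookup` hook that keeps the open variant current is also where outside data enters the system, which is why the privacy, security and bias concerns listed above attach to it.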

Spending

Considering the current buzz around generative AI, it’s not really surprising that 87% of the respondents are planning on increasing spending over the next 12 months. Taking into account that 72% of the respondents are already using generative AI in some capacity, the spend over the next 12 months will likely be significant.

Generative AI for business use cases is clearly getting widespread attention and use. Using generative AI in business can be highly beneficial, with applications ranging from automated customer service and content creation to decision-making support and personalized recommendations. However, there are also several potential risks and challenges associated with its use. Some of the major risks are:

Data privacy and security: AI models are typically trained on large datasets, and businesses often use sensitive customer or company data for this purpose. If not properly managed, this could result in data breaches or violations of privacy regulations.

Bias and fairness: AI models learn from the data they're trained on. If this data contains biases (e.g., in terms of race, gender, age), the AI could perpetuate or even exacerbate these biases in its outputs. This can lead to unfair or discriminatory results, which could harm the business's reputation and potentially lead to legal consequences.

Accuracy and reliability: Generative AI models are not perfect and may generate incorrect or nonsensical outputs. Depending on the use case, this could have serious implications. For example, if an AI model is used to generate financial forecasts, inaccuracies could lead to poor business decisions.

Lack of transparency and interpretability (black box problem): It's often difficult to understand exactly how complex AI models make their decisions. This lack of transparency can make it hard to identify and correct problems, and can also cause issues with trust among users or customers.

Legal and ethical considerations: There are still many unresolved legal and ethical questions surrounding the use of AI. For example, who is responsible if an AI makes a decision that leads to harm? Can AI-generated content be copyrighted? Businesses using AI must navigate these questions.

Dependence on AI: Overreliance on AI systems can make businesses vulnerable to technical glitches or failures. This can be a serious risk if the AI is used for mission-critical tasks.

Misuse of technology: Like any tool, AI can be used for malicious purposes. For example, AI-generated deepfakes (hyper-realistic fake videos or audio) can be used for fraud or disinformation.

Job displacement: As AI systems become more capable, there's a risk that they could displace human workers in certain roles. This can have societal impacts and also affect the morale of remaining employees.

Mitigating these risks requires careful planning and ongoing management. This might involve using robust data privacy and security measures, implementing methods for reducing bias, establishing rigorous testing procedures to ensure accuracy and reliability, and developing strategies for managing the ethical and societal impacts of AI.

Check back over the next couple of weeks for the full report on the survey, in which we look at everything from the most prevalent use cases to challenges and risks.